MuleSoft MCIA-LEVEL-1 Exam Questions

Questions for the MCIA-LEVEL-1 were updated on: Jul 05, 2025

Question 1

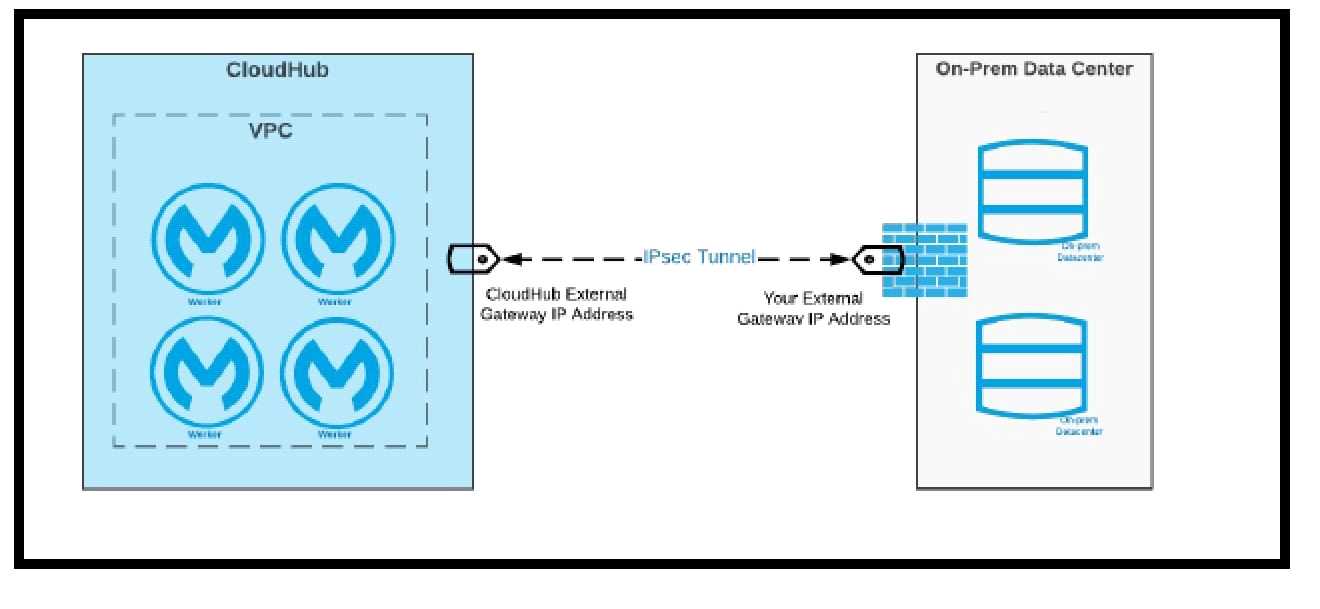

Mule applications need to be deployed to CloudHub so they can access on-premises database systems. These systems store sensitive and hence tightly protected data, so are not accessible over the internet.

What network architecture supports this requirement?

- A. An Anypoint VPC connected to the on-premises network using an IPsec tunnel or AWS DirectConnect, plus matching firewall rules in the VPC and on-premises network

- B. Static IP addresses for the Mule applications deployed to the CloudHub Shared Worker Cloud, plus matching firewall rules and IP whitelisting in the on-premises network

- C. An Anypoint VPC with one Dedicated Load Balancer fronting each on-premises database system, plus matching IP whitelisting in the load balancer and firewall rules in the VPC and on-premises network

- D. Relocation of the database systems to a DMZ in the on-premises network, with Mule applications deployed to the CloudHub Shared Worker Cloud connecting only to the DMZ

Answer:

A

Explanation:

The correct answer is an Anypoint VPC connected to the on-premises network using an IPsec tunnel or AWS DirectConnect, plus matching firewall rules in the VPC and on-premises network.

You can use an IPsec tunnel with network-to-network configuration to connect your on-premises data centers to your Anypoint VPC. An IPsec VPN tunnel is generally the recommended solution for VPC to on-premises connectivity, as it provides a standardized, secure way to connect. This method also integrates well with existing IT infrastructure such as routers and appliances.

The other options are ruled out for the following reasons:

* "Relocation of the database systems to a DMZ in the on-premises network, with Mule applications deployed to the CloudHub Shared Worker Cloud connecting only to the DMZ" is not a feasible option, because the DMZ would have to be reachable over the internet from the Shared Worker Cloud.

* "Static IP addresses for the Mule applications deployed to the CloudHub Shared Worker Cloud, plus matching firewall rules and IP whitelisting in the on-premises network" is a risk for sensitive data. Even with the static IPs whitelisted on-premises, the applications could reach the databases only over the internet, which the requirement rules out, so this is also not a feasible option.

* "An Anypoint VPC with one Dedicated Load Balancer fronting each on-premises database system, plus matching IP whitelisting in the load balancer and firewall rules in the VPC and on-premises network" makes no sense. A Dedicated Load Balancer routes inbound traffic to Mule applications in the VPC and does not connect the VPC to on-premises systems, and adding one load balancer per backend system would be far too much work.

Reference:

https://docs.mulesoft.com/runtime-manager/vpc-connectivity-methods-concept

Question 2

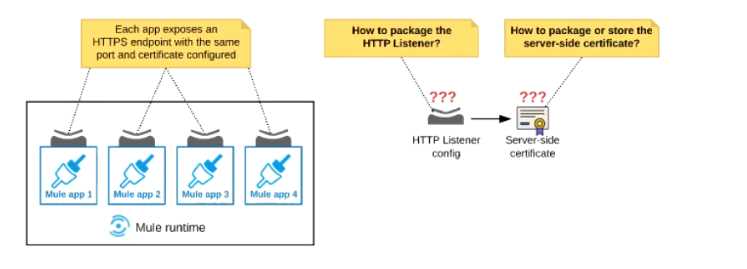

Refer to the exhibit.

An organization deploys multiple Mule applications to the same customer-hosted Mule runtime. Many of these Mule applications must expose an HTTPS endpoint on the same port using a server-side certificate that rotates often.

What is the most effective way to package the HTTP Listener and package or store the server-side certificate when deploying these Mule applications, so the disruption caused by certificate rotation is minimized?

- A. Package the HTTPS Listener configuration in a Mule DOMAIN project, referencing it from all Mule applications that need to expose an HTTPS endpoint. Package the server-side certificate in ALL Mule APPLICATIONS that need to expose an HTTPS endpoint

- B. Package the HTTPS Listener configuration in a Mule DOMAIN project, referencing it from all Mule applications that need to expose an HTTPS endpoint. Store the server-side certificate in a shared filesystem location in the Mule runtime's classpath, OUTSIDE the Mule DOMAIN or any Mule APPLICATION

- C. Package an HTTPS Listener configuration in all Mule APPLICATIONS that need to expose an HTTPS endpoint. Package the server-side certificate in a NEW Mule DOMAIN project

- D. Package the HTTPS Listener configuration in a Mule DOMAIN project, referencing it from all Mule applications that need to expose an HTTPS endpoint. Package the server-side certificate in the SAME Mule DOMAIN project

Answer:

B

Explanation:

In this scenario, both B and D will work, but B is better because rotating the certificate does not require repackaging the domain project at all.

The correct answer is: Package the HTTPS Listener configuration in a Mule DOMAIN project, referencing it from all Mule applications that need to expose an HTTPS endpoint. Store the server-side certificate in a shared filesystem location in the Mule runtime's classpath, OUTSIDE the Mule DOMAIN or any Mule APPLICATION.

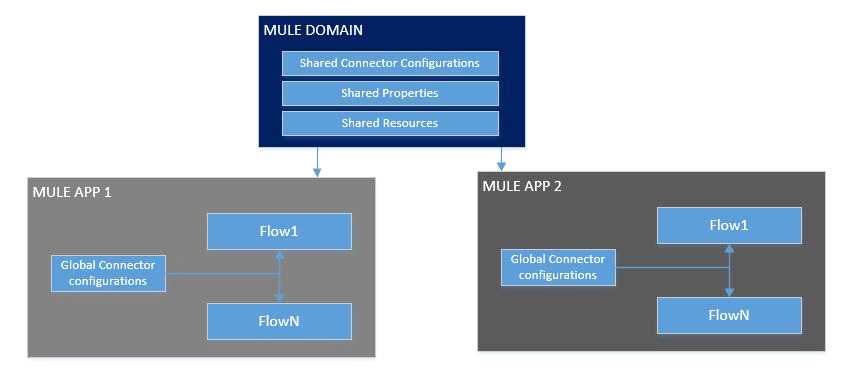

What is a Mule Domain Project?

* A Mule Domain Project is used to configure resources that are shared among different projects. These resources can be used by all the projects associated with the domain. A Mule application can be associated with only one domain, but a domain can be associated with multiple projects. Shared resources allow multiple development teams to work in parallel using the same set of reusable connectors. Defining these connectors as shared resources at the domain level allows the team to:
  - Expose multiple services within the domain through the same port.
  - Share the connection to persistent storage.
  - Share services between apps through a well-defined interface.
  - Ensure consistency between apps upon any changes, because the configuration is only set in one place.

* Use a domain project to share the same host and port among multiple projects. You can declare the HTTP connector within a domain project and associate the domain project with other projects. Doing this also lets you control thread settings, keystore configurations, and timeouts for all the requests made within multiple applications. You could achieve the same by duplicating the HTTP connector configuration across all the applications, but then any change means updating and redeploying every application.

* If you define the connector configuration in the domain and let all the applications use the new domain instead of the default domain, you maintain only one copy of the HTTP connector configuration. Any change requires only the domain to be redeployed instead of all the applications.

You can start using domains in three steps:

1) Create a Mule Domain project

2) Create the global connector configurations that need to be shared across the applications inside the Mule Domain project

3) Modify the value of domain in the mule-deploy.properties file of the applications

To use a certificate defined in an already deployed Mule domain, configure the certificate in the domain so that the API proxy HTTPS Listener references it, and then deploy the secure API proxy to the target Runtime Fabric or on-premises target. (CloudHub is not supported with this approach because it does not support Mule domains.)

Question 3

An API client is implemented as a Mule application that includes an HTTP Request operation using a default configuration. The HTTP Request operation invokes an external API that follows standard HTTP status code conventions, which causes the HTTP Request operation to return a 4xx status code.

What is a possible cause of this status code response?

- A. An error occurred inside the external API implementation when processing the HTTP request that was received from the outbound HTTP Request operation of the Mule application

- B. The external API reported that the API implementation has moved to a different external endpoint

- C. The HTTP response cannot be interpreted by the HTTP Request operation of the Mule application after it was received from the external API

- D. The external API reported an error with the HTTP request that was received from the outbound HTTP Request operation of the Mule application

Answer:

D

Explanation:

The correct choice is: "The external API reported an error with the HTTP request that was received from the outbound HTTP Request operation of the Mule application".

Understanding HTTP 4XX client error response codes: a 4XX error arises when there is a problem with the user's request, and not with the server. Such cases usually arise when a user's access to a webpage is restricted, the user misspells the URL, or a webpage is nonexistent or removed from the public's view.

In short, it is an error that occurs because of a mismatch between what a user is trying to access and its availability to the user, either because the user does not have the right to access it or because what the user is trying to access simply does not exist. Some examples of 4XX errors are:

* 400 Bad Request: The server could not understand the request due to invalid syntax.

* 401 Unauthorized: Although the HTTP standard specifies "unauthorized", semantically this response means "unauthenticated". That is, the client must authenticate itself to get the requested response.

* 403 Forbidden: The client does not have access rights to the content; that is, it is unauthorized, so the server is refusing to give the requested resource. Unlike 401, the client's identity is known to the server.

* 404 Not Found: The server cannot find the requested resource. In the browser, this means the URL is not recognized. In an API, this can also mean that the endpoint is valid but the resource itself does not exist. Servers may also send this response instead of 403 to hide the existence of a resource from an unauthorized client. This response code is probably the most famous one due to its frequent occurrence on the web.

* 405 Method Not Allowed: The request method is known by the server but has been disabled and cannot be used. For example, an API may forbid DELETE-ing a resource. The two mandatory methods, GET and HEAD, must never be disabled and should not return this error code.

* 406 Not Acceptable: This response is sent when the web server, after performing server-driven content negotiation, doesn't find any content that conforms to the criteria given by the user agent.

"The external API reported that the API implementation has moved to a different external endpoint" cannot be the correct answer, because in that situation the response would be 301 Moved Permanently: the URL of the requested resource has been changed permanently, and the new URL is given in the response.

In layman's terms, the scenario would be:

API client > MuleSoft API - HTTP request "Hey, API, process this" > External API

API client < MuleSoft API - HTTP response "I'm sorry, client, something is wrong with that request" < (4XX) External API
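The status code classes discussed above can be sketched in a few lines of Python. This is a minimal illustration, not Mule code; the function name `classify_status` is hypothetical, and the class names follow the standard HTTP conventions (1xx to 5xx).

```python
def classify_status(code: int) -> str:
    """Return the standard HTTP status class for a three-digit status code."""
    classes = {
        1: "informational",
        2: "success",
        3: "redirection",   # e.g. 301 Moved Permanently
        4: "client error",  # the server reports a problem with the request
        5: "server error",  # the server failed while processing a valid request
    }
    if not 100 <= code <= 599:
        raise ValueError(f"not a valid HTTP status code: {code}")
    return classes[code // 100]


if __name__ == "__main__":
    for status in (301, 404, 500):
        print(status, classify_status(status))
```

Read against the answer options, a 4xx maps to "client error" (option D), a moved endpoint would be a 3xx, and a failure inside the external API implementation would be a 5xx.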

Question 4

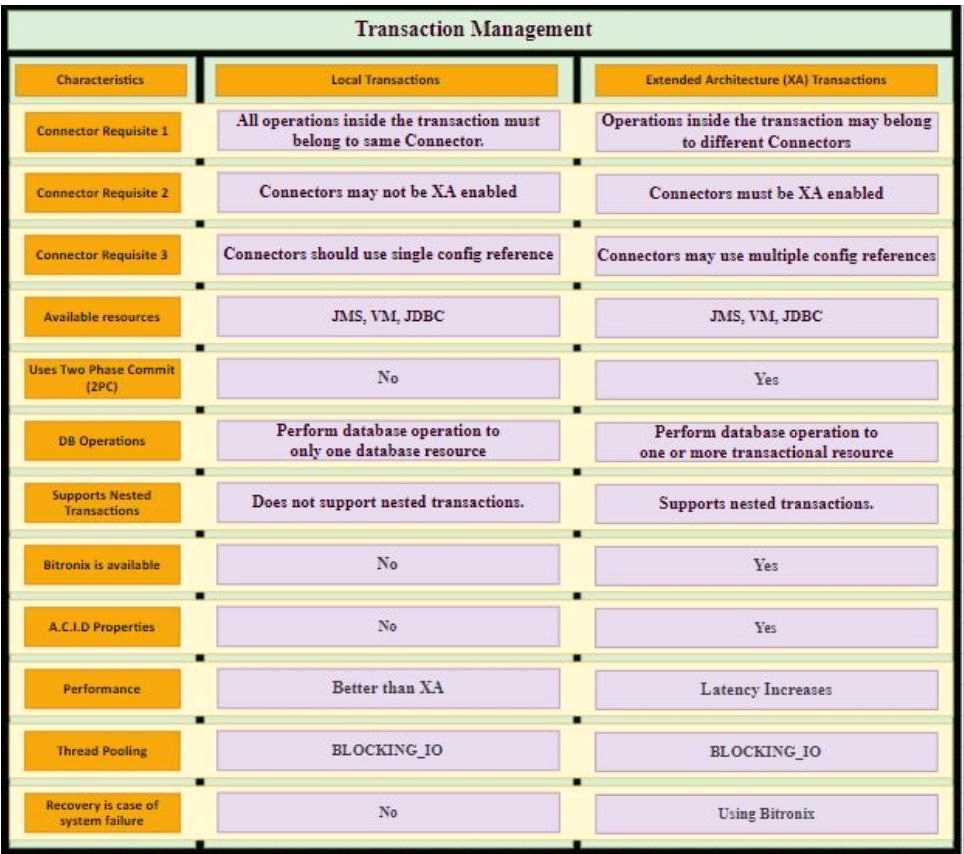

An XA transaction is being configured that involves a JMS connector listening for incoming JMS messages. What is the meaning of the timeout attribute of the XA transaction, and what happens after the timeout expires?

- A. The time that is allowed to pass between committing the transaction and the completion of the Mule flow. After the timeout, flow processing triggers an error

- B. The time that is allowed to pass between receiving JMS messages on the same JMS connection. After the timeout, a new JMS connection is established

- C. The time that is allowed to pass without the transaction being ended explicitly. After the timeout, the transaction is forcefully rolled back

- D. The time that is allowed to pass for stale JMS consumer threads to be destroyed. After the timeout, a new JMS consumer thread is created

Answer:

C

Explanation:

* Setting a transaction timeout for the Bitronix transaction manager

Set the transaction timeout either:

- In wrapper.conf
- In CloudHub, in the Properties tab of the Mule application deployment

The default is 60 seconds. To change it, for example to 120 seconds, define:

mule.bitronix.transactiontimeout = 120

* This property defines the timeout for each transaction created for this manager. If the transaction has not terminated before the timeout expires, it is automatically rolled back.

Additional info on transaction management:

* Bitronix is available as the XA transaction manager for Mule applications.

* To use Bitronix, declare it as a global configuration element in the Mule application:

<bti:transaction-manager />

* Each Mule runtime can have only one instance of a Bitronix transaction manager, which is shared by all Mule applications.

* For customer-hosted deployments, define the XA transaction manager in a Mule domain, then share this global element among all Mule applications in the Mule runtime.
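The timeout semantics described above (option C) can be illustrated with a toy model in Python. This is a sketch under stated assumptions, not how Bitronix is implemented: the class names `FakeClock` and `XaTransactionSketch` are hypothetical, and the timeout is checked lazily at commit time, whereas a real transaction manager rolls back on its own once the timeout expires.

```python
class FakeClock:
    """Manually advanced clock so the example is deterministic."""

    def __init__(self):
        self.now = 0.0

    def __call__(self):
        return self.now

    def advance(self, seconds):
        self.now += seconds


class XaTransactionSketch:
    """Toy transaction that is rolled back if it is not ended within its timeout."""

    def __init__(self, timeout_seconds, clock):
        self.timeout_seconds = timeout_seconds
        self.clock = clock
        self.started_at = clock()
        self.state = "ACTIVE"

    def _expire_if_needed(self):
        # The timeout counts from the start of the transaction until it is ended.
        elapsed = self.clock() - self.started_at
        if self.state == "ACTIVE" and elapsed > self.timeout_seconds:
            self.state = "ROLLED_BACK"

    def commit(self):
        """Try to commit; return False if the transaction already timed out."""
        self._expire_if_needed()
        if self.state != "ACTIVE":
            return False
        self.state = "COMMITTED"
        return True


if __name__ == "__main__":
    clock = FakeClock()
    tx = XaTransactionSketch(timeout_seconds=60, clock=clock)
    clock.advance(61)  # the flow takes longer than the timeout
    print(tx.commit(), tx.state)
```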

Question 5

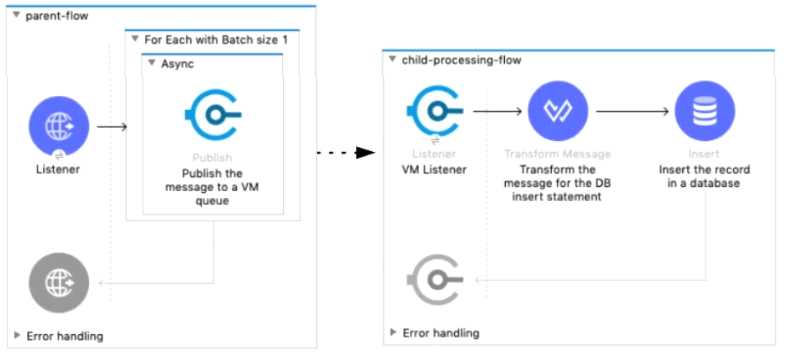

Refer to the exhibit.

A Mule 4 application has a parent flow that breaks up a JSON array payload into 200 separate items, then sends each item one at a time inside an Async scope to a VM queue.

A second flow to process orders has a VM Listener on the same VM queue. The rest of this flow processes each received item by writing the item to a database.

This Mule application is deployed to four CloudHub workers with persistent queues enabled.

What message processing guarantees are provided by the VM queue and the CloudHub workers, and how are VM messages routed among the CloudHub workers for each invocation of the parent flow under normal operating conditions where all the CloudHub workers remain online?

- A. EACH item VM message is processed AT MOST ONCE by ONE CloudHub worker, with workers chosen in a deterministic round-robin fashion. Each of the four CloudHub workers can be expected to process 1/4 of the item VM messages (about 50 items)

- B. EACH item VM message is processed AT LEAST ONCE by ONE ARBITRARY CloudHub worker. Each of the four CloudHub workers can be expected to process some item VM messages

- C. ALL item VM messages are processed AT LEAST ONCE by the SAME CloudHub worker where the parent flow was invoked. This one CloudHub worker processes ALL 200 item VM messages

- D. ALL item VM messages are processed AT MOST ONCE by ONE ARBITRARY CloudHub worker. This one CloudHub worker processes ALL 200 item VM messages

Answer:

B

Explanation:

The correct answer is: EACH item VM message is processed AT LEAST ONCE by ONE ARBITRARY CloudHub worker. Each of the four CloudHub workers can be expected to process some item VM messages.

* In CloudHub, each persistent VM queue is listened on by every CloudHub worker.

* Each message is read and processed at least once by only one CloudHub worker, and duplicate processing is possible.

* If a CloudHub worker fails, the message can be read by another worker to prevent loss of messages, and this can lead to duplicate processing.

* By default, every CloudHub worker's VM Listener receives different messages from the VM queue.

Reference:

https://dzone.com/articles/deploying-mulesoft-application-on-1-worker-vs-mult
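The at-least-once behavior described above can be simulated with a short Python sketch. It is a simplified model, not CloudHub's actual queue implementation: the function name `deliver_at_least_once`, the worker names, and the `failure_rate` parameter are all hypothetical, and a "failure" here means a worker processed the item but stopped before acknowledging it.

```python
import random
from collections import Counter


def deliver_at_least_once(items, workers, failure_rate, rng):
    """Deliver each item to one arbitrary worker, redelivering until it is acknowledged."""
    processed = Counter()   # how many times each item was processed
    handled_by = Counter()  # how many processing attempts each worker made
    for item in items:
        acknowledged = False
        while not acknowledged:
            worker = rng.choice(workers)  # an arbitrary worker picks up the message
            processed[item] += 1
            handled_by[worker] += 1
            # The worker may fail after processing but before acknowledging,
            # in which case the message is redelivered (possible duplicate).
            acknowledged = rng.random() >= failure_rate
    return processed, handled_by


if __name__ == "__main__":
    rng = random.Random(7)
    processed, handled_by = deliver_at_least_once(
        range(200), ["worker-1", "worker-2", "worker-3", "worker-4"], 0.05, rng
    )
    print("items processed more than once:", sum(1 for c in processed.values() if c > 1))
    print("attempts per worker:", dict(handled_by))
```

Every item ends up processed at least once, some possibly twice, and the work is spread across workers without a fixed round-robin split, which is why option B fits and options A, C, and D do not.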

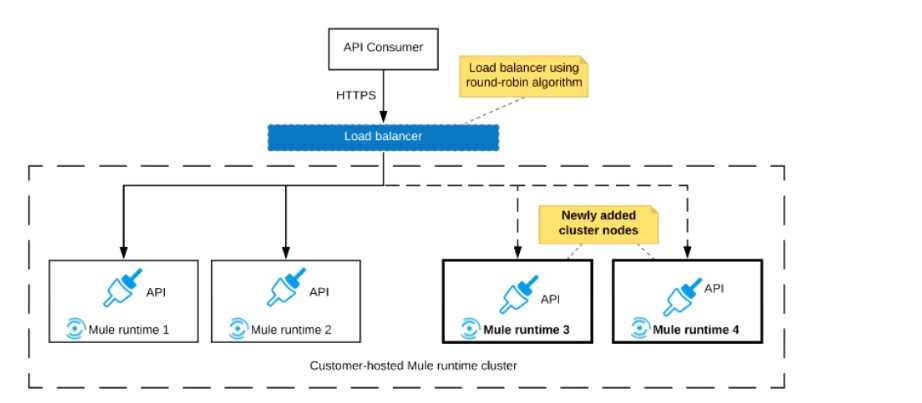

Question 6

Refer to the exhibit.

An organization uses a 2-node Mule runtime cluster to host one stateless API implementation. The API is accessed over HTTPS through a load balancer that uses round-robin for load distribution.

Two additional nodes have been added to the cluster and the load balancer has been configured to recognize the new nodes with no other change to the load balancer.

What average performance change is guaranteed to happen, assuming all cluster nodes are fully operational?

- A. 50% reduction in the response time of the API

- B. 100% increase in the throughput of the API

- C. 50% reduction in the JVM heap memory consumed by each node

- D. 50% reduction in the number of requests being received by each node

Answer:

D
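The arithmetic behind option D can be sketched as follows. This is a minimal Python illustration, assuming an idealized round-robin balancer and a fixed total number of requests; the function name `round_robin_counts` and the node names are hypothetical.

```python
from collections import Counter
from itertools import cycle


def round_robin_counts(total_requests, nodes):
    """Count how many requests each node receives under strict round-robin."""
    targets = cycle(nodes)
    return Counter(next(targets) for _ in range(total_requests))


if __name__ == "__main__":
    before = round_robin_counts(1000, ["node-1", "node-2"])
    after = round_robin_counts(1000, ["node-1", "node-2", "node-3", "node-4"])
    print("2 nodes:", dict(before))
    print("4 nodes:", dict(after))
```

Going from two to four nodes halves the requests each node receives. Response time, throughput, and heap usage depend on the workload and on where the bottleneck sits, so none of those changes is guaranteed.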

Question 7

An integration Mule application is deployed to a customer-hosted multi-node Mule 4 runtime cluster. The Mule application uses a Listener operation of a JMS connector to receive incoming messages from a JMS queue.

How are the messages consumed by the Mule application?

- A. Depending on the JMS provider's configuration, either all messages are consumed by ONLY the primary cluster node or else ALL messages are consumed by ALL cluster nodes

- B. Regardless of the Listener operation configuration, all messages are consumed by ALL cluster nodes

- C. Depending on the Listener operation configuration, either all messages are consumed by ONLY the primary cluster node or else EACH message is consumed by ANY ONE cluster node

- D. Regardless of the Listener operation configuration, all messages are consumed by ONLY the primary cluster node

Answer:

C

Explanation:

The correct answer is: Depending on the Listener operation configuration, either all messages are consumed by ONLY the primary cluster node or else EACH message is consumed by ANY ONE cluster node.

For applications running in clusters, you have to keep in mind the concept of the primary node and how the connector will behave. When running in a cluster, the JMS listener's default behavior is to receive messages only in the primary node, no matter what kind of destination you are consuming from. When consuming messages from a queue, you'll want to change this configuration to receive messages in all the nodes of the cluster, not just the primary.

This can be done with the primaryNodeOnly parameter:

<jms:listener config-ref="config" destination="${inputQueue}" primaryNodeOnly="false"/>

Question 8

An integration Mule application is being designed to synchronize customer data between two systems. One system is an IBM Mainframe and the other system is a Salesforce Marketing Cloud (CRM) instance. Both systems have been deployed in their typical configurations, and are to be invoked using the native protocols provided by Salesforce and IBM.

What interface technologies are the most straightforward and appropriate to use in this Mule application to interact with these systems, assuming that Anypoint Connectors exist that implement these interface technologies?

- A. IBM: DB access CRM: gRPC

- B. IBM: REST CRM: REST

- C. IBM: ActiveMQ CRM: REST

- D. IBM: CICS CRM: SOAP

Answer:

D

Explanation:

The correct answer is IBM: CICS, CRM: SOAP.

* Within Anypoint Exchange, MuleSoft offers the IBM CICS connector. Anypoint Connector for IBM CICS Transaction Gateway (IBM CTG Connector) provides integration with back-end CICS apps using the CICS Transaction Gateway.

* Anypoint Connector for Salesforce Marketing Cloud (Marketing Cloud Connector) enables you to connect to the Marketing Cloud API web services. This connector exposes convenient operations via SOAP for using the capabilities of Salesforce Marketing Cloud.

Question 9

What is required before an API implemented using the components of Anypoint Platform can be managed and governed (by applying API policies) on Anypoint Platform?

- A. The API must be published to Anypoint Exchange and a corresponding API instance ID must be obtained from API Manager to be used in the API implementation

- B. The API implementation source code must be committed to a source control management system (such as GitHub)

- C. A RAML definition of the API must be created in API designer so it can then be published to Anypoint Exchange

- D. The API must be shared with the potential developers through an API portal so API consumers can interact with the API

Answer:

A

Explanation:

The question is about managing and governing APIs implemented with the components of Anypoint Platform.

Anypoint API Manager (API Manager) is a component of Anypoint Platform that enables you to manage, govern, and secure APIs. It leverages the runtime capabilities of API Gateway and Anypoint Service Mesh, both of which enforce policies, collect and track analytics data, manage proxies, provide encryption and authentication, and manage applications.

A prerequisite of managing an API is that the API must be published to Anypoint Exchange. The API implementation is then linked to its API instance in API Manager through the API instance ID, as described in the API Autodiscovery reference listed here. Hence the correct option is A.

Mule reference documentation:

https://docs.mulesoft.com/api-manager/2.x/getting-started-proxy

Reference:

https://docs.mulesoft.com/api-manager/2.x/api-auto-discovery-new-concept

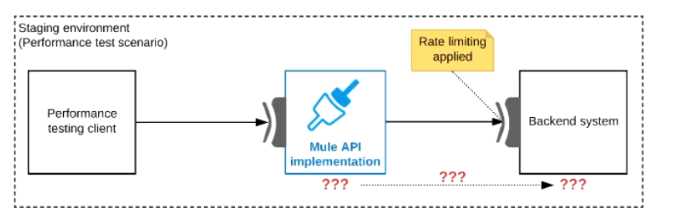

Question 10

Refer to the exhibit.

One of the backend systems invoked by an API implementation enforces rate limits on the number of requests a particular client can make. Both the backend system and the API implementation are deployed to several non-production environments in addition to production.

Rate limiting of the backend system applies to all non-production environments. The production environment, however, does NOT have any rate limiting.

What is the most effective approach to conduct performance tests of the API implementation in a staging (non-production) environment?

- A. Create a mocking service that replicates the backend system's production performance characteristics. Then configure the API implementation to use the mocking service and conduct the performance tests

- B. Use MUnit to simulate standard responses from the backend system, then conduct performance tests to identify other bottlenecks in the system

- C. Include logic within the API implementation that bypasses invocations of the backend system in a performance test situation, instead invoking local stubs that replicate typical backend system responses. Then conduct performance tests using this API implementation

- D. Conduct scaled-down performance tests in the staging environment against the rate-limited backend system, then upscale performance results to full production scale

Answer:

A

Explanation:

The correct answer is: Create a mocking service that replicates the backend system's production performance characteristics. Then configure the API implementation to use the mocking service and conduct the performance tests.

* MUnit is only for unit and integration testing of APIs and Mule apps. It is not for performance testing, even though it can mock the backend.

* Bypassing the backend invocation defeats the whole purpose of performance testing. Hence it is not a valid answer.

* Scaled-down performance tests can't be relied upon, as the performance of APIs is not linear against load.

Question 11

An API has been unit tested and is ready for integration testing. The API is governed by a Client ID Enforcement policy in all environments.

What must the testing team do before they can start integration testing the API in the Staging environment?

- A. They must access the API portal and create an API notebook using the Client ID and Client Secret supplied by the API portal in the Staging environment

- B. They must request access to the API instance in the Staging environment and obtain a Client ID and Client Secret to be used for testing the API

- C. They must be assigned as an API version owner of the API in the Staging environment

- D. They must request access to the Staging environment and obtain the Client ID and Client Secret for that environment to be used for testing the API

Answer:

B

Explanation:

* The API is governed by a Client ID Enforcement policy in all environments.

* The Client ID Enforcement policy allows only authorized applications to access the deployed API implementation.

* Each authorized application is configured with credentials: client_id and client_secret.

* At runtime, authorized applications provide the credentials with each request to the API implementation.

* The testing team must therefore request access to the API instance in the Staging environment to obtain a Client ID and Client Secret for their test client.

MuleSoft reference:

https://docs.mulesoft.com/api-manager/2.x/policy-mule3-client-id-based-policies
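As an illustration of "provide the credentials with each request", a test client might attach them as shown in this Python sketch. It assumes the policy reads the credentials from HTTP headers named `client_id` and `client_secret`; the actual location depends on how the policy is configured. The URL, the credential values, and both function names are hypothetical, and the request is only built, not sent.

```python
import urllib.request


def client_credentials_headers(client_id, client_secret):
    """Build the credential headers a test client sends with every request."""
    if not client_id or not client_secret:
        raise ValueError("both client_id and client_secret are required")
    return {"client_id": client_id, "client_secret": client_secret}


def build_test_request(url, client_id, client_secret):
    """Prepare (but do not send) a GET request carrying the client credentials."""
    headers = client_credentials_headers(client_id, client_secret)
    return urllib.request.Request(url, headers=headers, method="GET")


if __name__ == "__main__":
    # Hypothetical Staging endpoint and credentials obtained for the test client.
    request = build_test_request(
        "https://staging.example.com/api/orders", "my-client-id", "my-client-secret"
    )
    print(request.get_method(), request.full_url)
```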

Question 12

What requires configuration of both a key store and a trust store for an HTTP Listener?

- A. Support for TLS mutual (two-way) authentication with HTTP clients

- B. Encryption of requests to both subdomains and API resource endpoints (https://api.customer.com/ and https://customer.com/api)

- C. Encryption of both HTTP request and HTTP response bodies for all HTTP clients

- D. Encryption of both HTTP request header and HTTP request body for all HTTP clients

Answer:

A
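To illustrate why mutual TLS needs both stores, here is a sketch using Python's standard `ssl` module rather than Mule configuration. The function name and the file parameters are hypothetical. The key store role is played by the server's own certificate and private key, and the trust store role by the CA certificates used to validate client certificates.

```python
import ssl


def mutual_tls_server_context(cert_file=None, key_file=None, ca_file=None):
    """Build a server-side TLS context that requires a client certificate."""
    context = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    if cert_file:
        # Key store role: the server's own certificate and private key,
        # presented to clients during the handshake.
        context.load_cert_chain(certfile=cert_file, keyfile=key_file)
    if ca_file:
        # Trust store role: the CA certificates used to verify client certificates.
        context.load_verify_locations(cafile=ca_file)
    # Two-way TLS: clients that present no valid certificate are rejected.
    context.verify_mode = ssl.CERT_REQUIRED
    return context


if __name__ == "__main__":
    context = mutual_tls_server_context()  # no files loaded in this demo
    print(context.verify_mode == ssl.CERT_REQUIRED)
```

One-way TLS, which is enough to encrypt requests and responses as in options B, C, and D, needs only the server's key store. The trust store becomes necessary once the server must also authenticate the client.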

Question 13

A retailer is designing a data exchange interface to be used by its suppliers. The interface must support secure communication over the public internet. The interface must also work with a wide variety of programming languages and IT systems used by suppliers.

What are suitable interface technologies for this data exchange that are secure, cross-platform, and internet friendly, assuming that Anypoint Connectors exist for these interface technologies?

- A. EDIFACT XML over SFTP JSON/REST over HTTPS

- B. SOAP over HTTPS IIOP over TLS gRPC over HTTPS

- C. XML over ActiveMQ XML over SFTP XML/REST over HTTPS

- D. CSV over FTP YAML over TLS JSON over HTTPS

Answer:

C

Explanation:

* As per MuleSoft's definition, an API is an Application Programming Interface using HTTP-based protocols. Non-HTTP-based programmatic interfaces are not APIs.

* HTTP-based programmatic interfaces are APIs even if they don't use REST or JSON. Hence implementations based on Java RMI, CORBA/IIOP, or raw TCP/IP interfaces are not APIs, as they do not use HTTP.

* FTP was not built to be secure. It is generally considered to be an insecure protocol because it relies on clear-text usernames and passwords for authentication and does not use encryption.

* Data sent via FTP is vulnerable to sniffing, spoofing, and brute force attacks, among other basic attack methods.

Considering the above points, the only correct option is:

- XML over ActiveMQ

- XML over SFTP

- XML/REST over HTTPS

Question 14

An organization currently uses a multi-node Mule runtime deployment model within their datacenter, so each Mule runtime hosts several Mule applications. The organization is planning to transition to a deployment model based on Docker containers in a Kubernetes cluster. The organization has already created a standard Docker image containing a Mule runtime and all required dependencies (including a JVM), but excluding the Mule application itself.

What is an expected outcome of this transition to container-based Mule application deployments?

- A. Required redesign of Mule applications to follow microservice architecture principles

- B. Required migration to the Docker and Kubernetes-based Anypoint Platform - Private Cloud Edition

- C. Required change to the URL endpoints used by clients to send requests to the Mule applications

- D. Guaranteed consistency of execution environments across all deployments of a Mule application

Answer:

A

Explanation:

* The organization can continue using its existing load balancer even if the backend applications change, so the URL endpoints used by clients do not have to change. Option C is ruled out.

* The Mule runtimes stay within the organization's datacenter and are packaged in the organization's own Docker image, so the transition does not require Anypoint Platform - Private Cloud Edition (PCE). Option B is ruled out.

* In the current deployment model each Mule runtime hosts several Mule applications, which is a customer-hosted (hybrid) model rather than Runtime Fabric (RTF). Applications hosted together in one runtime typically share a domain project, which needs to be redesigned to fit a microservice architecture.

Correct answer: Required redesign of Mule applications to follow microservice architecture principles

Question 15

A team would like to create a project skeleton that developers can use as a starting point when creating API implementations with Anypoint Studio. This skeleton should help drive consistent use of best practices within the team.

What type of Anypoint Exchange artifact(s) should be added to Anypoint Exchange to publish the project skeleton?

- A. A custom asset with the default API implementation

- B. A RAML archetype and reusable trait definitions to be reused across API implementations

- C. An example of an API implementation following best practices

- D. A Mule application template with the key components and minimal integration logic

Answer:

D