microsoft DP-203 Exam Questions

Questions for the DP-203 were updated on : Jul 05 ,2025

Page 1 out of 15. Viewing questions 1-15 out of 212

Question 1 Topic 1, Case Study 1Case Study Question View Case

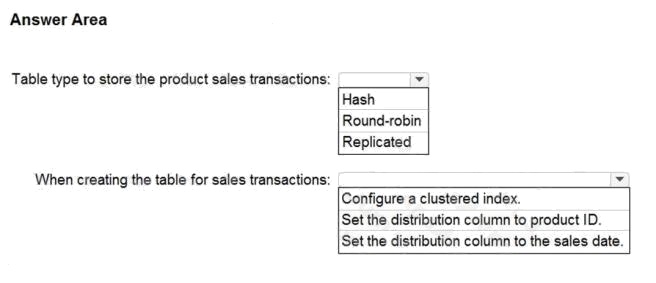

HOTSPOT

You need to design a data storage structure for the product sales transactions. The solution must meet the sales transaction

dataset requirements.

What should you include in the solution? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:

Answer:

Explanation:

Box 1: Hash Scenario:

Ensure that queries joining and filtering sales transaction records based on product ID complete as quickly as possible.

A hash distributed table can deliver the highest query performance for joins and aggregations on large tables.

Box 2: Set the distribution column to the sales date.

Scenario: Partition data that contains sales transaction records. Partitions must be designed to provide efficient loads by

month. Boundary values must belong to the partition on the right.

Reference: https://rajanieshkaushikk.com/2020/09/09/how-to-choose-right-data-distribution-strategy-for-azure-synapse/

Question 2 Topic 1, Case Study 1Case Study Question View Case

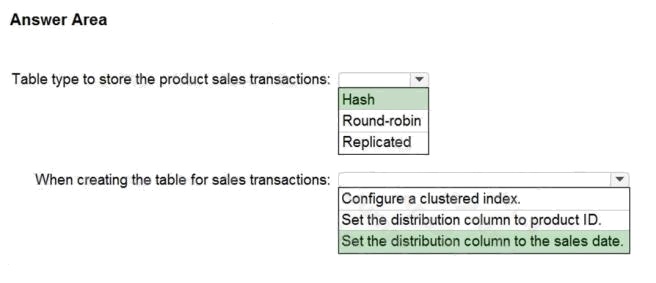

DRAG DROP

You need to ensure that the Twitter feed data can be analyzed in the dedicated SQL pool. The solution must meet the

customer sentiment analytic requirements.

Which three Transact-SQL DDL commands should you run in sequence? To answer, move the appropriate commands from

the list of commands to the answer area and arrange them in the correct order.

NOTE: More than one order of answer choices is correct. You will receive credit for any of the correct orders you select.

Select and Place:

Answer:

Explanation:

Scenario: Allow Contoso users to use PolyBase in an Azure Synapse Analytics dedicated SQL pool to query the content of

the data records that host the Twitter feeds. Data must be protected by using row-level security (RLS). The users must be

authenticated by using their own Azure AD credentials.

Box 1: CREATE EXTERNAL DATA SOURCE External data sources are used to connect to storage accounts.

Box 2: CREATE EXTERNAL FILE FORMAT

CREATE EXTERNAL FILE FORMAT creates an external file format object that defines external data stored in Azure Blob

Storage or Azure Data Lake Storage. Creating an external file format is a prerequisite for creating an external table.

Box 3: CREATE EXTERNAL TABLE AS SELECT

When used in conjunction with the CREATE TABLE AS SELECT statement, selecting from an external table imports data

into a table within the SQL pool. In addition to the COPY statement, external tables are useful for loading data.

Incorrect Answers:

CREATE EXTERNAL TABLE

The CREATE EXTERNAL TABLE command creates an external table for Synapse SQL to access data stored in Azure Blob

Storage or Azure Data Lake Storage.

Reference: https://docs.microsoft.com/en-us/azure/synapse-analytics/sql/develop-tables-external-tables

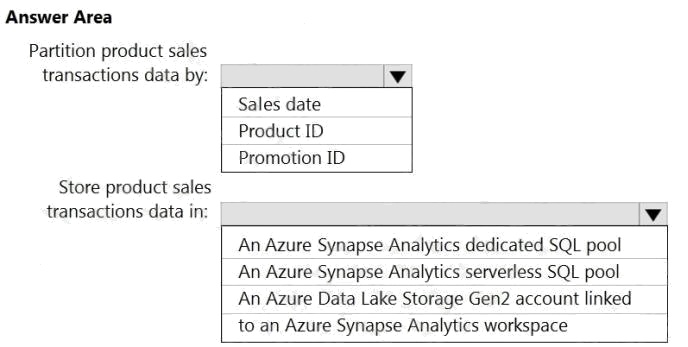

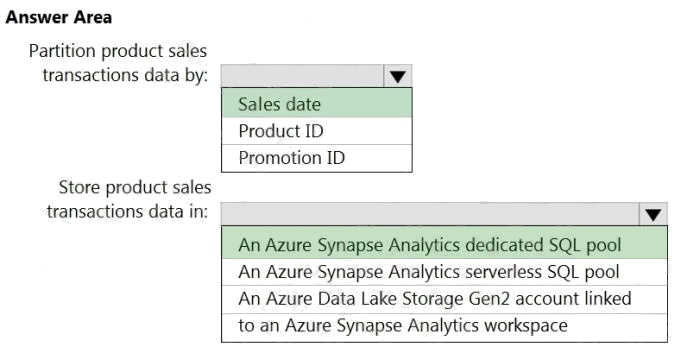

Question 3 Topic 1, Case Study 1Case Study Question View Case

HOTSPOT

You need to design the partitions for the product sales transactions. The solution must meet the sales transaction dataset

requirements.

What should you include in the solution? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:

Answer:

Explanation:

Box 1: Sales date

Scenario: Contoso requirements for data integration include:

Partition data that contains sales transaction records. Partitions must be designed to provide efficient loads by month.

Boundary values must belong to the partition on the right.

Box 2: An Azure Synapse Analytics Dedicated SQL pool

Scenario: Contoso requirements for data integration include:

Ensure that data storage costs and performance are predictable.

The size of a dedicated SQL pool (formerly SQL DW) is determined by Data Warehousing Units (DWU).

Dedicated SQL pool (formerly SQL DW) stores data in relational tables with columnar storage. This format significantly

reduces the data storage costs, and improves query performance.

Synapse analytics dedicated sql pool

Reference: https://docs.microsoft.com/en-us/azure/synapse-analytics/sql-data-warehouse/sql-data-warehouse-overview-

what-is

Question 4 Topic 1, Case Study 1Case Study Question View Case

You need to implement the surrogate key for the retail store table. The solution must meet the sales transaction dataset

requirements.

What should you create?

- A. a table that has an IDENTITY property

- B. a system-versioned temporal table

- C. a user-defined SEQUENCE object

- D. a table that has a FOREIGN KEY constraint

Answer:

A

Explanation:

Scenario: Implement a surrogate key to account for changes to the retail store addresses.

A surrogate key on a table is a column with a unique identifier for each row. The key is not generated from the table data.

Data modelers like to create surrogate keys on their tables when they design data warehouse models. You can use the

IDENTITY property to achieve this goal simply and effectively without affecting load performance.

Reference: https://docs.microsoft.com/en-us/azure/synapse-analytics/sql-data-warehouse/sql-data-warehouse-tables-identity

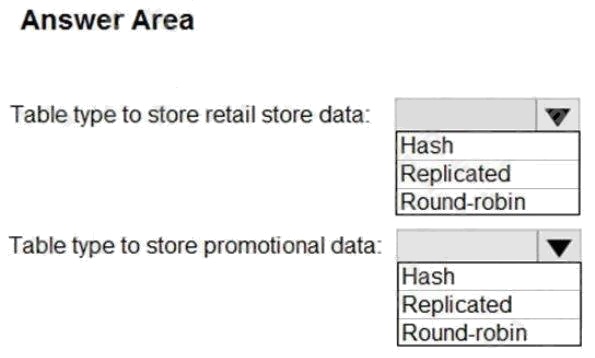

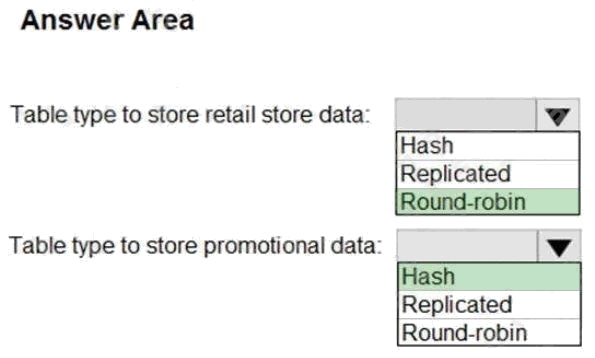

Question 5 Topic 1, Case Study 1Case Study Question View Case

HOTSPOT

You need to design an analytical storage solution for the transactional data. The solution must meet the sales transaction

dataset requirements.

What should you include in the solution? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:

Answer:

Explanation:

Box 1: Round-robin

Round-robin tables are useful for improving loading speed.

Scenario: Partition data that contains sales transaction records. Partitions must be designed to provide efficient loads by

month.

Box 2: Hash

Hash-distributed tables improve query performance on large fact tables.

Scenario:

You plan to create a promotional table that will contain a promotion ID. The promotion ID will be associated to a specific

product. The product will be identified by a product ID. The table will be approximately 5 GB.

Ensure that queries joining and filtering sales transaction records based on product ID complete as quickly as possible.

Reference: https://docs.microsoft.com/en-us/azure/synapse-analytics/sql-data-warehouse/sql-data-warehouse-tables-

distribute

Question 6 Topic 1, Case Study 1Case Study Question View Case

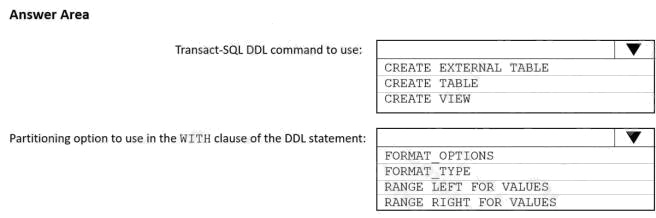

HOTSPOT

You need to implement an Azure Synapse Analytics database object for storing the sales transactions data. The solution

must meet the sales transaction dataset requirements.

What should you do? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:

Answer:

Explanation:

Box 1: Create table

Scenario: Load the sales transaction dataset to Azure Synapse Analytics

Box 2: RANGE RIGHT FOR VALUES

Scenario: Partition data that contains sales transaction records. Partitions must be designed to provide efficient loads by

month. Boundary values must belong to the partition on the right.

RANGE RIGHT: Specifies the boundary value belongs to the partition on the right (higher values). FOR VALUES (

boundary_value [,...n] ): Specifies the boundary values for the partition.

Scenario: Load the sales transaction dataset to Azure Synapse Analytics.

Contoso identifies the following requirements for the sales transaction dataset:

Partition data that contains sales transaction records. Partitions must be designed to provide efficient loads by month.

Boundary values must belong to the partition on the right. Ensure that queries joining and filtering sales transaction

records based on product ID complete as quickly as possible. Implement a surrogate key to account for changes to the

retail store addresses.

Ensure that data storage costs and performance are predictable. Minimize how long it takes to remove old records.

Reference: https://docs.microsoft.com/en-us/sql/t-sql/statements/create-table-azure-sql-data-warehouse

Question 7 Topic 1, Case Study 1Case Study Question View Case

You need to design a data retention solution for the Twitter feed data records. The solution must meet the customer

sentiment analytics requirements.

Which Azure Storage functionality should you include in the solution?

- A. change feed

- B. soft delete

- C. time-based retention

- D. lifecycle management

Answer:

D

Explanation:

Scenario: Purge Twitter feed data records that are older than two years.

Data sets have unique lifecycles. Early in the lifecycle, people access some data often. But the need for access often drops

drastically as the data ages. Some data remains idle in the cloud and is rarely accessed once stored. Some data sets expire

days or months after creation, while other data sets are actively read and modified throughout their lifetimes. Azure Storage

lifecycle management offers a rule-based policy that you can use to transition blob data to the appropriate access tiers or to

expire data at the end of the data lifecycle.

Reference: https://docs.microsoft.com/en-us/azure/storage/blobs/lifecycle-management-overview

Question 8 Topic 2, Case Study 2Case Study Question View Case

What should you recommend to prevent users outside the Litware on-premises network from accessing the analytical data

store?

- A. a server-level virtual network rule

- B. a database-level virtual network rule

- C. a server-level firewall IP rule

- D. a database-level firewall IP rule

Answer:

C

Explanation:

Scenario:

Ensure that the analytical data store is accessible only to the company's on-premises network and Azure services.

Litware does not plan to implement Azure ExpressRoute or a VPN between the on-premises network and Azure.

Since Litware does not plan to implement Azure ExpressRoute or a VPN between the on-premises network and Azure, they

will have to create firewall IP rules to allow connection from the IP ranges of the on-premise network. They can also use the

firewall rule 0.0.0.0 to allow access from Azure services.

Reference: https://docs.microsoft.com/en-us/azure/sql-database/sql-database-vnet-service-endpoint-rule-overview

Question 9 Topic 2, Case Study 2Case Study Question View Case

What should you recommend using to secure sensitive customer contact information?

- A. Transparent Data Encryption (TDE)

- B. row-level security

- C. column-level security

- D. data sensitivity labels

Answer:

D

Explanation:

Scenario: Limit the business analysts access to customer contact information, such as phone numbers, because this type of

data is not analytically relevant.

Labeling: You can apply sensitivity-classification labels persistently to columns by using new metadata attributes that have

been added to the SQL Server database engine. This metadata can then be used for advanced, sensitivity-based auditing

and protection scenarios.

Incorrect Answers:

A: Transparent Data Encryption (TDE) encrypts SQL Server, Azure SQL Database, and Azure Synapse Analytics data files,

known as encrypting data at rest. TDE does not provide encryption across communication channels.

Reference:

https://docs.microsoft.com/en-us/azure/azure-sql/database/data-discovery-and-classification-overview

https://docs.microsoft.com/en-us/azure/sql-database/sql-database-security-overview

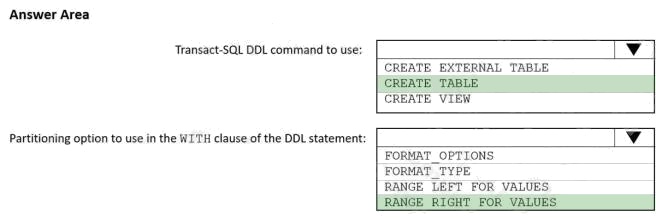

Question 10 Topic 3, Mixed Questions

You have a table in an Azure Synapse Analytics dedicated SQL pool. The table was created by using the following Transact-

SQL statement.

You need to alter the table to meet the following requirements:

Ensure that users can identify the current manager of employees.

Support creating an employee reporting hierarchy for your entire company. Provide fast lookup of the managers

attributes such as name and job title.

Which column should you add to the table?

- A. [ManagerEmployeeID] [smallint] NULL

- B. [ManagerEmployeeKey] [smallint] NULL

- C. [ManagerEmployeeKey] [int] NULL

- D. [ManagerName] [varchar](200) NULL

Answer:

C

Explanation:

We need an extra column to identify the Manager. Use the data type as the EmployeeKey column, an int column.

Reference: https://docs.microsoft.com/en-us/analysis-services/tabular-models/hierarchies-ssas-tabular

Question 11 Topic 3, Mixed Questions

You have an Azure Synapse workspace named MyWorkspace that contains an Apache Spark database named mytestdb.

You run the following command in an Azure Synapse Analytics Spark pool in MyWorkspace.

CREATE TABLE mytestdb.myParquetTable(

EmployeeID int,

EmployeeName string,

EmployeeStartDate date) USING Parquet

You then use Spark to insert a row into mytestdb.myParquetTable. The row contains the following data.

One minute later, you execute the following query from a serverless SQL pool in MyWorkspace.

SELECT EmployeeID

FROM mytestdb.dbo.myParquetTable WHERE EmployeeName = 'Alice';

What will be returned by the query?

- A. 24

- B. an error

- C. a null value

Answer:

A

Explanation:

Once a database has been created by a Spark job, you can create tables in it with Spark that use Parquet as the storage

format. Table names will be converted to lower case and need to be queried using the lower case name. These tables will

immediately become available for querying by any of the Azure Synapse workspace Spark pools. They can also be used

from any of the Spark jobs subject to permissions.

Note: For external tables, since they are synchronized to serverless SQL pool asynchronously, there will be a delay until they

appear.

Reference: https://docs.microsoft.com/en-us/azure/synapse-analytics/metadata/table

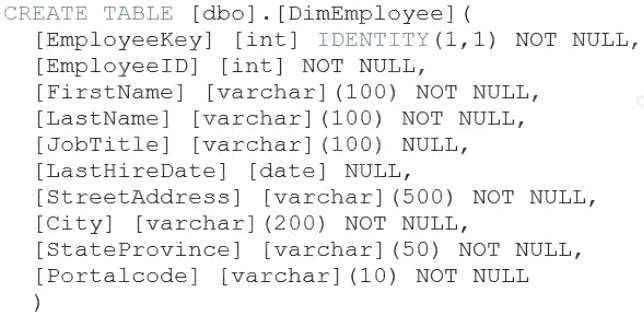

Question 12 Topic 3, Mixed Questions

DRAG DROP

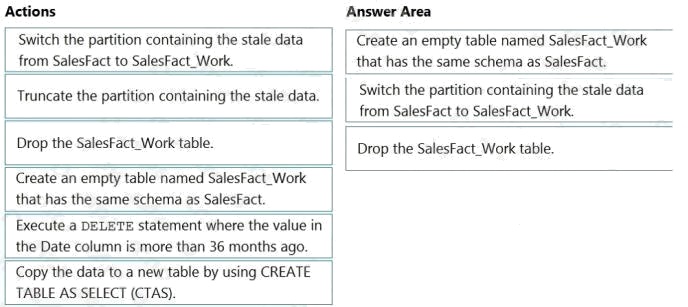

You have a table named SalesFact in an enterprise data warehouse in Azure Synapse Analytics. SalesFact contains sales

data from the past 36 months and has the following characteristics:

Is partitioned by month

Contains one billion rows

Has clustered columnstore indexes

At the beginning of each month, you need to remove data from SalesFact that is older than 36 months as quickly as

possible.

Which three actions should you perform in sequence in a stored procedure? To answer, move the appropriate actions from

the list of actions to the answer area and arrange them in the correct order.

Select and Place:

Answer:

Explanation:

Step 1: Create an empty table named SalesFact_work that has the same schema as SalesFact.

Step 2: Switch the partition containing the stale data from SalesFact to SalesFact_Work.

SQL Data Warehouse supports partition splitting, merging, and switching. To switch partitions between two tables, you must

ensure that the partitions align on their respective boundaries and that the table definitions match.

Loading data into partitions with partition switching is a convenient way stage new data in a table that is not visible to users

the switch in the new data.

Step 3: Drop the SalesFact_Work table.

Reference: https://docs.microsoft.com/en-us/azure/sql-data-warehouse/sql-data-warehouse-tables-partition

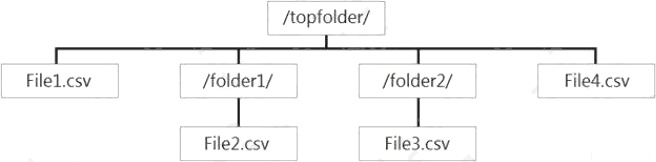

Question 13 Topic 3, Mixed Questions

You have files and folders in Azure Data Lake Storage Gen2 for an Azure Synapse workspace as shown in the following

exhibit.

You create an external table named ExtTable that has LOCATION='/topfolder/'.

When you query ExtTable by using an Azure Synapse Analytics serverless SQL pool, which files are returned?

- A. File2.csv and File3.csv only

- B. File1.csv and File4.csv only

- C. File1.csv, File2.csv, File3.csv, and File4.csv

- D. File1.csv only

Answer:

C

Explanation:

To run a T-SQL query over a set of files within a folder or set of folders while treating them as a single entity or rowset,

provide a path to a folder or a pattern (using wildcards) over a set of files or folders.

Reference: https://docs.microsoft.com/en-us/azure/synapse-analytics/sql/query-data-storage#query-multiple-files-or-folders

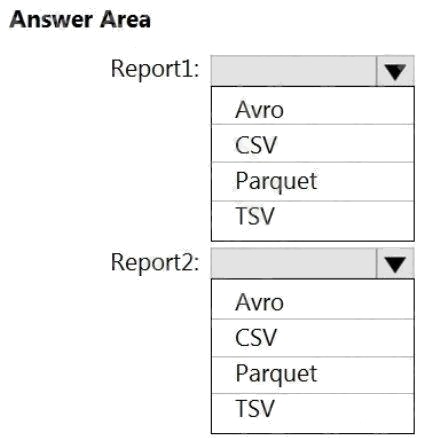

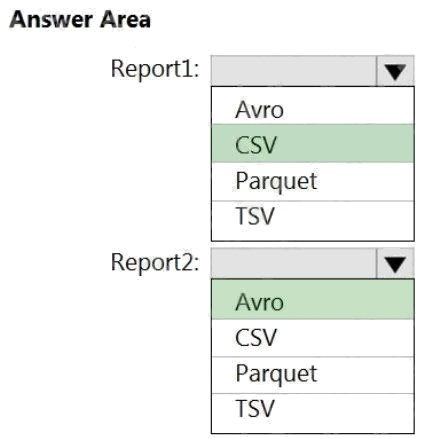

Question 14 Topic 3, Mixed Questions

HOTSPOT

You are planning the deployment of Azure Data Lake Storage Gen2.

You have the following two reports that will access the data lake:

Report1: Reads three columns from a file that contains 50 columns. Report2: Queries a single record based on a

timestamp.

You need to recommend in which format to store the data in the data lake to support the reports. The solution must minimize

read times.

What should you recommend for each report? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:

Answer:

Explanation:

Report1: CSV

CSV: The destination writes records as delimited data.

Report2: AVRO

AVRO supports timestamps.

Not Parquet, TSV: Not options for Azure Data Lake Storage Gen2.

Reference: https://streamsets.com/documentation/datacollector/latest/help/datacollector/UserGuide/Destinations/ADLS-G2-

D.html

Question 15 Topic 3, Mixed Questions

You are designing the folder structure for an Azure Data Lake Storage Gen2 container.

Users will query data by using a variety of services including Azure Databricks and Azure Synapse Analytics serverless SQL

pools. The data will be secured by subject area. Most queries will include data from the current year or current month.

Which folder structure should you recommend to support fast queries and simplified folder security?

- A. /{SubjectArea}/{DataSource}/{DD}/{MM}/{YYYY}/{FileData}_{YYYY}_{MM}_{DD}.csv

- B. /{DD}/{MM}/{YYYY}/{SubjectArea}/{DataSource}/{FileData}_{YYYY}_{MM}_{DD}.csv

- C. /{YYYY}/{MM}/{DD}/{SubjectArea}/{DataSource}/{FileData}_{YYYY}_{MM}_{DD}.csv

- D. /{SubjectArea}/{DataSource}/{YYYY}/{MM}/{DD}/{FileData}_{YYYY}_{MM}_{DD}.csv

Answer:

D

Explanation:

There's an important reason to put the date at the end of the directory structure. If you want to lock down certain regions or

subject matters to users/groups, then you can easily do so with the POSIX permissions. Otherwise, if there was a need to

restrict a certain security group to viewing just the UK data or certain planes, with the date structure in front a separate

permission would be required for numerous directories under every hour directory. Additionally, having the date structure in

front would exponentially increase the number of directories as time went on.

Note: In IoT workloads, there can be a great deal of data being landed in the data store that spans across numerous

products, devices, organizations, and customers. Its important to pre-plan the directory layout for organization, security, and

efficient processing of the data for down-stream consumers. A general template to consider might be the following layout:

{Region}/{SubjectMatter(s)}/{yyyy}/{mm}/{dd}/{hh}/