Linux Foundation CKS Exam Questions

Questions for the CKS were updated on: Feb 20, 2026

Page 1 out of 4. Viewing questions 1-15 out of 48

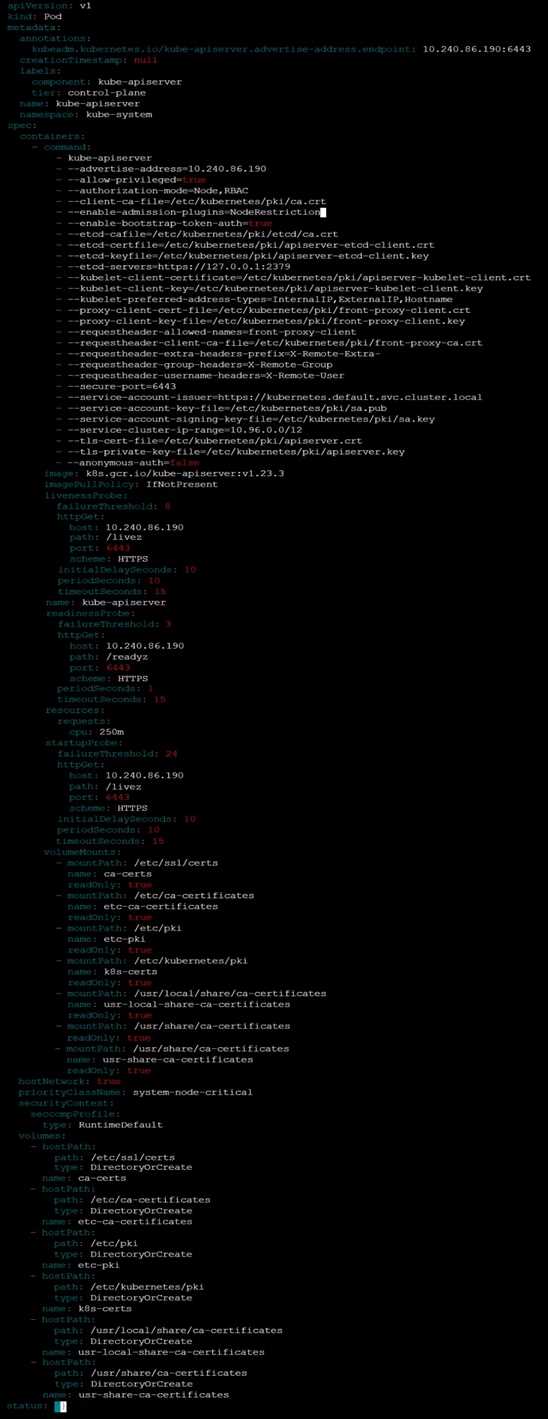

Question 1

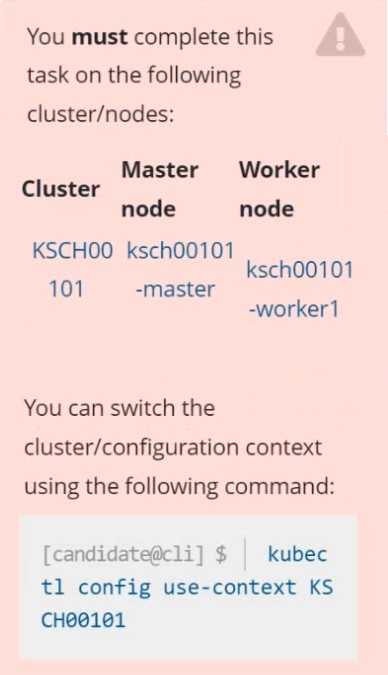

Context

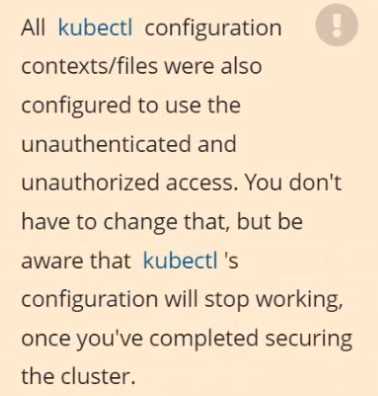

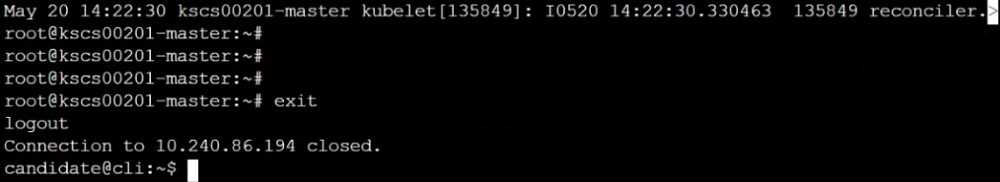

The kubeadm-created cluster's Kubernetes API server was, for testing purposes, temporarily configured to allow unauthenticated and unauthorized access, granting the anonymous user cluster-admin access.

Task

Reconfigure the cluster's Kubernetes API server to ensure that only authenticated and authorized REST requests are allowed.

Use authorization mode Node,RBAC and admission controller NodeRestriction.

To clean up, remove the ClusterRoleBinding for the user system:anonymous.

Answer:

See explanation below.

Explanation:

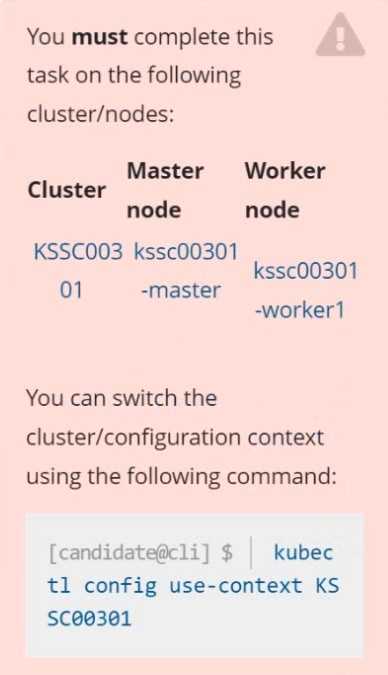
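A minimal sketch of the flag change the task names, run against a scratch copy rather than the live manifest. It assumes the kubeadm default location `/etc/kubernetes/manifests/kube-apiserver.yaml` for the real file; the starting values in the sample (`AlwaysAllow`, `AlwaysAdmit`) are hypothetical stand-ins for whatever the misconfigured cluster actually contains, and the name of the ClusterRoleBinding is not given in the task, so it is left as a placeholder.

```shell
# Scratch copy with hypothetical insecure starting values. On a kubeadm
# cluster the real file is /etc/kubernetes/manifests/kube-apiserver.yaml.
cat > kube-apiserver.sample.yaml <<'EOF'
    - kube-apiserver
    - --authorization-mode=AlwaysAllow
    - --enable-admission-plugins=AlwaysAdmit
EOF

# Rewrite the two flags to the values the task asks for.
sed \
  -e 's/--authorization-mode=.*/--authorization-mode=Node,RBAC/' \
  -e 's/--enable-admission-plugins=.*/--enable-admission-plugins=NodeRestriction/' \
  kube-apiserver.sample.yaml > kube-apiserver.fixed.yaml
cat kube-apiserver.fixed.yaml

# Cleanup on the real cluster (look the binding name up first):
#   kubectl get clusterrolebinding -o wide | grep system:anonymous
#   kubectl delete clusterrolebinding <name-from-previous-command>
```

Because the API server runs as a static Pod, the kubelet should recreate it on its own once the real manifest is saved.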

Question 2

Task

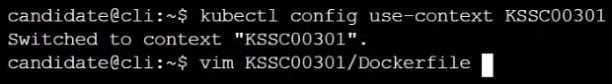

Analyze and edit the given Dockerfile /home/candidate/KSSC00301/Dockerfile (based on the ubuntu:16.04 image), fixing two instructions present in the file that are prominent security/best-practice issues.

Analyze and edit the given manifest file /home/candidate/KSSC00301/deployment.yaml, fixing two fields present in the file that are prominent security/best-practice issues.

Answer:

See explanation below.

Explanation:

Question 3

Context

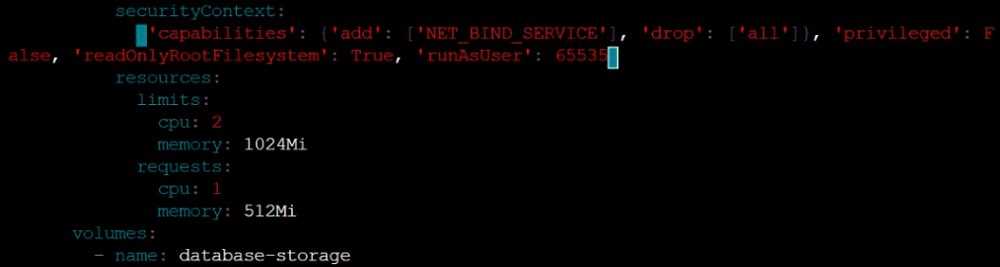

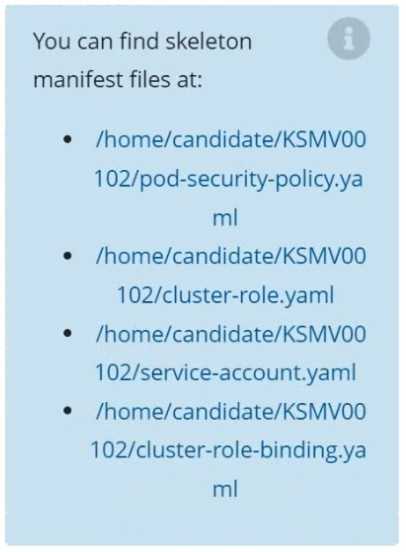

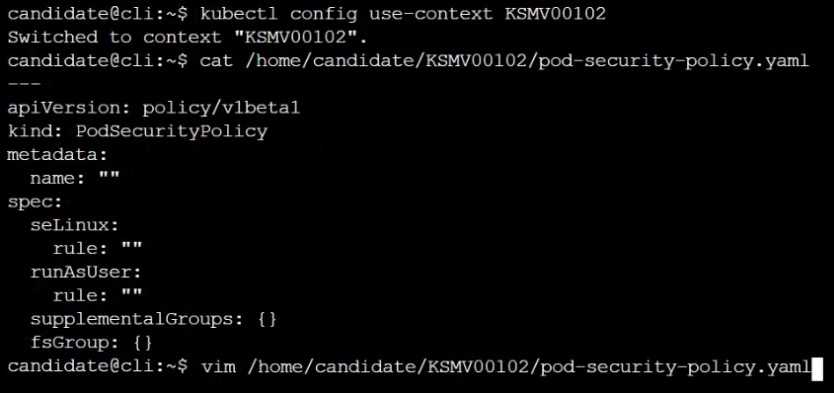

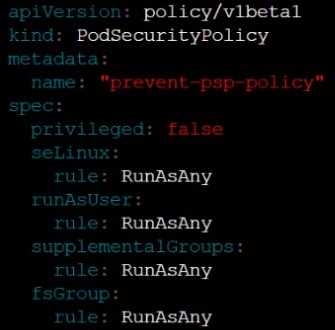

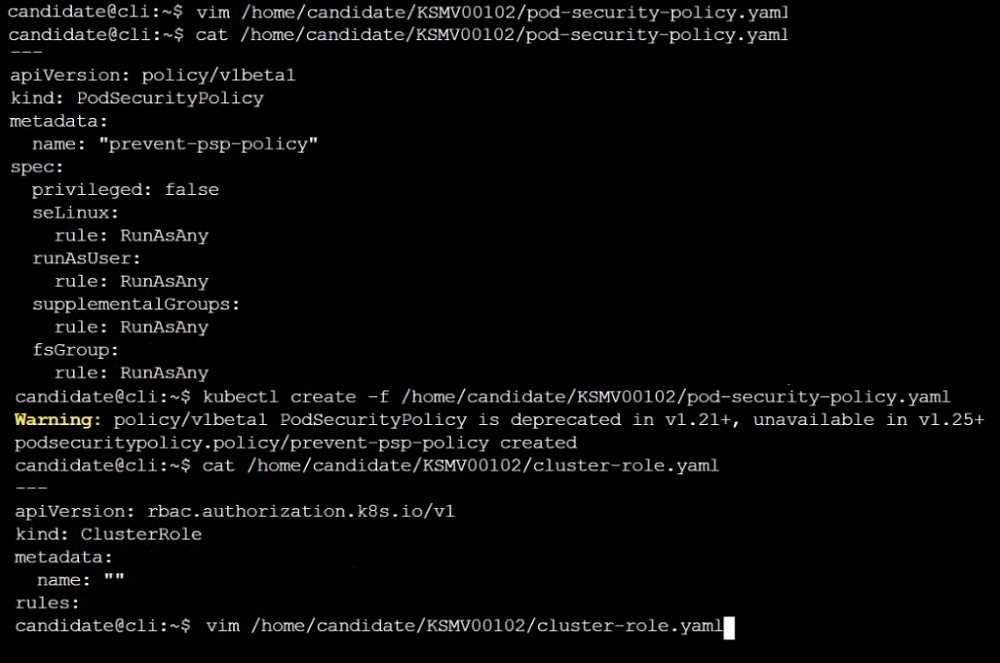

A PodSecurityPolicy shall prevent the creation of privileged Pods in a specific namespace.

Task

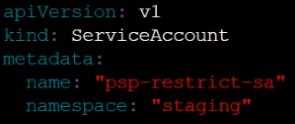

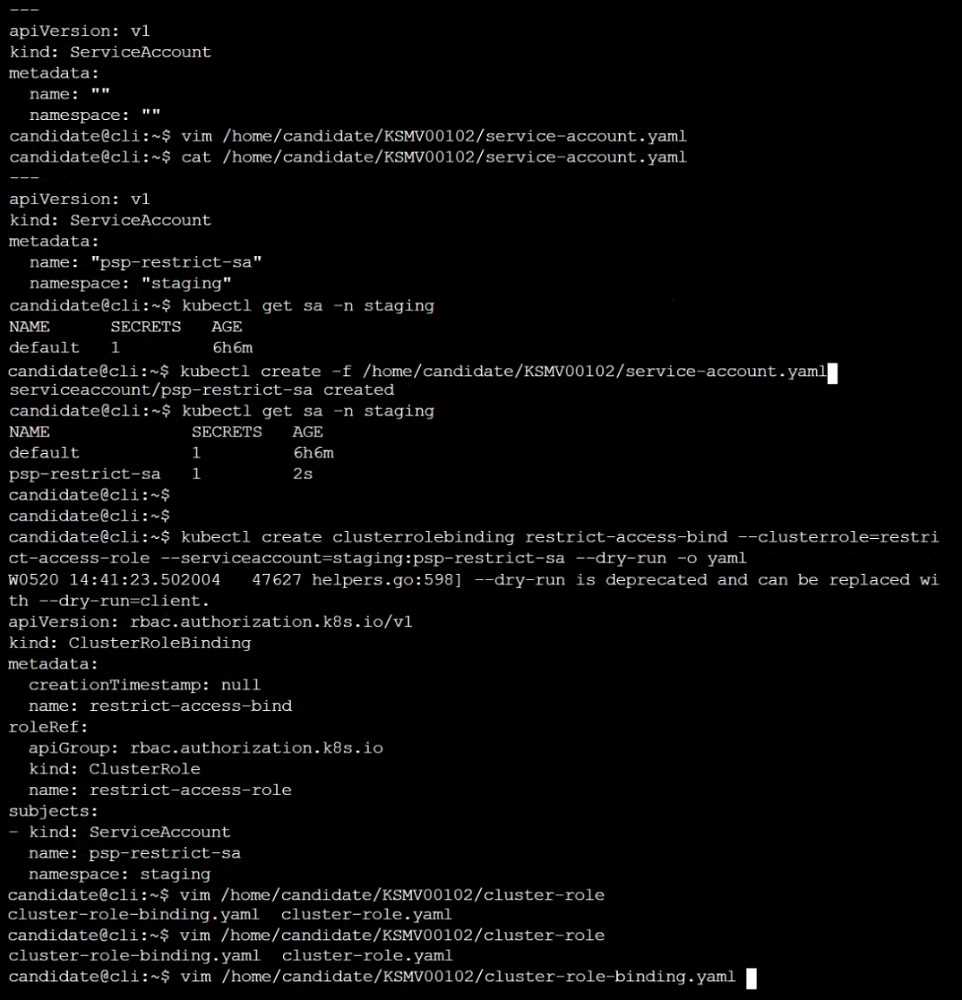

Create a new PodSecurityPolicy named prevent-psp-policy, which prevents the creation of privileged Pods.

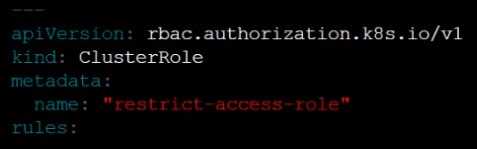

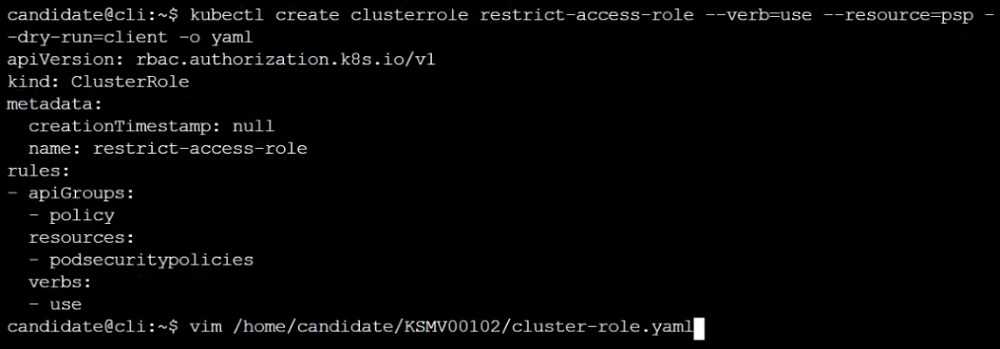

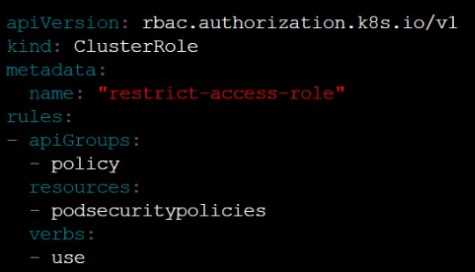

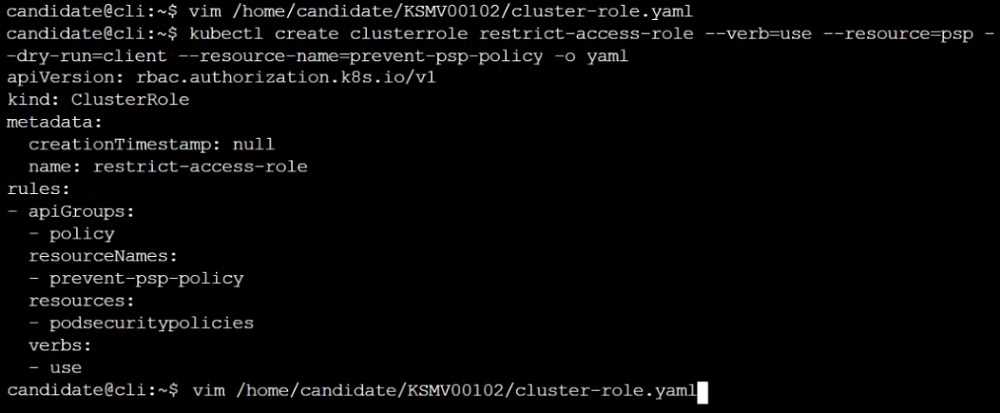

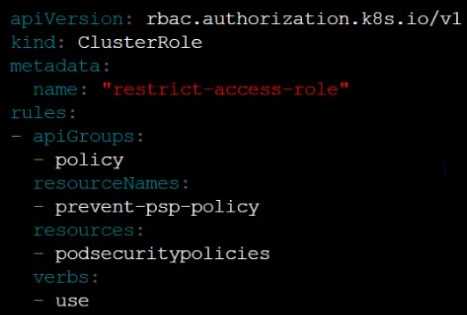

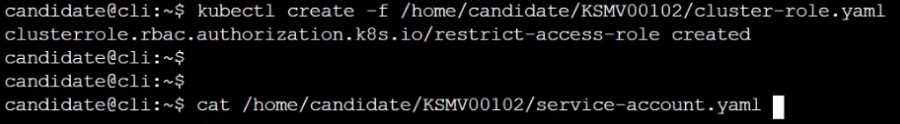

Create a new ClusterRole named restrict-access-role, which uses the newly created PodSecurityPolicy prevent-psp-policy.

Create a new ServiceAccount named psp-restrict-sa in the existing namespace staging.

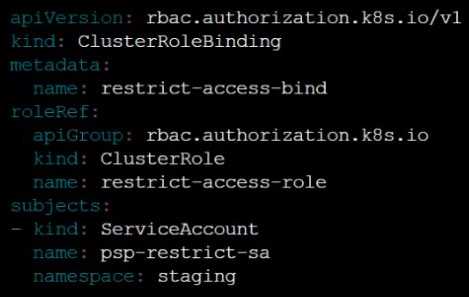

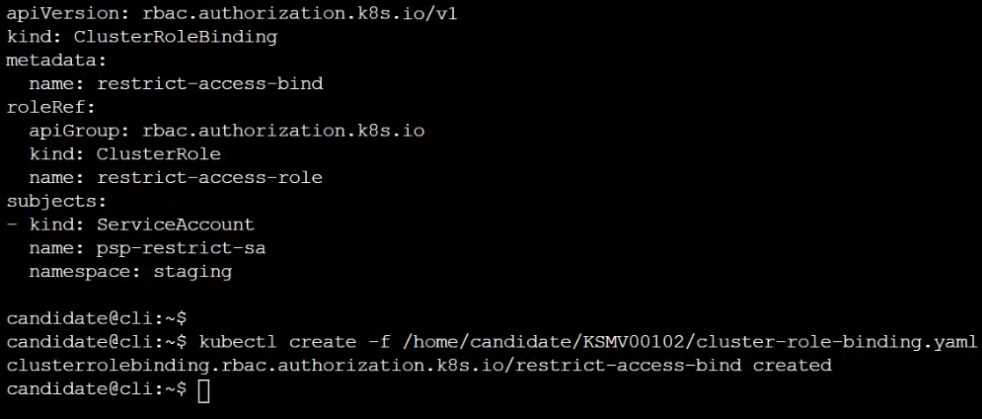

Finally, create a new ClusterRoleBinding named restrict-access-bind, which binds the newly created ClusterRole restrict-access-role to the newly created ServiceAccount psp-restrict-sa.

Answer:

See explanation below.

Explanation:

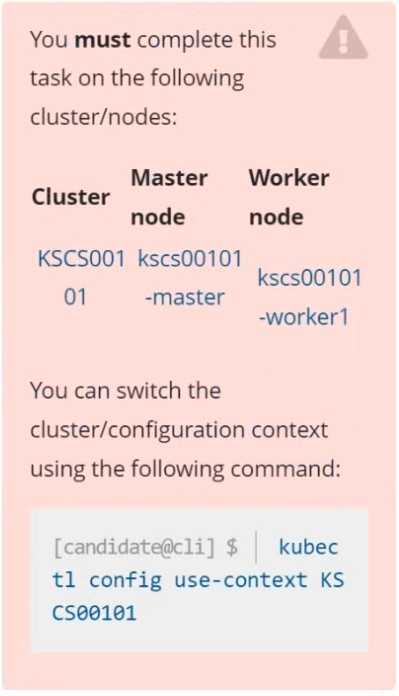
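The four objects the task lists could be written roughly as follows. This is a sketch, not the graded answer: only `privileged: false` and the object names come from the task, while the permissive `RunAsAny` rules are an assumption chosen to fill the fields a PodSecurityPolicy requires. PodSecurityPolicy was removed in Kubernetes 1.25, so the manifest only applies on clusters that still serve `policy/v1beta1`.

```shell
cat > prevent-psp.yaml <<'EOF'
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: prevent-psp-policy
spec:
  privileged: false          # the one setting the task requires
  # Remaining rules are mandatory PSP fields, left permissive (assumption).
  seLinux:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  runAsUser:
    rule: RunAsAny
  fsGroup:
    rule: RunAsAny
  volumes:
  - '*'
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: restrict-access-role
rules:
- apiGroups: ['policy']
  resources: ['podsecuritypolicies']
  verbs: ['use']
  resourceNames: ['prevent-psp-policy']
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: psp-restrict-sa
  namespace: staging
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: restrict-access-bind
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: restrict-access-role
subjects:
- kind: ServiceAccount
  name: psp-restrict-sa
  namespace: staging
EOF
# On a cluster older than 1.25:
#   kubectl apply -f prevent-psp.yaml
```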

Question 4

Context

A default-deny NetworkPolicy avoids accidentally exposing a Pod in a namespace that doesn't have any other NetworkPolicy defined.

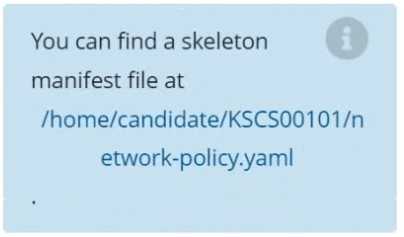

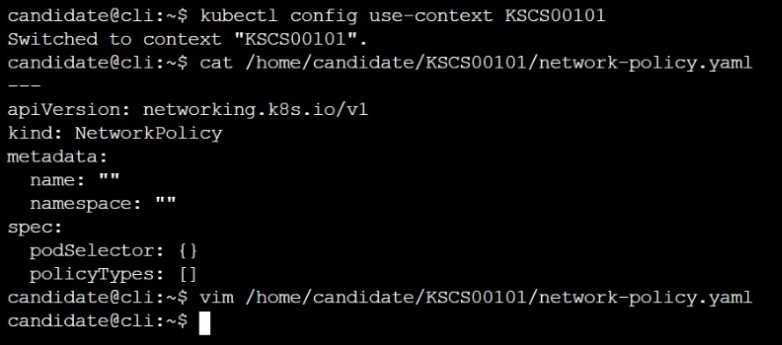

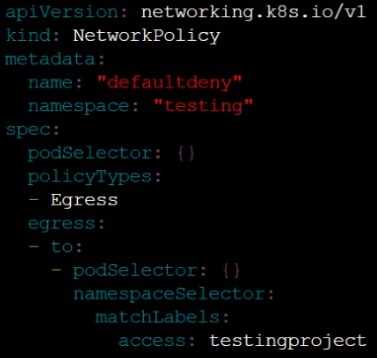

Task

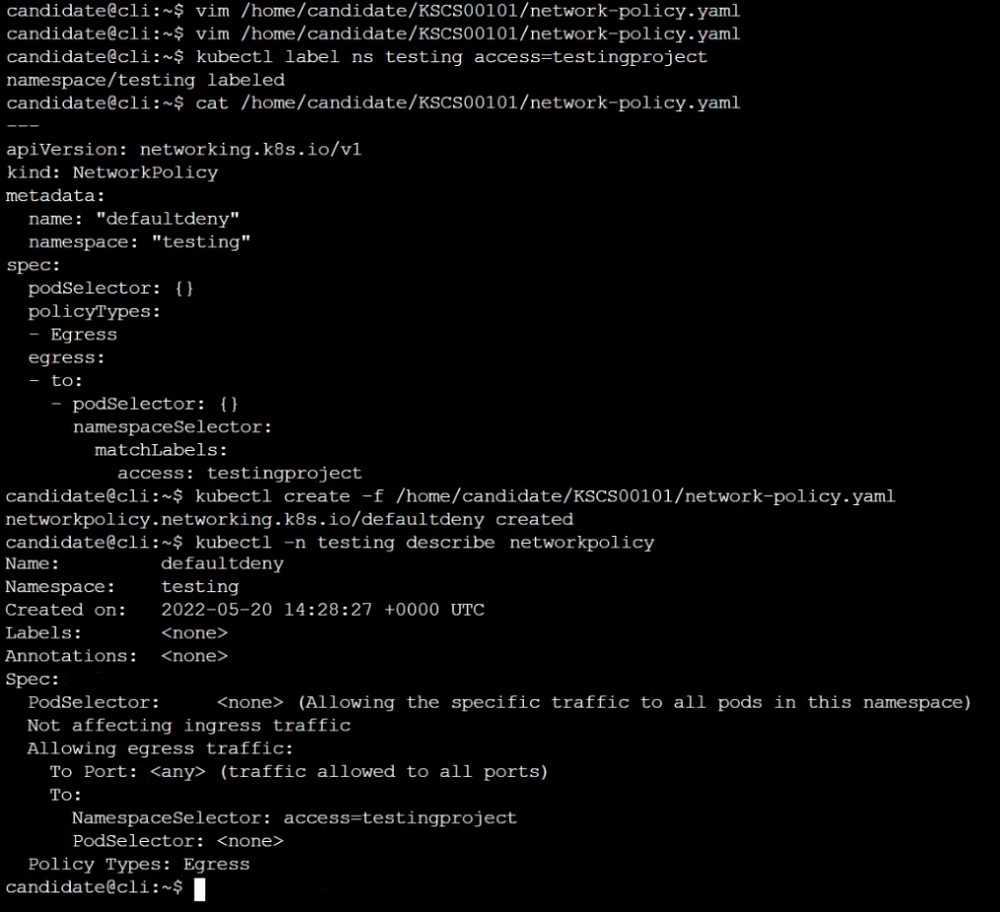

Create a new default-deny NetworkPolicy named defaultdeny in the namespace testing for all traffic of type Egress.

The new NetworkPolicy must deny all Egress traffic in the namespace testing.

Apply the newly created default-deny NetworkPolicy to all Pods running in namespace testing.

Answer:

See explanation below.

Explanation:
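One way to satisfy the task, sketched as a manifest written from the shell. The policy name and namespace come from the task; the file name `defaultdeny.yaml` is an arbitrary choice.

```shell
cat > defaultdeny.yaml <<'EOF'
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: defaultdeny
  namespace: testing
spec:
  podSelector: {}     # empty selector matches every Pod in the namespace
  policyTypes:
  - Egress            # no egress rules are listed, so all egress is denied
EOF
# On the cluster:
#   kubectl apply -f defaultdeny.yaml
```

Leaving Ingress out of `policyTypes` means this policy does not affect inbound traffic, which matches the Egress-only wording of the task.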

Question 5

Context

A CIS Benchmark tool was run against the kubeadm-created cluster and found multiple issues that must be addressed immediately.

Task

Fix all issues via configuration and restart the affected components to ensure the new settings take effect.

Fix all of the following violations that were found against the API server:

Fix all of the following violations that were found against the Kubelet:

Fix all of the following violations that were found against etcd:

Answer:

See explanation below.

Explanation:

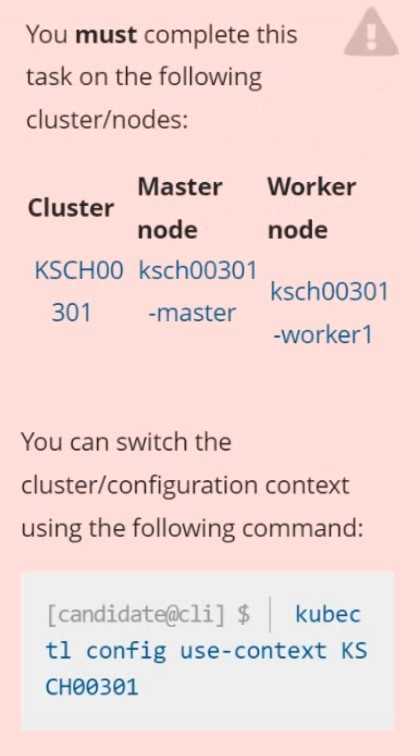

Question 6

Context

Your organization’s security policy includes:

ServiceAccounts must not automount API credentials

ServiceAccount names must end in "-sa"

The Pod specified in the manifest file /home/candidate/KSCH00301/pod-manifest.yaml fails to schedule because of an incorrectly specified ServiceAccount.

Complete the following tasks:

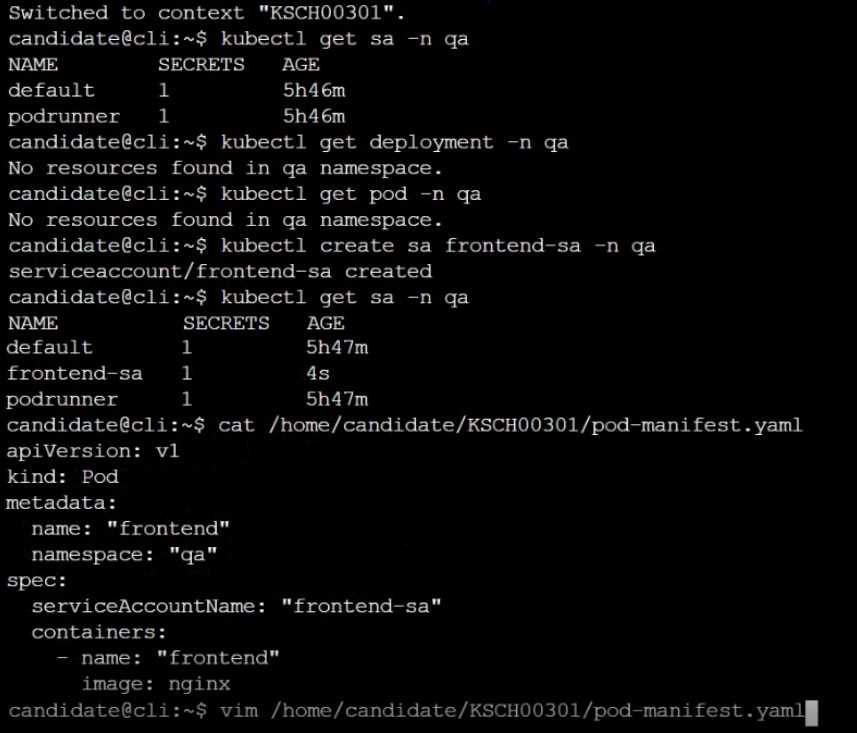

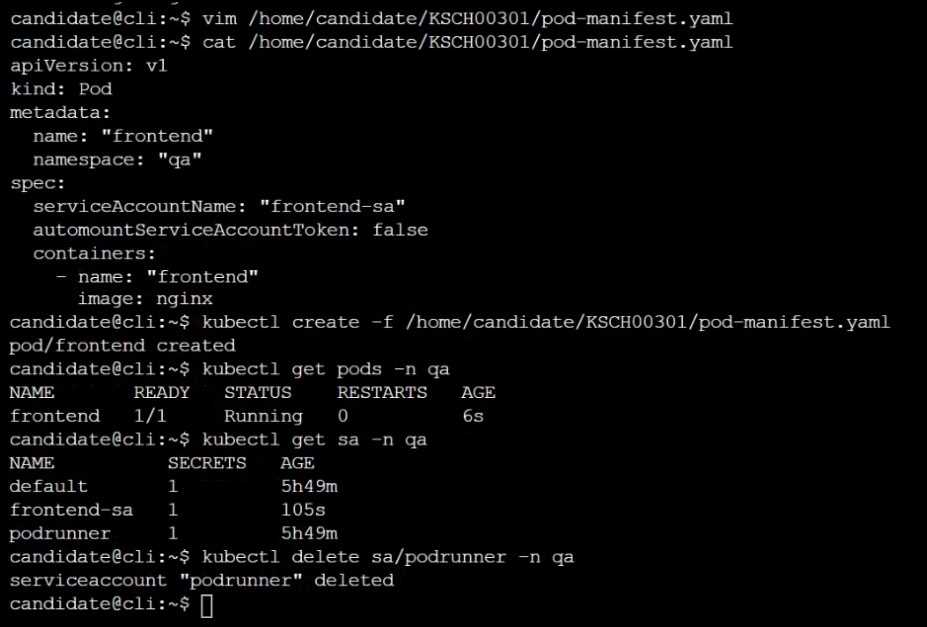

Task

1. Create a new ServiceAccount named frontend-sa in the existing namespace qa. Ensure the ServiceAccount does not automount API credentials.

2. Using the manifest file at /home/candidate/KSCH00301/pod-manifest.yaml, create the Pod.

3. Finally, clean up any unused ServiceAccounts in namespace qa.

Answer:

See the explanation below.

Explanation:

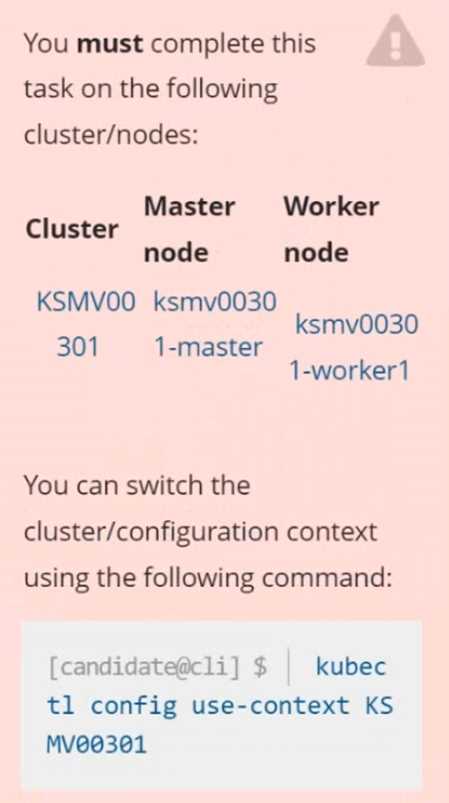

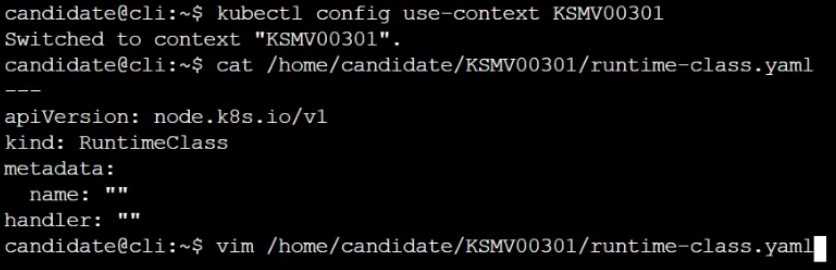

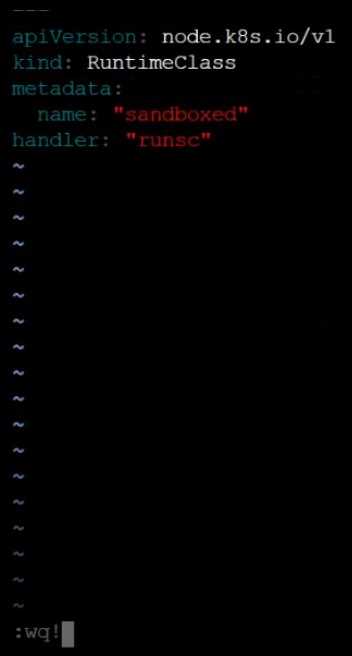

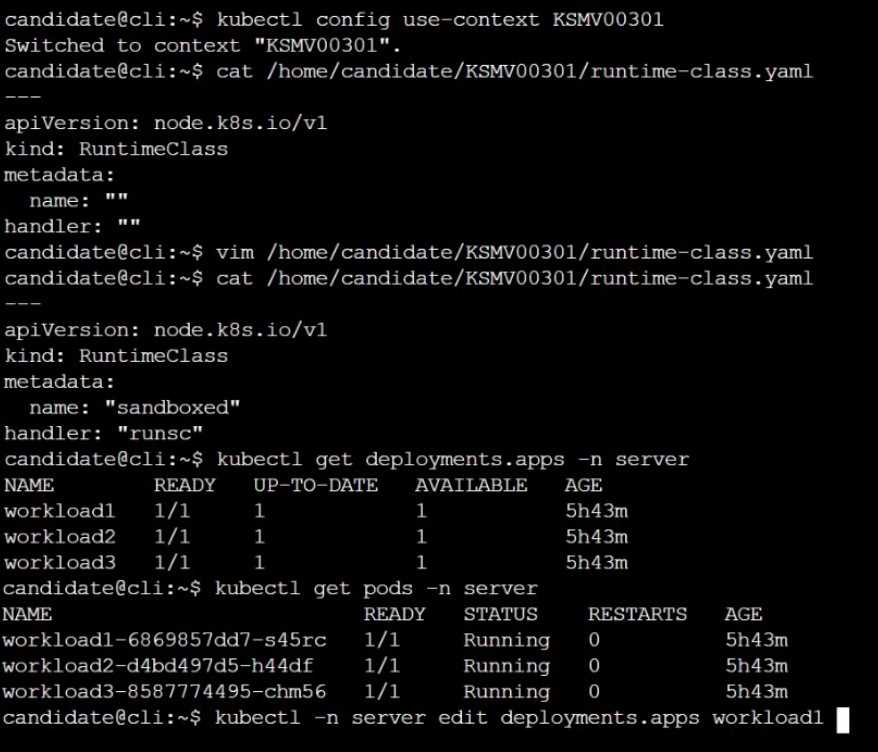

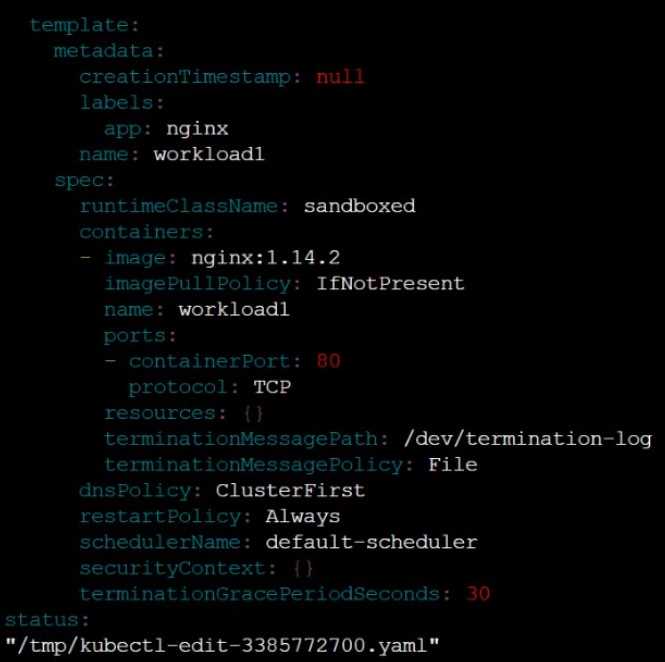

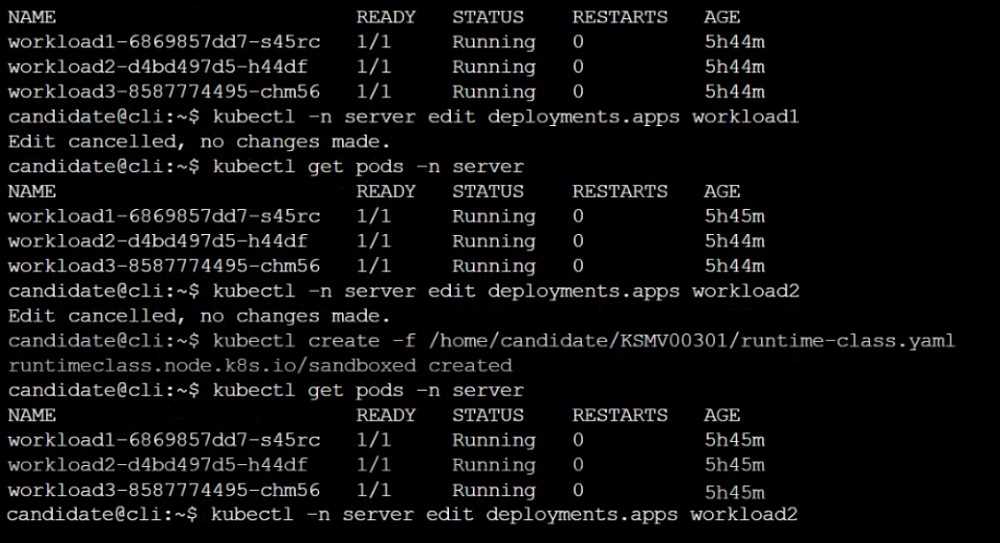

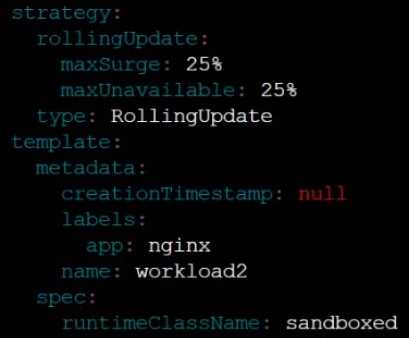

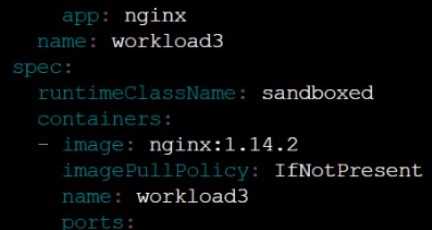
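The ServiceAccount from step 1 might look like the sketch below. The name and namespace come from the task; the file name is arbitrary, and the follow-up commands are listed as comments because the contents of the Pod manifest and the set of existing ServiceAccounts are not shown in the text.

```shell
cat > frontend-sa.yaml <<'EOF'
apiVersion: v1
kind: ServiceAccount
metadata:
  name: frontend-sa
  namespace: qa
automountServiceAccountToken: false   # do not mount API credentials into Pods
EOF
# On the cluster:
#   kubectl apply -f frontend-sa.yaml
# Set spec.serviceAccountName: frontend-sa in the Pod manifest, then create it:
#   kubectl apply -f /home/candidate/KSCH00301/pod-manifest.yaml
# Compare existing ServiceAccounts with the ones Pods use before deleting any:
#   kubectl get sa -n qa
#   kubectl get pods -n qa -o jsonpath='{range .items[*]}{.spec.serviceAccountName}{"\n"}{end}'
```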

Question 7

Context

This cluster uses containerd as CRI runtime.

Containerd's default runtime handler is runc. Containerd has been prepared to support an additional runtime handler, runsc (gVisor).

Task

Create a RuntimeClass named sandboxed using the prepared runtime handler named runsc.

Update all Pods in the namespace server to run on gVisor.

Answer:

See the explanation below.

Explanation:


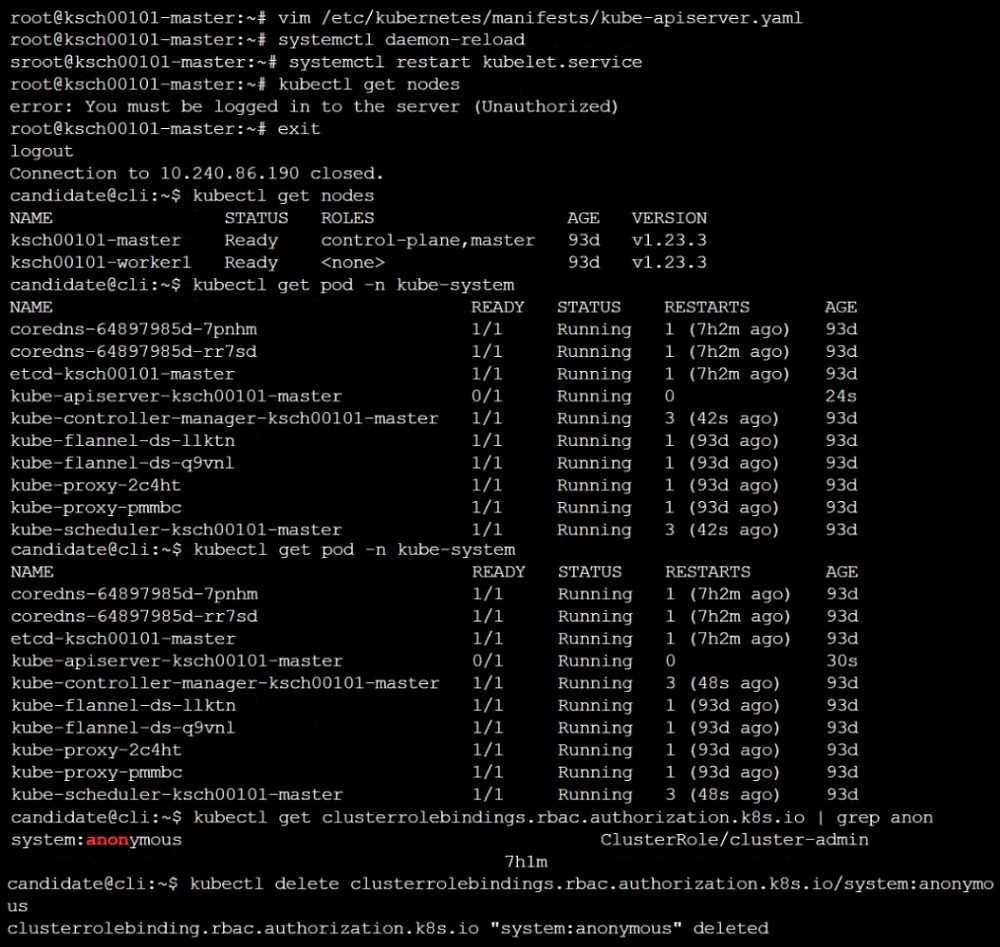
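A sketch of the two pieces involved: the RuntimeClass itself and a patch that sets `runtimeClassName` in a workload's Pod template. The file names are arbitrary, and the patch assumes the Pods in namespace server are managed by Deployments, which the task text does not state.

```shell
cat > sandboxed-runtimeclass.yaml <<'EOF'
apiVersion: node.k8s.io/v1
kind: RuntimeClass
metadata:
  name: sandboxed
handler: runsc
EOF

# Patch body that sets runtimeClassName in a workload's Pod template.
cat > runtimeclass-patch.yaml <<'EOF'
spec:
  template:
    spec:
      runtimeClassName: sandboxed
EOF
# On the cluster:
#   kubectl apply -f sandboxed-runtimeclass.yaml
#   kubectl -n server get deployments
#   kubectl -n server patch deployment <name> --patch-file runtimeclass-patch.yaml
```

Changing the Pod template triggers a rollout, so the replacement Pods start under the runsc handler. Standalone Pods would have to be deleted and recreated instead, since `runtimeClassName` cannot be changed on a running Pod.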

Question 8

You can switch the cluster/configuration context using the following command:

[desk@cli] $ kubectl config use-context dev

Context:

A CIS Benchmark tool was run against the kubeadm-created cluster and found multiple issues that must be addressed.

Task:

Fix all issues via configuration and restart the affected components to ensure the new settings take effect.

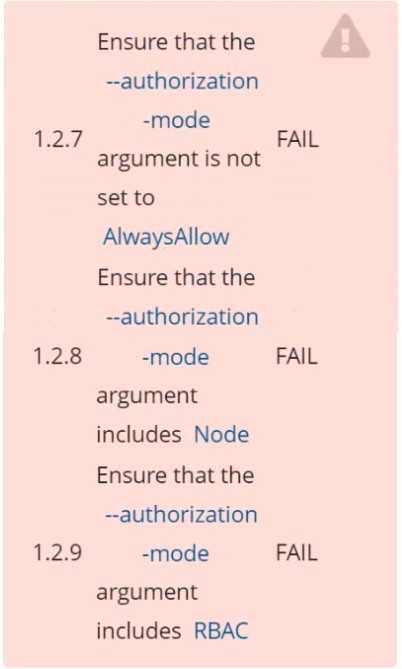

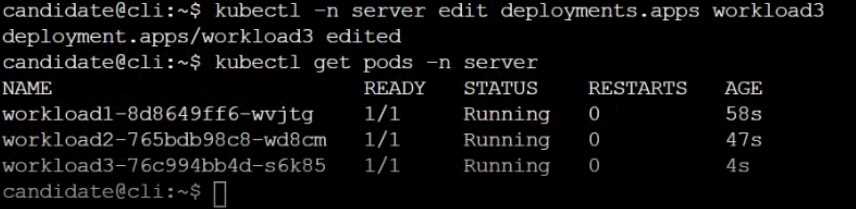

Fix all of the following violations that were found against the API server:

1.2.7 authorization-mode argument is not set to AlwaysAllow FAIL

1.2.8 authorization-mode argument includes Node FAIL

1.2.9 authorization-mode argument includes RBAC FAIL

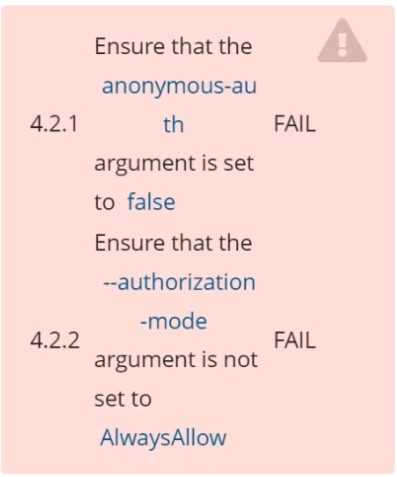

Fix all of the following violations that were found against the Kubelet:

4.2.1 Ensure that the anonymous-auth argument is set to false FAIL

4.2.2 authorization-mode argument is not set to AlwaysAllow FAIL (Use Webhook authn/authz where possible)

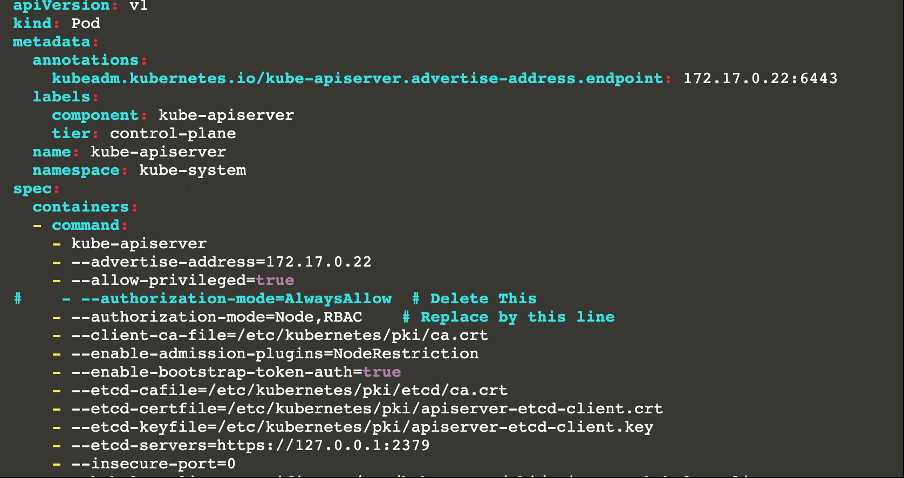

Fix all of the following violations that were found against etcd:

2.2 Ensure that the client-cert-auth argument is set to true

Answer:

See the explanation below.

Explanation:

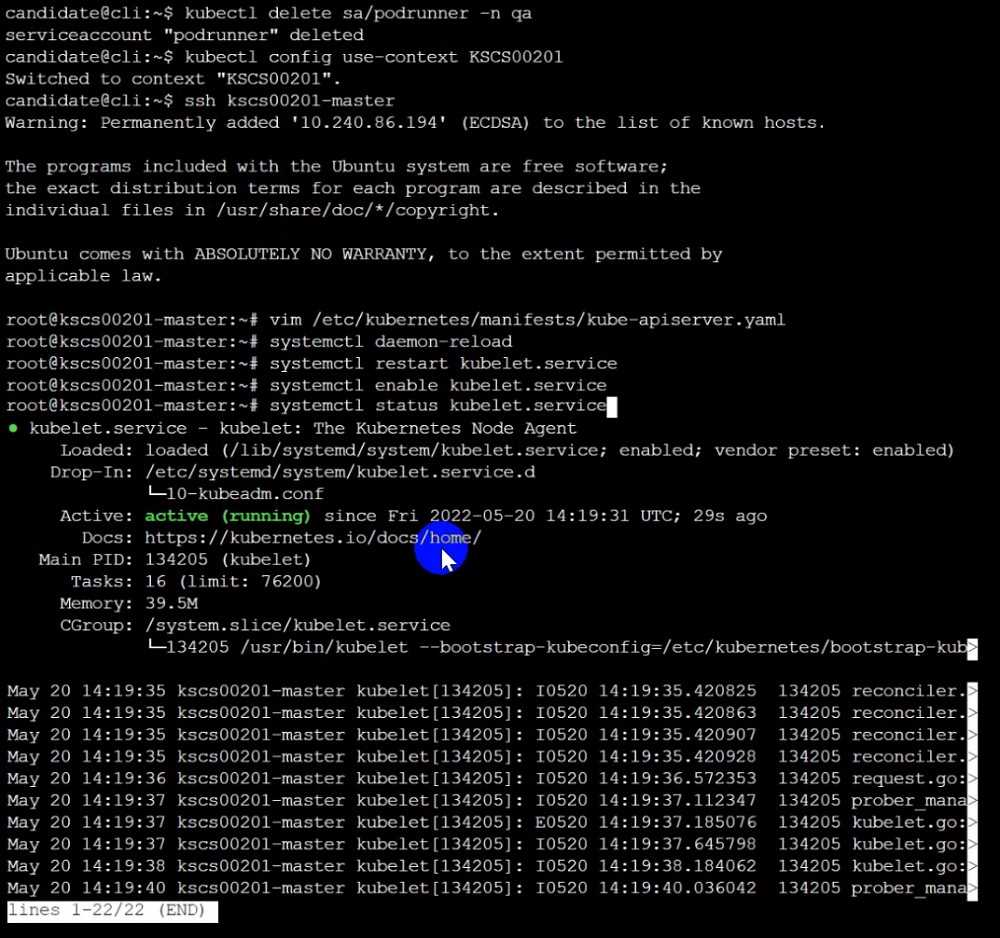

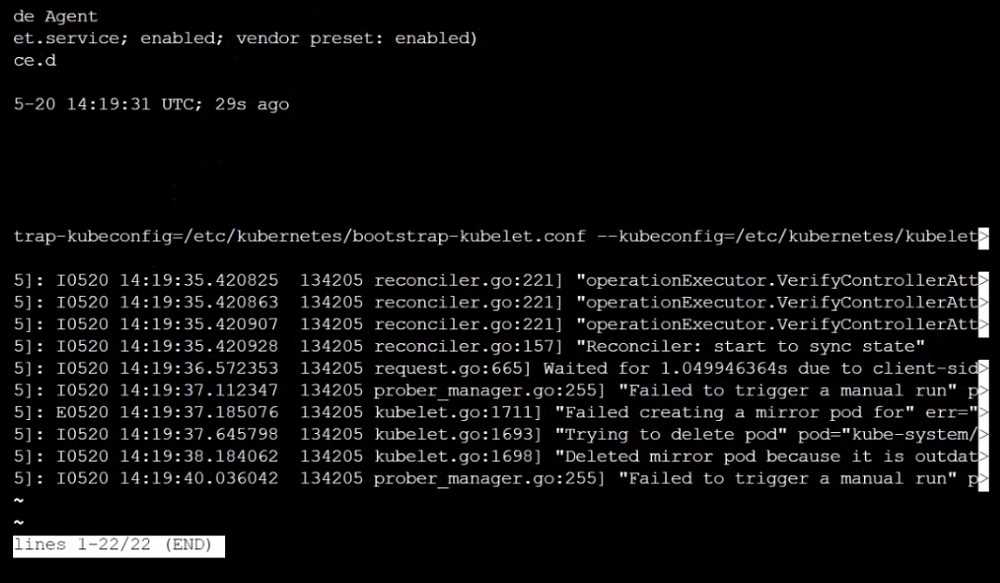

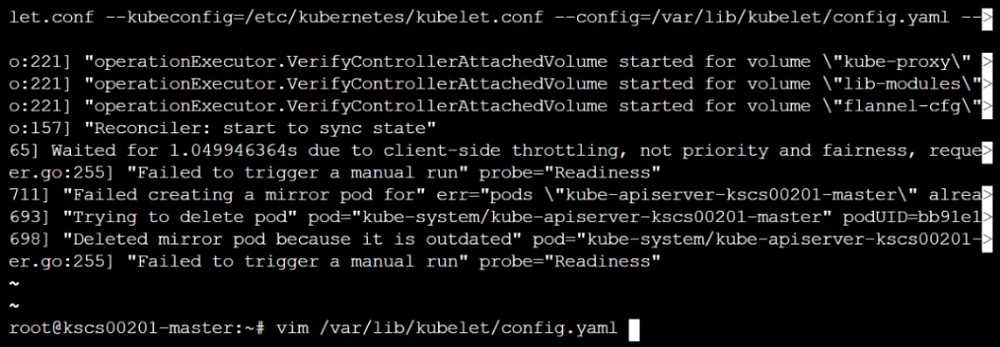

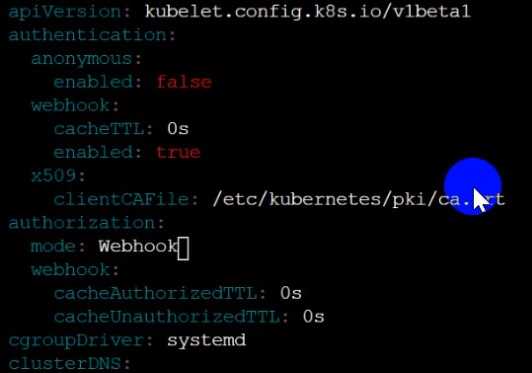

worker1 $ vim /var/lib/kubelet/config.yaml

authentication:
  anonymous:
    enabled: false   # was: true
authorization:
  mode: Webhook      # was: AlwaysAllow

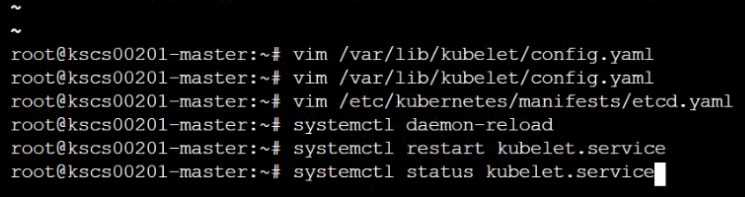

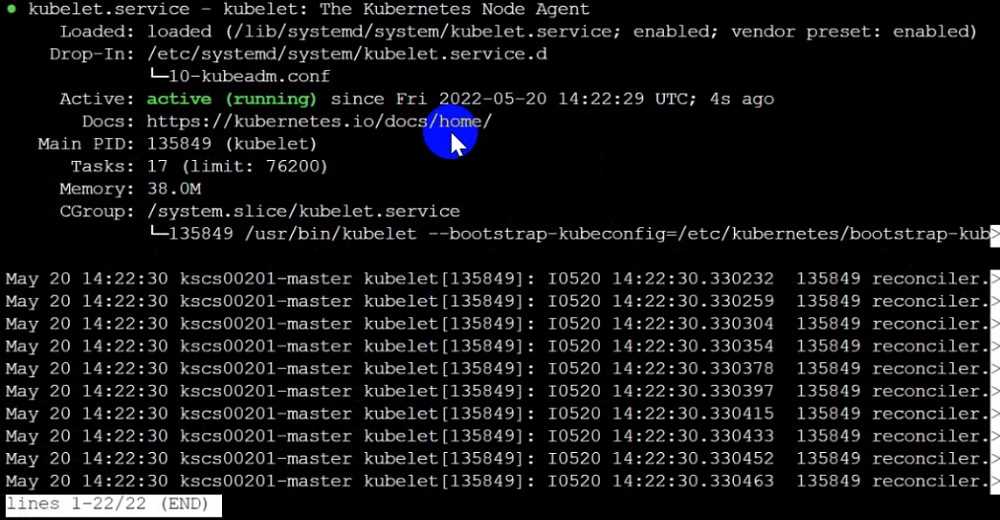

worker1 $ systemctl restart kubelet  # To reload the kubelet config

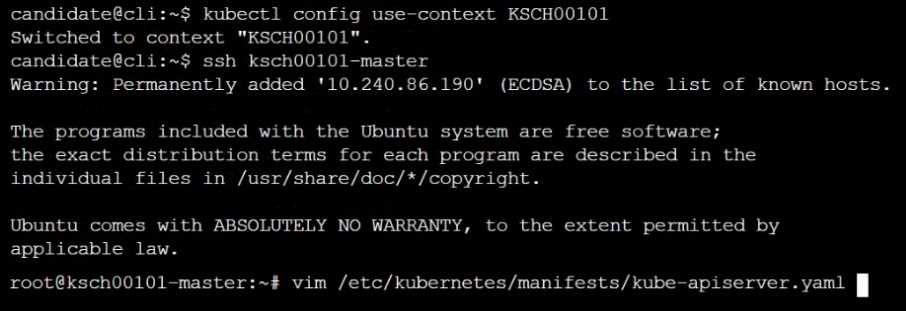

ssh to master1

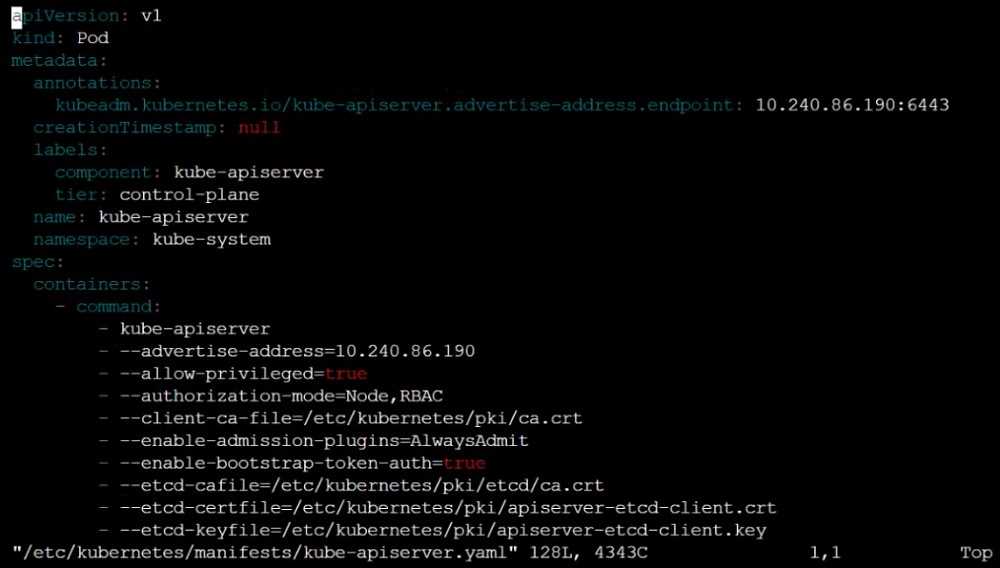

master1 $ vim /etc/kubernetes/manifests/kube-apiserver.yaml

- --authorization-mode=Node,RBAC

master1 $ vim /etc/kubernetes/manifests/etcd.yaml

- --client-cert-auth=true

Explanation

ssh to worker1

worker1 $ vim /var/lib/kubelet/config.yaml

apiVersion: kubelet.config.k8s.io/v1beta1
authentication:
  anonymous:
    enabled: false   # was: true
  webhook:
    cacheTTL: 0s
    enabled: true
  x509:
    clientCAFile: /etc/kubernetes/pki/ca.crt
authorization:
  mode: Webhook      # was: AlwaysAllow
  webhook:
    cacheAuthorizedTTL: 0s
    cacheUnauthorizedTTL: 0s
cgroupDriver: systemd
clusterDNS:
- 10.96.0.10
clusterDomain: cluster.local
cpuManagerReconcilePeriod: 0s
evictionPressureTransitionPeriod: 0s
fileCheckFrequency: 0s
healthzBindAddress: 127.0.0.1
healthzPort: 10248
httpCheckFrequency: 0s
imageMinimumGCAge: 0s
kind: KubeletConfiguration
logging: {}
nodeStatusReportFrequency: 0s
nodeStatusUpdateFrequency: 0s
resolvConf: /run/systemd/resolve/resolv.conf
rotateCertificates: true
runtimeRequestTimeout: 0s
staticPodPath: /etc/kubernetes/manifests
streamingConnectionIdleTimeout: 0s
syncFrequency: 0s
volumeStatsAggPeriod: 0s

worker1 $ systemctl restart kubelet  # To reload the kubelet config

ssh to master1

master1 $ vim /etc/kubernetes/manifests/kube-apiserver.yaml

- --authorization-mode=Node,RBAC

master1 $ vim /etc/kubernetes/manifests/etcd.yaml

- --client-cert-auth=true

Reference:

kubelet parameters:

https://kubernetes.io/docs/reference/command-line-tools-reference/kubelet/

kube-apiserver parameters:

https://kubernetes.io/docs/reference/command-line-tools-reference/kube-apiserver/

etcd parameters:

https://kubernetes.io/docs/tasks/administer-cluster/configure-upgrade-etcd/

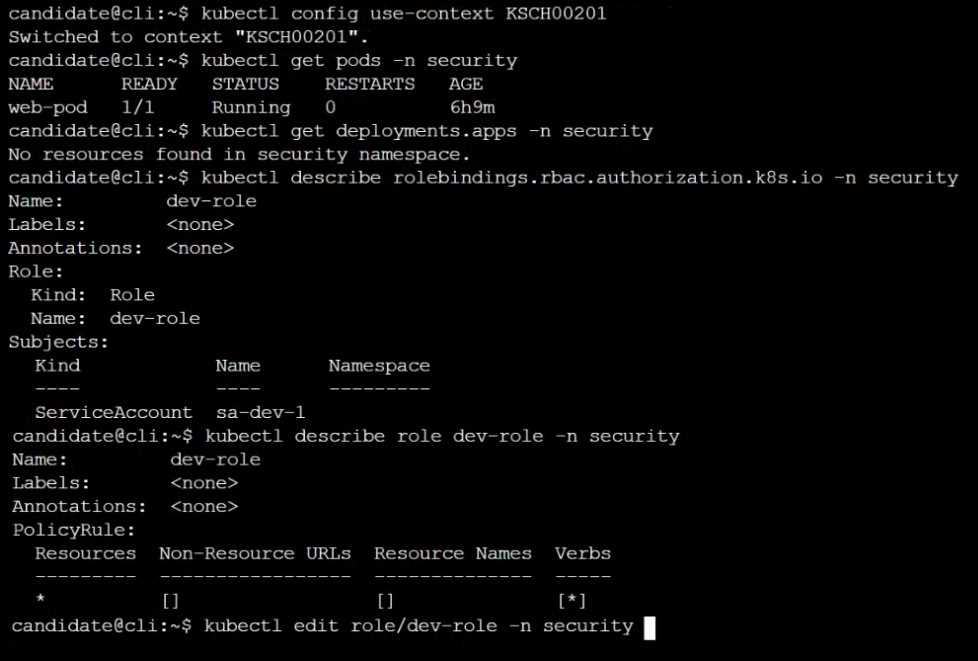

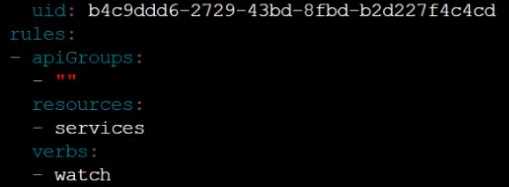

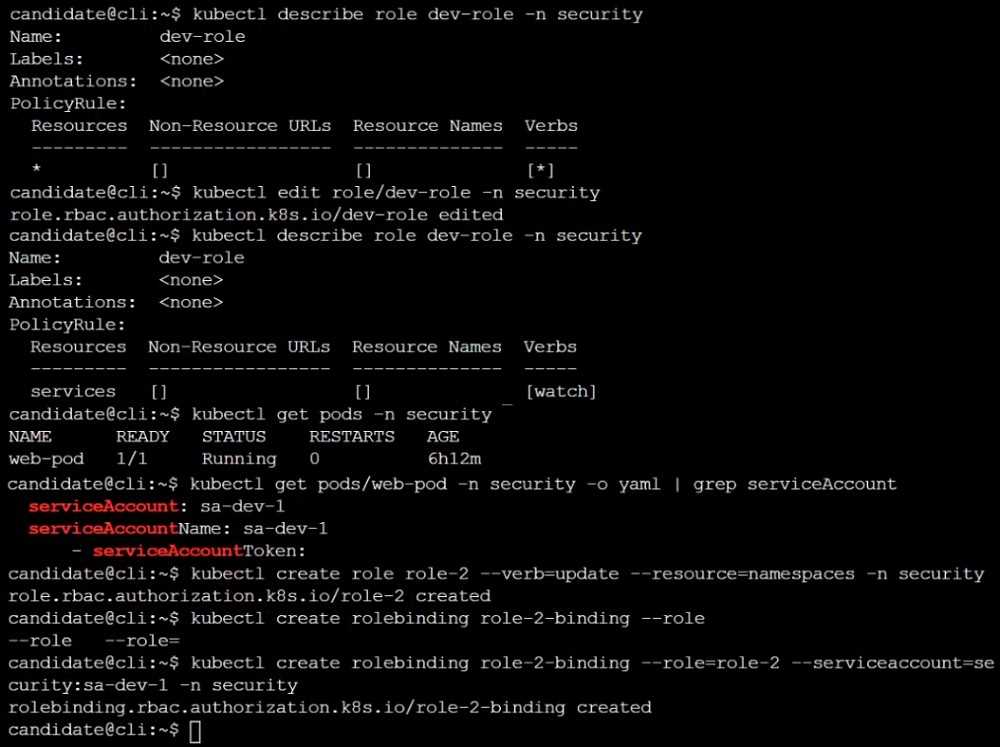

Question 9

You can switch the cluster/configuration context using the following command:

[desk@cli] $ kubectl config use-context prod-account

Context:

A Role bound to a Pod's ServiceAccount grants overly permissive permissions. Complete the following tasks to reduce the set of permissions.

Task:

Given an existing Pod named web-pod running in the namespace database.

1. Edit the existing Role bound to the Pod's ServiceAccount test-sa to only allow performing get operations, only on resources of type Pods.

2. Create a new Role named test-role-2 in the namespace database, which only allows performing update operations, only on resources of type statefulsets.

3. Create a new RoleBinding named test-role-2-bind binding the newly created Role to the Pod's ServiceAccount.

Note: Don't delete the existing RoleBinding.

Answer:

See the explanation below.

Explanation:

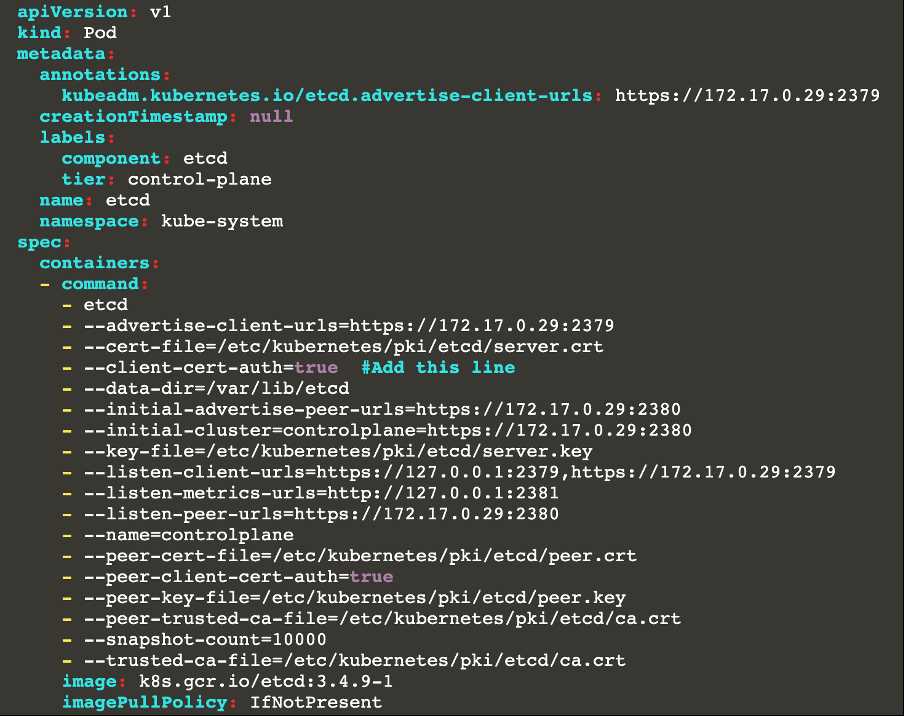

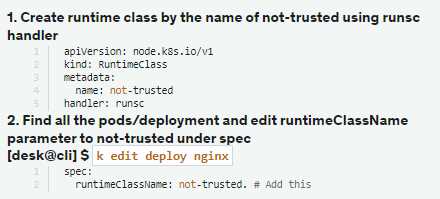
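Steps 2 and 3 can be sketched as a pair of manifests. All object names come from the task; the file name is arbitrary. Step 1 depends on the name of the existing Role, which the text does not give, so it appears only as commented commands with a placeholder.

```shell
cat > test-role-2.yaml <<'EOF'
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: test-role-2
  namespace: database
rules:
- apiGroups: ["apps"]          # StatefulSets live in the apps API group
  resources: ["statefulsets"]
  verbs: ["update"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: test-role-2-bind
  namespace: database
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: test-role-2
subjects:
- kind: ServiceAccount
  name: test-sa
  namespace: database
EOF
# Step 1, on the cluster: find the Role bound to test-sa, then reduce its
# rules to resources ["pods"] and verbs ["get"]:
#   kubectl -n database get rolebinding -o wide
#   kubectl -n database edit role <role-name>
# Steps 2 and 3:
#   kubectl apply -f test-role-2.yaml
```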

Question 10

Context:

Cluster: gvisor

Master node: master1

Worker node: worker1

You can switch the cluster/configuration context using the following command:

[desk@cli] $ kubectl config use-context gvisor

Context: This cluster has been prepared to support the runtime handler runsc as well as the traditional one.

Task:

Create a RuntimeClass named not-trusted using the prepared runtime handler named runsc.

Update all Pods in the namespace server to run on the new runtime.

Answer:

See the explanation below.

Explanation:

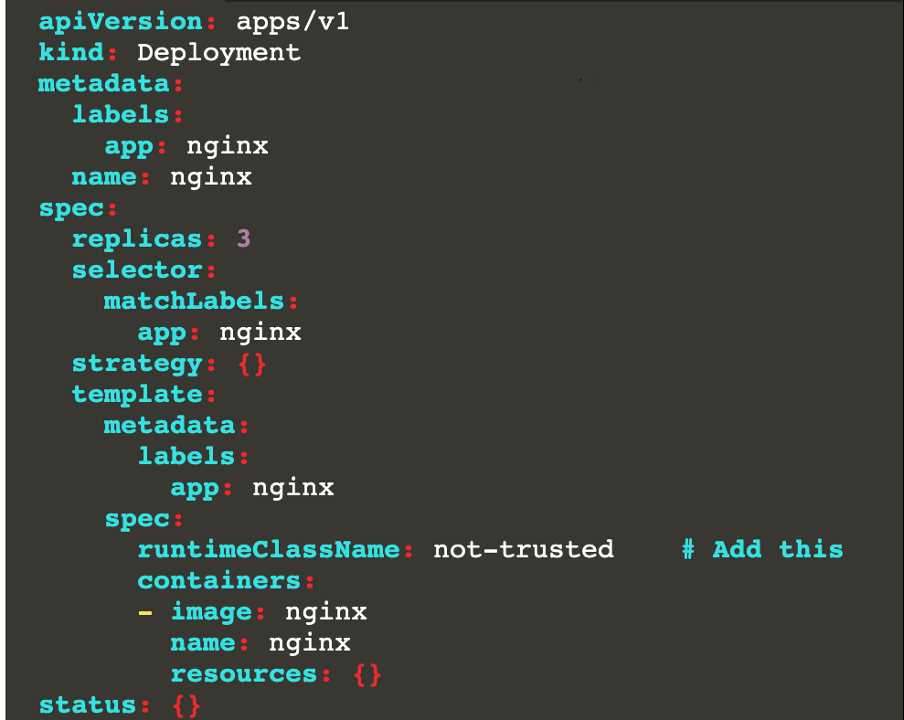

Explanation

[desk@cli] $ vim runtime.yaml

apiVersion: node.k8s.io/v1
kind: RuntimeClass
metadata:
  name: not-trusted
handler: runsc

[desk@cli] $ k apply -f runtime.yaml

[desk@cli] $ k get pods -n server

NAME                     READY   STATUS    RESTARTS   AGE
nginx-6798fc88e8-chp6r   1/1     Running   0          11m
nginx-6798fc88e8-fs53n   1/1     Running   0          11m
nginx-6798fc88e8-ndved   1/1     Running   0          11m

[desk@cli] $ k get deploy -n server

NAME    READY   UP-TO-DATE   AVAILABLE   AGE
nginx   3/3                              5m

[desk@cli] $ k edit deploy nginx -n server

# In the Pod template (spec.template.spec), add:
#   runtimeClassName: not-trusted

Reference:

https://kubernetes.io/docs/concepts/containers/runtime-class/

Question 11

You can switch the cluster/configuration context using the following command:

[desk@cli] $ kubectl config use-context dev

A default-deny NetworkPolicy avoids accidentally exposing a Pod in a namespace that doesn't have any other NetworkPolicy defined.

Task: Create a new default-deny NetworkPolicy named deny-network in the namespace test for all traffic of type Ingress + Egress.

The new NetworkPolicy must deny all Ingress + Egress traffic in the namespace test.

Apply the newly created default-deny NetworkPolicy to all Pods running in namespace test.

You can find a skeleton manifest file at /home/cert_masters/network-policy.yaml

Answer:

See the explanation below.

Explanation:

master1 $ k get pods -n test --show-labels

NAME       READY   STATUS    RESTARTS   AGE   LABELS
test-pod   1/1     Running              34s   role=test,run=test-pod
testing    1/1     Running              17d   run=testing

master1 $ vim netpol.yaml

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: deny-network
  namespace: test
spec:
  podSelector: {}
  policyTypes:
  - Ingress
  - Egress

master1 $ k apply -f netpol.yaml

Reference:

https://kubernetes.io/docs/concepts/services-networking/network-policies/

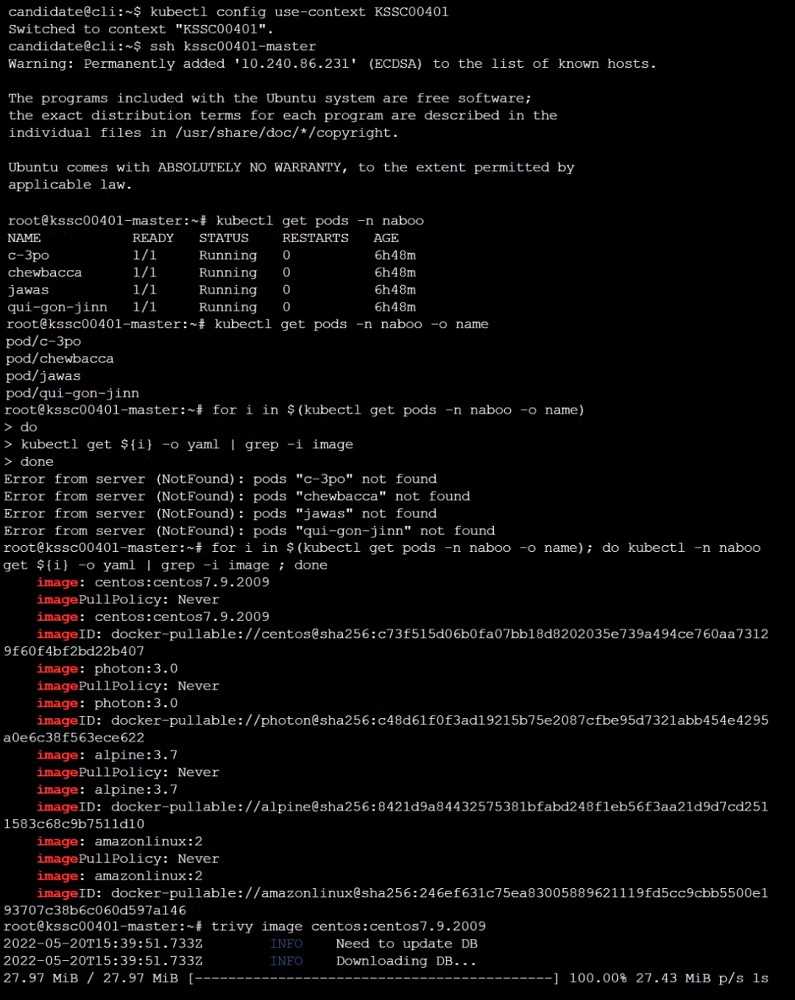

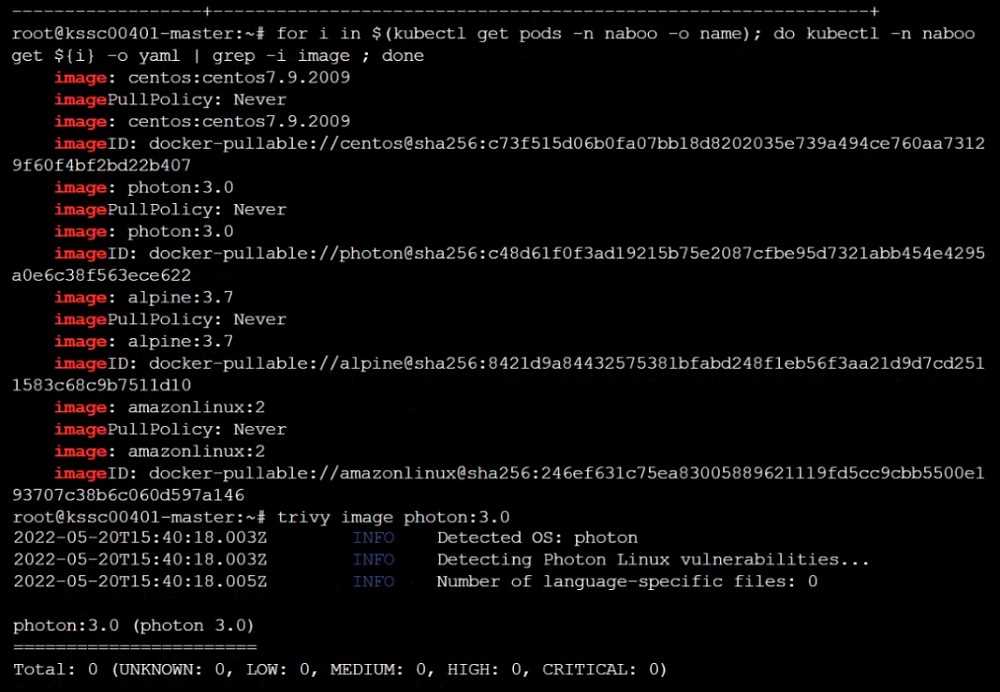

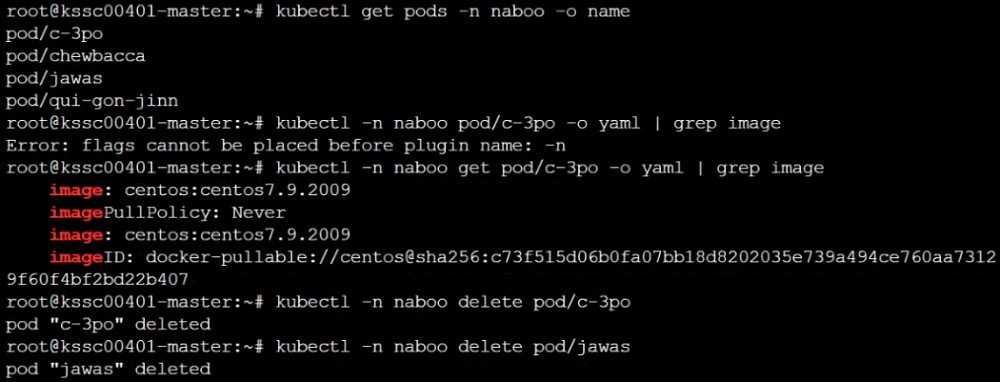

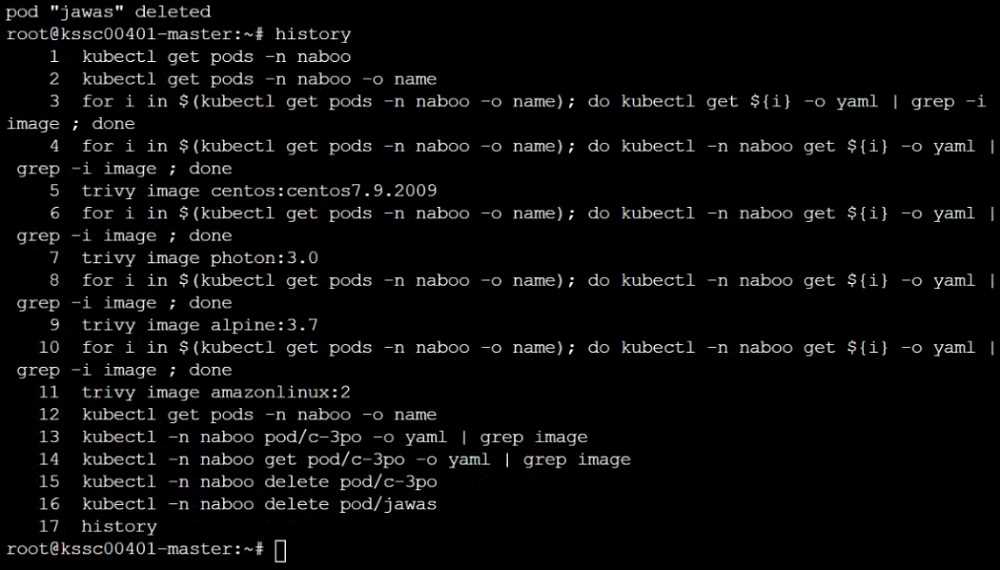

Question 12

Cluster: scanner

Master node: controlplane

Worker node: worker1

You can switch the cluster/configuration context using the following command:

[desk@cli] $ kubectl config use-context scanner

Given:

You may use Trivy's documentation.

Task:

Use the Trivy open-source container scanner to detect images with severe vulnerabilities used by Pods in the namespace nato.

Look for images with High or Critical severity vulnerabilities and delete the Pods that use those images.

Trivy is pre-installed on the cluster's master node. Run Trivy from the master node.

Answer:

See the explanation below.

Explanation:
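One possible approach, sketched as a shell function: list each Pod with its images, scan every image with Trivy, and print the Pods whose images report HIGH or CRITICAL findings. The function name `find_vulnerable_pods` and the exit code 3 are hypothetical choices for illustration. A dedicated exit code is used because Trivy also exits non-zero on its own errors, and those should not be mistaken for findings.

```shell
# Print the names of Pods in namespace $1 that use at least one image with
# HIGH or CRITICAL vulnerabilities according to Trivy.
find_vulnerable_pods() {
  kubectl get pods -n "$1" \
    -o jsonpath='{range .items[*]}{.metadata.name}{" "}{.spec.containers[*].image}{"\n"}{end}' |
  while read -r pod images; do
    for image in $images; do
      rc=0
      # --exit-code 3: Trivy exits with 3 only when matching findings exist.
      trivy image --quiet --severity HIGH,CRITICAL --exit-code 3 "$image" \
        >/dev/null 2>&1 </dev/null || rc=$?
      if [ "$rc" -eq 3 ]; then
        echo "$pod"
        break   # one vulnerable image is enough to flag the Pod
      fi
    done
  done
}

# On the master node:
#   find_vulnerable_pods nato
#   kubectl delete pod -n nato <pod-name>
```

The sketch only looks at regular containers; init containers would need the same treatment if the Pods use them.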

Reference:

https://github.com/aquasecurity/trivy

Question 13

Cluster: dev

Master node: master1

Worker node: worker1

You can switch the cluster/configuration context using the following command:

[desk@cli] $ kubectl config use-context dev

Task:

Retrieve the content of the existing secret named adam in the safe namespace.

Store the username field in a file named /home/cert-masters/username.txt, and the password field in a file named /home/cert-masters/password.txt.

1. You must create both files; they don't exist yet.

2. Do not use/modify the created files in the following steps, create new temporary files if needed.

Create a new secret named newsecret in the safe namespace, with the following content:

Username: dbadmin

Password: moresecurepas

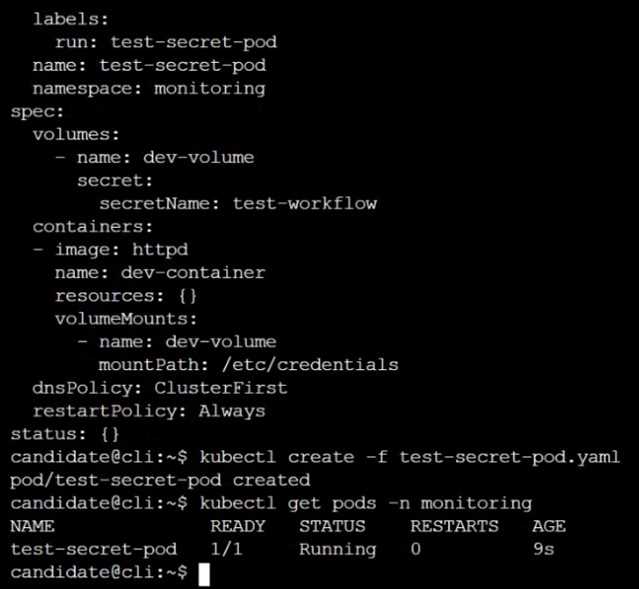

Finally, create a new Pod that has access to the secret newsecret via a volume:

Namespace: safe

Pod name: mysecret-pod

Container name: db-container

Image: redis

Volume name: secret-vol

Mount path: /etc/mysecret

Answer:

See the explanation below.

Explanation:

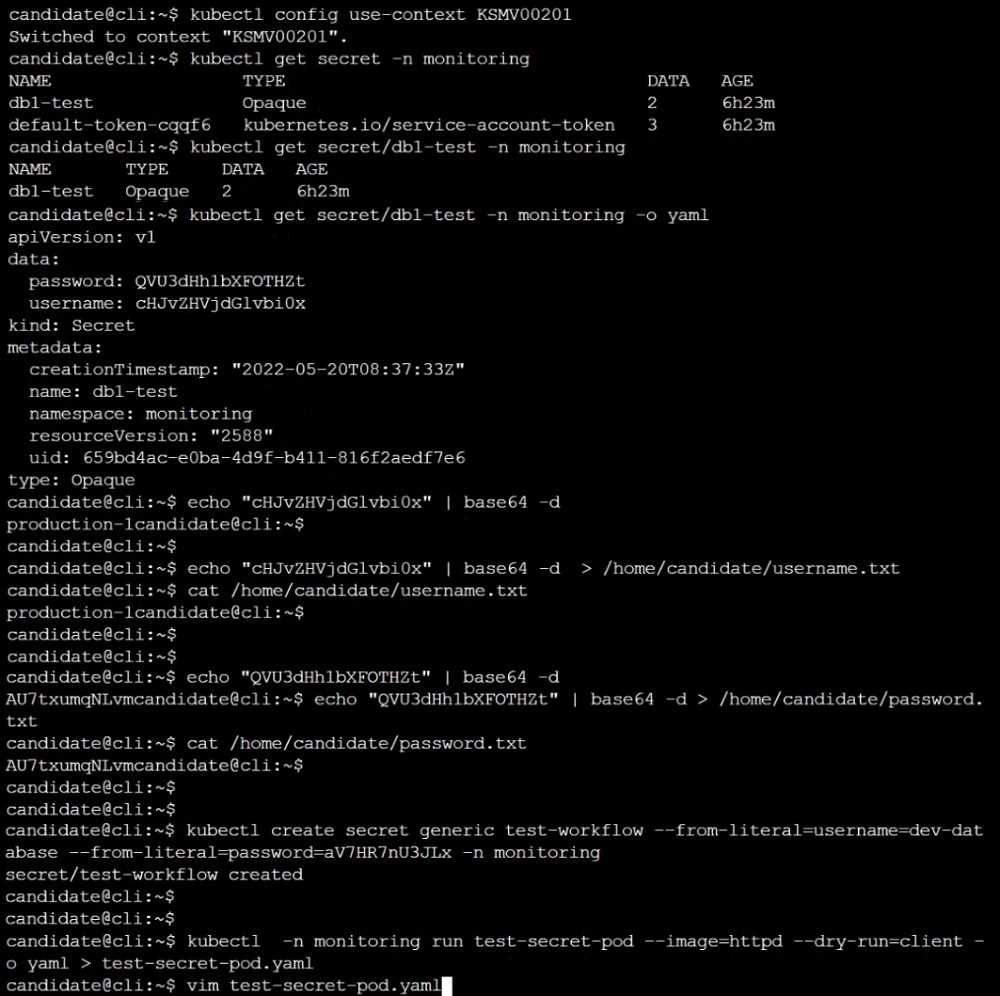

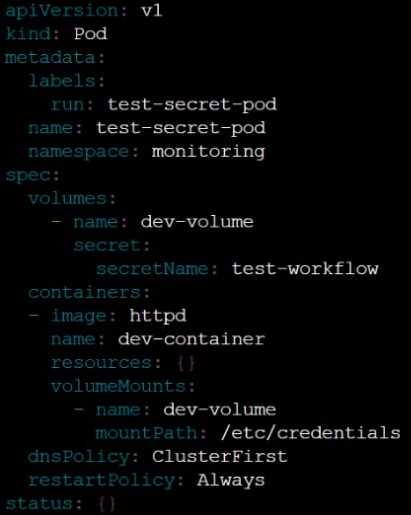
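The steps could be carried out roughly as below. The helper `save_secret_field` is a hypothetical name introduced for illustration, and the lowercase data keys `username` and `password` are assumed from the task wording; check the real keys with `kubectl get secret adam -n safe -o yaml` first.

```shell
# Decode one field of a Secret into a file.
# Arguments: namespace, secret name, data key, output path.
save_secret_field() {
  kubectl get secret "$2" -n "$1" -o "jsonpath={.data.$3}" | base64 -d > "$4"
}

# On the cluster:
#   save_secret_field safe adam username /home/cert-masters/username.txt
#   save_secret_field safe adam password /home/cert-masters/password.txt
#   kubectl create secret generic newsecret -n safe \
#     --from-literal=username=dbadmin --from-literal=password=moresecurepas

# Pod that mounts newsecret as a volume, using the names from the task.
cat > mysecret-pod.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: mysecret-pod
  namespace: safe
spec:
  containers:
  - name: db-container
    image: redis
    volumeMounts:
    - name: secret-vol
      mountPath: /etc/mysecret
      readOnly: true
  volumes:
  - name: secret-vol
    secret:
      secretName: newsecret
EOF
#   kubectl apply -f mysecret-pod.yaml
```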

Question 14

You must complete this task on the following cluster/nodes:

Cluster: trace

Master node: master

Worker node: worker1

You can switch the cluster/configuration context using the following command:

[desk@cli] $ kubectl config use-context trace

Given: You may use Sysdig or Falco documentation.

Task:

Use detection tools to detect anomalies like processes spawning and executing something weird frequently in the single container belonging to Pod tomcat.

Two tools are available to use:

1. falco

2. sysdig

Tools are pre-installed on the worker1 node only.

Analyse the container’s behaviour for at least 40 seconds, using filters that detect newly spawning and executing processes.

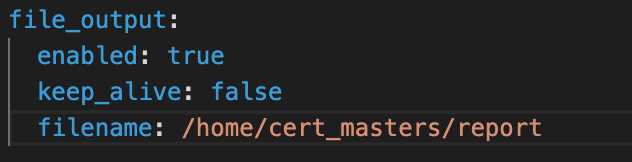

Store an incident file at /home/cert_masters/report, in the following format:

[timestamp],[uid],[processName]

Note: Make sure to store incident file on the cluster's worker node, don't move it to master node.

Answer:

See the explanation below.

Explanation:

$ vim /etc/falco/falco_rules.local.yaml

- rule: Container Drift Detected (open+create)
  desc: New executable created in a container due to open+create
  condition: >
    evt.type in (open,openat,creat) and
    evt.is_open_exec=true and
    container and
    not runc_writing_exec_fifo and
    not runc_writing_var_lib_docker and
    not user_known_container_drift_activities and
    evt.rawres>=0
  # Add this output format (refer to the Falco documentation)
  output: >
    %evt.time,%user.uid,%proc.name
  priority: ERROR

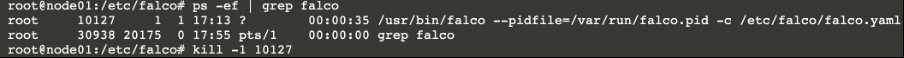

$ kill -1 <PID of falco>  # signal 1 is SIGHUP, which makes Falco re-read its configuration

Explanation

[desk@cli] $ ssh node01

[node01@cli] $ vim /etc/falco/falco_rules.yaml

Search for the rule "Container Drift Detected (open+create)" and copy it into falco_rules.local.yaml.

[node01@cli] $ vim /etc/falco/falco_rules.local.yaml

Paste the rule and change its output field to %evt.time,%user.uid,%proc.name, as in the rule listed above.

[node01@cli] $ vim /etc/falco/falco.yaml

send HUP signal to falco process to re-read the configuration
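The task also allows sysdig, which can write the report in the requested format directly. The following is a sketch under assumptions: the function name `capture_spawned` is hypothetical, and the container ID has to be looked up on worker1 first because the text does not give it. The flags used are `-M` (stop after the given number of seconds) and `-p` (output format).

```shell
# Capture process executions in one container for a fixed duration.
# $1 = container ID as sysdig reports it, $2 = seconds to capture
capture_spawned() {
  sysdig -M "$2" -p '%evt.time,%user.uid,%proc.name' \
    "container.id=$1 and evt.type=execve"
}

# On worker1, as root (replace the placeholder with the real container ID):
#   capture_spawned <container-id> 40 > /home/cert_masters/report
```

Each output line then has the form timestamp,uid,processName, matching the incident file format the task describes.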

Reference:

https://falco.org/docs/alerts/

https://falco.org/docs/rules/supported-fields/

Question 15

You can switch the cluster/configuration context using the following command:

[desk@cli] $ kubectl config use-context qa

Context:

A Pod fails to run because of an incorrectly specified ServiceAccount.

Task:

Create a new ServiceAccount named backend-qa in the existing namespace qa, which must not have access to any secret.

Edit the frontend Pod YAML to use the backend-qa ServiceAccount.

Note: You can find the frontend Pod YAML at /home/cert_masters/frontend-pod.yaml

Answer:

See the explanation below.

Explanation:

[desk@cli] $ k create sa backend-qa -n qa
serviceaccount/backend-qa created

[desk@cli] $ k get role,rolebinding -n qa
No resources found in qa namespace.

# The Role lists no secrets, so the ServiceAccount gets no access to them
[desk@cli] $ k create role backend -n qa --resource pods,namespaces,configmaps --verb list
role.rbac.authorization.k8s.io/backend created

[desk@cli] $ k create rolebinding backend -n qa --role backend --serviceaccount qa:backend-qa
rolebinding.rbac.authorization.k8s.io/backend created

[desk@cli] $ vim /home/cert_masters/frontend-pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: frontend
spec:
  serviceAccountName: backend-qa # Add this
  containers:
  - image: nginx
    name: frontend

[desk@cli] $ k apply -f /home/cert_masters/frontend-pod.yaml -n qa
pod/frontend created

Reference:

https://kubernetes.io/docs/tasks/configure-pod-container/configure-service-account/