Linux Foundation CKA Exam Questions

Questions for the CKA were updated on: Feb 20, 2026

Page 1 out of 6. Viewing questions 1-15 out of 83

Question 1

SIMULATION

Quick Reference Documentation: ConfigMaps, Deployments, Namespace

You must connect to the correct host. Failure to do so may result in a zero score.

[candidate@base] $ ssh cka000048b

Task

An NGINX Deployment named nginx-static is running in the nginx-static namespace. It is configured using a ConfigMap named nginx-config.

First, update the nginx-config ConfigMap to also allow TLSv1.2 connections.

You may re-create, restart, or scale resources as necessary.

You can use the following command to test the changes:

[candidate@cka000048b] $ curl --tls-max 1.2 https://web.k8s.local

Answer:

See the solution below.

Explanation:

Task Summary

SSH into cka000048b

Update the nginx-config ConfigMap in the nginx-static namespace to allow TLSv1.2

Ensure the nginx-static Deployment picks up the new config

Verify the change using the provided curl command

Step-by-Step Instructions

Step 1: SSH into the correct host

ssh cka000048b

Step 2: Get the ConfigMap

kubectl get configmap nginx-config -n nginx-static -o yaml > nginx-config.yaml

Open the file for editing:

nano nginx-config.yaml

Look for the TLS configuration in the data field. You are likely to find something like:

ssl_protocols TLSv1.3;

Modify it to include TLSv1.2 as well:

ssl_protocols TLSv1.2 TLSv1.3;

Save and exit the file.

Now update the ConfigMap:

kubectl apply -f nginx-config.yaml
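If you would rather not edit interactively, the same change can be scripted. A minimal sketch, assuming the exported nginx-config.yaml contains the directive exactly as ssl_protocols TLSv1.3; (allow_tls12 is a hypothetical helper name, and sed -i is the GNU sed syntax found on typical Linux hosts):

```shell
#!/usr/bin/env bash
# Hypothetical helper: add TLSv1.2 to an "ssl_protocols TLSv1.3;" directive.
# Assumes the directive appears in exactly that form in the target file.
allow_tls12() {
  local file="$1"
  # GNU sed: -i rewrites the file in place; the dot in "1.3" is escaped
  # so it only matches a literal dot.
  sed -i 's/ssl_protocols TLSv1\.3;/ssl_protocols TLSv1.2 TLSv1.3;/' "$file"
}
```

For example, run allow_tls12 nginx-config.yaml and then kubectl apply -f nginx-config.yaml. Check the result with grep ssl_protocols nginx-config.yaml before applying, because the expression silently changes nothing if the directive is written differently.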

Step 3: Restart the NGINX pods to pick up the new ConfigMap

NGINX reads its configuration only at startup or on an explicit reload. Even though the kubelet eventually refreshes files from a ConfigMap volume (but not subPath mounts), the running NGINX processes will not apply the change on their own.

The safest way is to restart the pods:

Option 1: Roll the deployment

kubectl rollout restart deployment nginx-static -n nginx-static

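Option 2: Delete the pods to force recreation (this assumes the pods carry the label app=nginx-static; check with kubectl get pods -n nginx-static --show-labels)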

kubectl delete pod -n nginx-static -l app=nginx-static

Step 4: Verify using curl

Use the provided curl command to confirm that TLS 1.2 is accepted:

curl --tls-max 1.2 https://web.k8s.local

A successful response means the TLS configuration is correct.

Final Command Summary

ssh cka000048b

kubectl get configmap nginx-config -n nginx-static -o yaml > nginx-config.yaml

nano nginx-config.yaml # Modify to include "ssl_protocols TLSv1.2 TLSv1.3;"

kubectl apply -f nginx-config.yaml

kubectl rollout restart deployment nginx-static -n nginx-static

# or

kubectl delete pod -n nginx-static -l app=nginx-static

curl --tls-max 1.2 https://web.k8s.local

Question 2

SIMULATION

You must connect to the correct host.

Failure to do so may result in a zero score.

[candidate@base] $ ssh cka000055

Task

Verify the cert-manager application which has been deployed to your cluster.

Using kubectl, create a list of all cert-manager Custom Resource Definitions (CRDs) and save it to ~/resources.yaml.

You must use kubectl's default output format.

Do not set an output format.

Failure to do so will result in a reduced score.

Using kubectl, extract the documentation for the subject specification field of the Certificate Custom Resource and save it to ~/subject.yaml.

Answer:

See the solution below.

Explanation:

Task Summary

You need to:

SSH into the correct node: cka000055

Use kubectl to list all cert-manager CRDs, and save that list to ~/resources.yaml

Do not use any output format flags like -o yaml

Extract the documentation for the spec.subject field of the Certificate custom resource and save it to ~/subject.yaml

Step-by-Step Instructions

Step 1: SSH into the node

ssh cka000055

Step 2: List cert-manager CRDs and save to a file

First, identify all cert-manager CRDs:

kubectl get crds | grep cert-manager

Then extract them without specifying an output format:

kubectl get crds | grep cert-manager | awk '{print $1}' | xargs kubectl get crd > ~/resources.yaml

This saves the default kubectl get output to the required file without formatting flags.
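grep cert-manager matches the text anywhere on a line. A slightly stricter sketch keeps only CRD names ending in .cert-manager.io, which covers both the cert-manager.io and acme.cert-manager.io groups (e.g. certificates.cert-manager.io). It assumes the default kubectl get crds output with a header row, and cert_manager_crd_names is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read default `kubectl get crds` output on stdin
# (header row first) and print only names in a *.cert-manager.io group.
cert_manager_crd_names() {
  awk 'NR > 1 && $1 ~ /\.cert-manager\.io$/ { print $1 }'
}
```

Used as kubectl get crds | cert_manager_crd_names | xargs kubectl get crd > ~/resources.yaml, the result is still kubectl's default output format: the filter only selects names and never sets -o.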

Step 3: Get documentation for spec.subject in the Certificate CRD

Run the following command:

kubectl explain certificate.spec.subject > ~/subject.yaml

This extracts the field documentation and saves it to the specified file.

If you're not sure of the resource, verify it exists:

kubectl get crd certificates.cert-manager.io

Final Command Summary

ssh cka000055

kubectl get crds | grep cert-manager | awk '{print $1}' | xargs kubectl get crd > ~/resources.yaml

kubectl explain certificate.spec.subject > ~/subject.yaml

Question 3

SIMULATION

You must connect to the correct host.

Failure to do so may result in a zero score.

[candidate@base] $ ssh cka000059

Context

A kubeadm-provisioned cluster was migrated to a new machine. It needs configuration changes to run successfully.

Task

Fix a single-node cluster that got broken during machine migration.

First, identify the broken cluster components and investigate what breaks them.

The decommissioned cluster used an external etcd server.

Next, fix the configuration of all broken cluster components.

Answer:

See the solution below.

Explanation:

Task Summary

SSH into node: cka000059

Cluster was migrated to a new machine

It uses an external etcd server

Identify and fix misconfigured components

Bring the cluster back to a healthy state

Step-by-Step Solution

Step 1: SSH into the correct host

ssh cka000059

Step 2: Check the cluster status

Run:

kubectl get nodes

If it fails, the kubelet or kube-apiserver is likely broken.

Check kubelet status:

sudo systemctl status kubelet

Also, check pod statuses in the control plane:

sudo crictl ps -a | grep kube

or:

docker ps -a | grep kube

Look especially for failures in kube-apiserver or kube-controller-manager.

Step 3: Inspect the kube-apiserver manifest

Since this is a kubeadm-based cluster, manifests are in:

ls /etc/kubernetes/manifests

Open kube-apiserver.yaml:


sudo nano /etc/kubernetes/manifests/kube-apiserver.yaml

Look for the --etcd-servers= flag. If the external etcd endpoint has changed (likely, due to the migration), this needs to be fixed.

Example of incorrect configuration:

--etcd-servers=https://192.168.1.100:2379

If the IP has changed, update it to the correct IP or hostname of the external etcd server.

Also ensure the correct client certificate and key paths are still valid:

--etcd-cafile=/etc/kubernetes/pki/etcd/ca.crt

--etcd-certfile=/etc/kubernetes/pki/apiserver-etcd-client.crt

--etcd-keyfile=/etc/kubernetes/pki/apiserver-etcd-client.key

If the files are missing or the path is wrong due to migration, correct those as well.
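Before editing, keep a copy of the manifest outside /etc/kubernetes/manifests, since the kubelet may try to run any manifest file it finds in that directory. Inspecting and rewriting the etcd endpoint can then be scripted. A minimal sketch, where get_etcd_servers and set_etcd_servers are hypothetical helper names and the replacement endpoint is whatever your investigation shows to be correct:

```shell
#!/usr/bin/env bash
# Hypothetical helpers for a kube-apiserver static pod manifest.
# Print the current value of the --etcd-servers flag.
get_etcd_servers() {
  sed -n 's/.*--etcd-servers=\([^[:space:]]*\).*/\1/p' "$1"
}

# Replace the --etcd-servers value in place (GNU sed -i) with $2.
# "#" is used as the sed delimiter because the URL contains slashes.
set_etcd_servers() {
  sed -i "s#--etcd-servers=[^[:space:]]*#--etcd-servers=$2#" "$1"
}
```

For example, as root: get_etcd_servers /etc/kubernetes/manifests/kube-apiserver.yaml, then set_etcd_servers /etc/kubernetes/manifests/kube-apiserver.yaml https://<etcd-host>:2379 (2379 is etcd's standard client port; the host stays a placeholder). The other flags in the file are left untouched.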

Step 4: Save and exit, and let static pod restart

The kubelet watches /etc/kubernetes/manifests and recreates a static pod automatically when its manifest changes; it can take a minute or so for the new container to appear.

Check again:

docker ps | grep kube-apiserver

# or

crictl ps | grep kube-apiserver

Step 5: Confirm API is healthy

Once kube-apiserver is up, try:

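kubectl get --raw='/readyz?verbose'

(kubectl get componentstatuses still works but has been deprecated since Kubernetes v1.19; /readyz reports the API server's own checks, including etcd.)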

kubectl get nodes

If these commands work and return valid statuses, the control plane is functional again.

Step 6: Check controller-manager and scheduler (optional)

If still broken, check the other static pods in /etc/kubernetes/manifests/ and correct paths if necessary.

Also verify that /etc/kubernetes/kubelet.conf and /etc/kubernetes/admin.conf are present and valid.

Command Summary

ssh cka000059

# Check system and kubelet

sudo systemctl status kubelet

docker ps -a | grep kube # or crictl ps -a | grep kube

# Check manifests

ls /etc/kubernetes/manifests

sudo nano /etc/kubernetes/manifests/kube-apiserver.yaml

# Fix --etcd-servers and certificate paths if needed

# Watch pods restart and confirm:

kubectl get nodes

kubectl get --raw='/readyz?verbose'

Question 4

SIMULATION

You must connect to the correct host.

Failure to do so may result in a zero score.

[candidate@base] $ ssh cka000056

Task

Review and apply the appropriate NetworkPolicy from the provided YAML samples.

Ensure that the chosen NetworkPolicy is not overly permissive, but allows communication between the frontend and backend Deployments, which run in the frontend and backend namespaces respectively.

First, analyze the frontend and backend Deployments to determine the specific requirements for the NetworkPolicy that needs to be applied.

Next, examine the NetworkPolicy YAML samples located in the ~/netpol folder.

Failure to comply may result in a reduced score.

Do not delete or modify the provided samples. Only apply one of them.

Finally, apply the NetworkPolicy that enables communication between the frontend and backend Deployments, without being overly permissive.

Answer:

See the solution below.

Explanation:

Task Summary

Connect to host cka000056

Review existing frontend and backend Deployments

Choose one correct NetworkPolicy from the ~/netpol directory

The policy must:

Allow traffic only from the frontend Deployment to the backend Deployment

Avoid being overly permissive

Apply the correct NetworkPolicy without modifying any sample files

Step-by-Step Instructions

Step 1: SSH into the correct node

ssh cka000056

Step 2: Inspect the frontend Deployment

Check the labels used in the frontend Deployment:

kubectl get deployment -n frontend -o yaml

Look under metadata.labels or spec.template.metadata.labels. Note the app or similar label (e.g., app: frontend); the pod template labels are the ones a NetworkPolicy actually selects on.

Step 3: Inspect the backend Deployment

kubectl get deployment -n backend -o yaml

Again, find the labels assigned to the pods (e.g., app: backend).

Step 4: List and review the provided NetworkPolicies

List the available files:

ls ~/netpol

Check the contents of each policy file:

cat ~/netpol/<file-name>.yaml

Look for a policy that:

Has kind: NetworkPolicy

Applies to the backend namespace

Uses a podSelector that matches the backend pods

Includes an ingress.from rule that references the frontend namespace using a namespaceSelector (and optionally a podSelector)

Does not allow traffic from all namespaces or all pods
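With several samples, a quick grep-based triage can help decide which files deserve a closer look. A rough sketch, not a substitute for reading each file: it prints each file's metadata.namespace and flags any empty selector ({}), which in spec.podSelector selects every pod in the namespace and inside a from entry admits every pod of the policy's namespace (podSelector: {}) or every namespace (namespaceSelector: {}). netpol_triage is a hypothetical helper name, and it assumes one policy per file:

```shell
#!/usr/bin/env bash
# Hypothetical helper: rough triage of NetworkPolicy sample files.
# For each file, print its metadata.namespace and flag any empty selector
# ("podSelector: {}" or "namespaceSelector: {}"). Assumes one policy per file.
netpol_triage() {
  local f ns
  for f in "$@"; do
    # Take the first "namespace:" key indented under the top-level metadata block.
    ns=$(awk '/^metadata:/ { m = 1; next }
              m && /^[^ ]/ { m = 0 }
              m && $1 == "namespace:" { print $2; exit }' "$f")
    if grep -Eq '(namespaceSelector|podSelector): *\{\}' "$f"; then
      printf '%s ns=%s HAS-EMPTY-SELECTOR\n' "$f" "${ns:-?}"
    else
      printf '%s ns=%s\n' "$f" "${ns:-?}"
    fi
  done
}
```

Run it as netpol_triage ~/netpol/*.yaml, then read the unflagged files that target the backend namespace first.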

Here’s what to look for in a good match:

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-frontend-to-backend
  namespace: backend
spec:
  podSelector:
    matchLabels:
      app: backend
  ingress:
    - from:
        - namespaceSelector:
            matchLabels:
              name: frontend

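Even better if the policy combines both selectors in the same from entry (podSelector without its own leading dash), so a source pod must be in the frontend namespace and also carry the frontend label:

- namespaceSelector:
    matchLabels:
      name: frontend
  podSelector:
    matchLabels:
      app: frontend

This limits access to pods in the frontend namespace with a specific label. If podSelector had its own leading dash, the two selectors would be ORed instead, which is more permissive. The name: frontend label is only an example; compare it with kubectl get namespace frontend --show-labels (every namespace also carries kubernetes.io/metadata.name automatically).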

Step 5: Apply the correct NetworkPolicy

Once you’ve identified the best match, apply it:

kubectl apply -f ~/netpol/<chosen-file>.yaml

Apply only one file. Do not alter or delete any existing sample.


Command Summary

ssh cka000056

kubectl get deployment -n frontend -o yaml

kubectl get deployment -n backend -o yaml

ls ~/netpol

cat ~/netpol/*.yaml # Review carefully

kubectl apply -f ~/netpol/<chosen-file>.yaml

Question 5

SIMULATION

You must connect to the correct host.

Failure to do so may result in a zero score.

[candidate@base] $ ssh cka000047

Task

A MariaDB Deployment in the mariadb namespace has been deleted by mistake. Your task is to restore the Deployment ensuring data persistence. Follow these steps:

Create a PersistentVolumeClaim (PVC) named mariadb in the mariadb namespace with the following specifications:

Access mode ReadWriteOnce

Storage 250Mi

You must use the existing retained PersistentVolume (PV).

Failure to do so will result in a reduced score.

There is only one existing PersistentVolume.

Edit the MariaDB Deployment file located at ~/mariadb-deployment.yaml to use the PVC you created in the previous step.

Apply the updated Deployment file to the cluster.

Ensure the MariaDB Deployment is running and stable.

Answer:

See the solution below.

Explanation:

Task Overview

You're restoring a MariaDB deployment in the mariadb namespace with persistent data.

Tasks:

SSH into cka000047

Create a PVC named mariadb:

Namespace: mariadb

Access mode: ReadWriteOnce

Storage: 250Mi

Use the existing retained PV (there’s only one)

Edit ~/mariadb-deployment.yaml to use the PVC

Apply the deployment

Verify MariaDB is running and stable

Step-by-Step Solution

Step 1: SSH into the correct host

ssh cka000047

Required: skipping this step results in a zero score.

Step 2: Inspect the existing PersistentVolume

kubectl get pv

Identify the only existing PV, for example:

NAME         CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM    STORAGECLASS
mariadb-pv   250Mi      RWO            Retain           Available   <none>   manual

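Ensure the status is Available and the PV is not already bound to a claim. If the STATUS is Released instead, the PV still holds a claimRef to the deleted claim and will not bind to a new PVC until that reference is cleared:

kubectl patch pv <pv-name> -p '{"spec":{"claimRef":null}}'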

Step 3: Create the PVC to bind the retained PV

Create a file mariadb-pvc.yaml:

cat <<EOF > mariadb-pvc.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mariadb
  namespace: mariadb
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 250Mi
  storageClassName: manual   # Must match the PV's STORAGECLASS column ("" if it has none)
  volumeName: mariadb-pv     # Match the PV name exactly
EOF

Apply the PVC:

kubectl apply -f mariadb-pvc.yaml

This binds the PVC to the retained PV; kubectl get pvc -n mariadb should then show STATUS Bound.

Step 4: Edit the MariaDB Deployment YAML

Open the file:

nano ~/mariadb-deployment.yaml

Look under the spec.template.spec.containers[].volumeMounts and spec.template.spec.volumes sections and update them like so:

Add this under the container:


volumeMounts:
  - name: mariadb-storage
    mountPath: /var/lib/mysql

And under the pod spec:

volumes:
  - name: mariadb-storage
    persistentVolumeClaim:
      claimName: mariadb

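These lines mount the PVC at the MariaDB data directory. If the file already defines a volume for /var/lib/mysql, point that volume at the PVC instead of adding a second one.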

Step 5: Apply the updated Deployment

kubectl apply -f ~/mariadb-deployment.yaml

Step 6: Verify the Deployment is running and stable

kubectl get pods -n mariadb

kubectl describe pod -n mariadb <mariadb-pod-name>

Ensure the pod is in the Running state and the volume is mounted.

Final Command Summary

ssh cka000047

kubectl get pv # Find the retained PV

# Create PVC

cat <<EOF > mariadb-pvc.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mariadb
  namespace: mariadb
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 250Mi
  storageClassName: manual   # Must match the PV's STORAGECLASS
  volumeName: mariadb-pv
EOF

kubectl apply -f mariadb-pvc.yaml

# Edit Deployment

nano ~/mariadb-deployment.yaml

# Add volumeMount and volume to use the PVC as described

kubectl apply -f ~/mariadb-deployment.yaml

kubectl get pods -n mariadb

Question 6

SIMULATION

You must connect to the correct host.

Failure to do so may result in a zero score.

[candidate@base] $ ssh cka000060

Task

Install Argo CD in the cluster by performing the following tasks:

Add the official Argo CD Helm repository with the name argo

The Argo CD CRDs have already been pre-installed in the cluster

Generate a template of the Argo CD Helm chart version 7.7.3 for the argocd namespace and save it to ~/argo-helm.yaml. Configure the chart to not install CRDs.

Answer:

See the solution below.

Explanation:

Task Summary

SSH into cka000060

Add the Argo CD Helm repo named argo

Generate a manifest (~/argo-helm.yaml) for Argo CD version 7.7.3

Target namespace: argocd

Do not install CRDs

Just generate, don’t install

Step-by-Step Solution

Step 1: SSH into the correct host

ssh cka000060

Required: skipping this step results in a zero score.

Step 2: Add the Argo CD Helm repository

helm repo add argo https://argoproj.github.io/argo-helm

helm repo update

This adds the official Argo Helm chart source.

Step 3: Generate the Argo CD Helm chart template (version 7.7.3)

Use the helm template command to generate a manifest and write it to ~/argo-helm.yaml.

helm template argocd argo/argo-cd \
  --version 7.7.3 \
  --namespace argocd \
  --set crds.install=false \
  > ~/argo-helm.yaml

argocd → Release name (can be anything; here it matches the namespace)

--set crds.install=false → Disables CRD installation

> ~/argo-helm.yaml → Save to required file

Step 4: Verify the generated file (optional but recommended)

head ~/argo-helm.yaml

Check that it contains valid Kubernetes YAML and does not include CRDs.
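head only shows the first ten lines, so it cannot prove the CRDs are absent. A minimal sketch that counts CustomResourceDefinition documents in the rendered file instead (count_crds is a hypothetical helper name; it assumes each document's kind: line starts at column 0, as top-level fields do in helm template output):

```shell
#!/usr/bin/env bash
# Hypothetical helper: count CustomResourceDefinition documents in a
# rendered manifest such as ~/argo-helm.yaml.
count_crds() {
  # grep -c prints "0" but exits 1 when nothing matches; "|| true" keeps
  # that case from counting as an error without changing the output.
  grep -c '^kind: CustomResourceDefinition$' "$1" || true
}
```

count_crds ~/argo-helm.yaml should print 0 when crds.install=false took effect.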

Final Command Summary

ssh cka000060

helm repo add argo https://argoproj.github.io/argo-helm

helm repo update

helm template argocd argo/argo-cd \
  --version 7.7.3 \
  --namespace argocd \
  --set crds.install=false \
  > ~/argo-helm.yaml

head ~/argo-helm.yaml # Optional verification

Question 7

SIMULATION

You must connect to the correct host.

Failure to do so may result in a zero score.

[candidate@base] $ ssh cka000049

Task

Perform the following tasks:

Create a new PriorityClass named high-priority for user-workloads with a value that is one less than the highest existing user-defined priority class value.

Patch the existing Deployment busybox-logger running in the priority namespace to use the high-priority priority class.

Answer:

See the solution below.

Explanation:

Task Summary

SSH into the correct node: cka000049

Find the highest existing user-defined PriorityClass

Create a new PriorityClass high-priority with a value one less

Patch Deployment busybox-logger (in namespace priority) to use this new PriorityClass

Step-by-Step Solution

Step 1: SSH into the correct node


ssh cka000049

Skipping this step results in a zero score.

Step 2: Find the highest existing user-defined PriorityClass

Run:


kubectl get priorityclasses.scheduling.k8s.io

Example output:


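NAME                      VALUE        GLOBAL-DEFAULT   AGE
default-low               1000         false            10d
mid-tier                  2000         false            7d
system-cluster-critical   2000000000   false            30d
system-node-critical      2000001000   false            30d

Exclude the built-in system-cluster-critical and system-node-critical classes (and any other system-* class). Every other class counts as user-defined, even one with GLOBAL-DEFAULT set to true.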

Let's assume the highest user-defined value is 2000.

So your new class should be:

Value = 1999
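Rather than reading the maximum off by eye, the value can be computed. A minimal sketch, assuming the input is kubectl get priorityclass --no-headers output (name in column 1, value in column 2) and that user-defined means any class whose name does not start with system-; next_priority_value is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get priorityclass --no-headers` lines on
# stdin, skip built-in system-* classes, and print (highest value - 1).
# Exits non-zero when no user-defined class is found.
next_priority_value() {
  awk '
    $1 !~ /^system-/ {
      v = $2 + 0                       # force a numeric comparison
      if (!seen || v > max) { max = v; seen = 1 }
    }
    END {
      if (!seen) exit 1
      print max - 1
    }'
}
```

For example, VALUE=$(kubectl get priorityclass --no-headers | next_priority_value), then use $VALUE as the value field in high-priority.yaml.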

Step 3: Create the high-priority PriorityClass

Create a file called high-priority.yaml:

cat <<EOF > high-priority.yaml
apiVersion: scheduling.k8s.io/v1
kind: PriorityClass
metadata:
  name: high-priority
value: 1999
globalDefault: false
description: "High priority class for user workloads"
EOF

Apply it:

kubectl apply -f high-priority.yaml

Step 4: Patch the busybox-logger Deployment

Now patch the existing Deployment in the priority namespace:

kubectl patch deployment busybox-logger -n priority \
  --type='merge' \
  -p '{"spec": {"template": {"spec": {"priorityClassName": "high-priority"}}}}'

Step 5: Verify your work

Confirm the patch was applied:

kubectl get deployment busybox-logger -n priority -o jsonpath='{.spec.template.spec.priorityClassName}'

You should see:

high-priority

Also, confirm the class exists:

kubectl get priorityclass high-priority

Final Command Summary

ssh cka000049

kubectl get priorityclass

# Create the new PriorityClass

cat <<EOF > high-priority.yaml
apiVersion: scheduling.k8s.io/v1
kind: PriorityClass
metadata:
  name: high-priority
value: 1999
globalDefault: false
description: "High priority class for user workloads"
EOF

kubectl apply -f high-priority.yaml

# Patch the deployment

kubectl patch deployment busybox-logger -n priority \
  --type='merge' \
  -p '{"spec": {"template": {"spec": {"priorityClassName": "high-priority"}}}}'

# Verify

kubectl get deployment busybox-logger -n priority -o jsonpath='{.spec.template.spec.priorityClassName}'

kubectl get priorityclass high-priority

Question 8

SIMULATION

You must connect to the correct host.

Failure to do so may result in a zero score.

[candidate@base] $ ssh cka000022

Task

Reconfigure the existing Deployment front-end in namespace spline-reticulator to expose port 80/tcp of the existing container nginx.

Create a new Service named front-end-svc exposing the container port 80/tcp.

Configure the new Service to also expose the individual Pods via a NodePort.

Answer:

See the solution below.

Explanation:

Task Summary

SSH into cka000022

Modify an existing Deployment:

Namespace: spline-reticulator

Deployment: front-end

Container: nginx

Expose: port 80/tcp

Create a Service:

Name: front-end-svc

Type: NodePort

Port: 80 → container port 80

Step-by-Step Solution

Step 1: SSH into the correct node

ssh cka000022

Skipping this step results in a zero score.

Step 2: Edit the Deployment to expose port 80

kubectl edit deployment front-end -n spline-reticulator

Under containers: → nginx, add this if not present:

ports:
  - containerPort: 80
    protocol: TCP

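Declaring containerPort records that the container exposes port 80/tcp, as the task requires. It is informational: nginx listens on port 80 whether or not the port is declared.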

Step 3: Create a NodePort Service

Create a file named front-end-svc.yaml:

cat <<EOF > front-end-svc.yaml
apiVersion: v1
kind: Service
metadata:
  name: front-end-svc
  namespace: spline-reticulator
spec:
  type: NodePort
  selector:
    app: front-end
  ports:
    - port: 80
      targetPort: 80
      protocol: TCP
EOF

Make sure the Service selector matches the labels on the Deployment's pod template (spec.template.metadata.labels), for example app: front-end. You can check them with:

kubectl get deployment front-end -n spline-reticulator -o jsonpath='{.spec.template.metadata.labels}'

Step 4: Apply the Service

kubectl apply -f front-end-svc.yaml

Step 5: Verify

Check if the service is created and has a NodePort assigned:

kubectl get svc front-end-svc -n spline-reticulator

You should see something like:

NAME            TYPE       CLUSTER-IP    EXTERNAL-IP   PORT(S)        AGE
front-end-svc   NodePort   10.96.0.123   <none>        80:3XXXX/TCP   10s

Where 3XXXX is your automatically assigned NodePort (between 30000–32767).

Final Command Summary

ssh cka000022

kubectl edit deployment front-end -n spline-reticulator

# Add:

# ports:

# - containerPort: 80

cat <<EOF > front-end-svc.yaml
apiVersion: v1
kind: Service
metadata:
  name: front-end-svc
  namespace: spline-reticulator
spec:
  type: NodePort
  selector:
    app: front-end
  ports:
    - port: 80
      targetPort: 80
      protocol: TCP
EOF

kubectl apply -f front-end-svc.yaml

kubectl get svc front-end-svc -n spline-reticulator

Question 9

SIMULATION

You must connect to the correct host.

Failure to do so may result in a zero score.

[candidate@base] $ ssh cka000046

Task

First, create a new StorageClass named local-path for an existing provisioner named rancher.io/local-path.

Set the volume binding mode to WaitForFirstConsumer.

Not setting the volume binding mode or setting it to anything other than WaitForFirstConsumer may result in a reduced score.

Next, configure the StorageClass local-path as the default StorageClass.

Answer:

See the solution below.

Explanation:

Task Summary

You need to:

SSH into cka000046

Create a StorageClass named local-path using the provisioner rancher.io/local-path

Set the volume binding mode to WaitForFirstConsumer

Make this StorageClass the default

Step-by-Step Solution

Step 1: SSH into the correct host

ssh cka000046

Required: skipping this step results in a zero score.

Step 2: Create a StorageClass YAML file

Create a file named local-path-sc.yaml:

cat <<EOF > local-path-sc.yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: local-path
  annotations:
    storageclass.kubernetes.io/is-default-class: "true"
provisioner: rancher.io/local-path
volumeBindingMode: WaitForFirstConsumer
EOF

This:

Sets WaitForFirstConsumer (as required)

Marks the class as default using the correct annotation

Step 3: Apply the StorageClass

kubectl apply -f local-path-sc.yaml

Step 4: Verify it’s the default StorageClass

kubectl get storageclass

You should see local-path with a (default) marker:

NAME                   PROVISIONER             RECLAIMPOLICY   VOLUMEBINDINGMODE      ALLOWVOLUMEEXPANSION   AGE
local-path (default)   rancher.io/local-path   Delete          WaitForFirstConsumer   false                  10s
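Only one StorageClass should carry the default annotation; if another class was already the default, both will show (default). A minimal sketch that lists the classes currently marked as default, assuming the standard kubectl get storageclass output with a header row (default_storageclasses is a hypothetical helper name):

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get storageclass` output on stdin and
# print the name of every class marked "(default)" (the token after the name).
default_storageclasses() {
  awk 'NR > 1 && $2 == "(default)" { print $1 }'
}
```

If kubectl get storageclass | default_storageclasses prints anything besides local-path, remove the flag from the other class with kubectl patch storageclass <other-class> -p '{"metadata": {"annotations": {"storageclass.kubernetes.io/is-default-class": "false"}}}', the approach the Kubernetes documentation uses when changing the default StorageClass.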

Final Command Summary

ssh cka000046

cat <<EOF > local-path-sc.yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: local-path
  annotations:
    storageclass.kubernetes.io/is-default-class: "true"
provisioner: rancher.io/local-path
volumeBindingMode: WaitForFirstConsumer
EOF

kubectl apply -f local-path-sc.yaml

kubectl get storageclass

Question 10

SIMULATION

You must connect to the correct host.

Failure to do so may result in a zero score.

[candidate@base] $ ssh cka000054

Context:

Your cluster's CNI has failed a security audit. It has been removed. You must install a new CNI that can enforce network policies.

Task

Install and set up a Container Network Interface (CNI) that meets these requirements:

Pick and install one of these CNI options:

· Flannel version 0.26.1

Manifest:

https://github.com/flannel-io/flannel/releases/download/v0.26.1/kube-flannel.yml

· Calico version 3.28.2

Manifest:

https://raw.githubusercontent.com/projectcalico/calico/v3.28.2/manifests/tigera-operator.yaml

Answer:

See the solution below.

Explanation:

Task Summary

SSH into cka000054

Install a CNI plugin that supports NetworkPolicies

Two CNI options provided:

Flannel v0.26.1 (does NOT support NetworkPolicies)

Calico v3.28.2 (does support NetworkPolicies)

Decision Point: Which CNI to choose?

Choose Calico: of the two options, only Calico enforces NetworkPolicies natively. Flannel does not.

Step-by-Step Solution

Step 1: SSH into the correct node

ssh cka000054

Required: skipping this step results in a zero score.

Step 2: Install Calico CNI (v3.28.2)

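Use the official manifest provided. Calico's documentation installs it with kubectl create rather than kubectl apply, because the bundled CRDs are large enough that kubectl apply can exceed its size limits:

kubectl create -f https://raw.githubusercontent.com/projectcalico/calico/v3.28.2/manifests/tigera-operator.yaml

This installs the Tigera operator and Calico's CRDs. The operator deploys the full Calico CNI stack only after an Installation resource exists; Calico's quickstart creates it from the matching custom-resources.yaml:

kubectl create -f https://raw.githubusercontent.com/projectcalico/calico/v3.28.2/manifests/custom-resources.yaml

Its default pod CIDR is 192.168.0.0/16. If the cluster was initialized with a different pod network CIDR (check --cluster-cidr in /etc/kubernetes/manifests/kube-controller-manager.yaml), download the file and adjust the cidr field before creating it.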
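Calico's quickstart pairs tigera-operator.yaml with a custom-resources.yaml from the same release, whose Installation resource sets the pod CIDR (192.168.0.0/16 by default). If your cluster uses a different pod CIDR, the edit can be scripted. A minimal sketch, assuming the file has been downloaded locally and holds a single cidr: entry (set_calico_cidr is a hypothetical helper name):

```shell
#!/usr/bin/env bash
# Hypothetical helper: set the IP pool CIDR in a downloaded Calico
# custom-resources.yaml. Assumes the pool is written as "cidr: <a.b.c.d/n>".
set_calico_cidr() {
  local file="$1" cidr="$2"
  # -E enables the (...) group; \1 keeps "cidr: " so only the value changes.
  # GNU sed -i edits the file in place.
  sed -i -E "s#(cidr: )[0-9./]+#\1${cidr}#" "$file"
}
```

For example: curl -LO https://raw.githubusercontent.com/projectcalico/calico/v3.28.2/manifests/custom-resources.yaml, then set_calico_cidr custom-resources.yaml 10.244.0.0/16 (an example value only; use your cluster's CIDR), then kubectl create -f custom-resources.yaml.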

Step 3: Wait for Calico components to come up

Check the pods in tigera-operator and calico-system namespaces:

kubectl get pods -n tigera-operator

kubectl get pods -n calico-system

You should see pods like:

calico-kube-controllers

calico-node

calico-typha

tigera-operator

Wait for all to be in Running state.

Step 4 (Optional): Confirm Calico's policy CRDs are installed

You can check:

kubectl get crds | grep networkpolicy

You should see:

networkpolicies.crd.projectcalico.org

This confirms Calico's CRDs are installed for policy enforcement.

Final Command Summary

ssh cka000054

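kubectl create -f https://raw.githubusercontent.com/projectcalico/calico/v3.28.2/manifests/tigera-operator.yaml

kubectl create -f https://raw.githubusercontent.com/projectcalico/calico/v3.28.2/manifests/custom-resources.yaml   # after checking its pod CIDR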

kubectl get pods -n tigera-operator

kubectl get pods -n calico-system

kubectl get crds | grep networkpolicy

Question 11

SIMULATION

You must connect to the correct host.

Failure to do so may result in a zero score.

[candidate@base] $ ssh cka000037

Context

A legacy app needs to be integrated into the Kubernetes built-in logging architecture (i.e. kubectl logs). Adding a streaming co-located container is a good and common way to accomplish this requirement.

Task

Update the existing Deployment synergy-leverager, adding a co-located container named sidecar using the busybox:stable image to the existing Pod. The new co-located container has to run the following command:

/bin/sh -c "tail -n+1 -f /var/log/synergy-leverager.log"

Use a Volume mounted at /var/log to make the log file synergy-leverager.log available to the co-located container.

Do not modify the specification of the existing container other than adding the required volume mount.

Failure to do so may result in a reduced score.

Answer:

See the solution below.

Explanation:

Task Summary

SSH into the correct node: cka000037

Modify existing deployment synergy-leverager

Add a sidecar container:

Name: sidecar

Image: busybox:stable

Command:

/bin/sh -c "tail -n+1 -f /var/log/synergy-leverager.log"

Use a shared volume mounted at /var/log

Don't touch existing container config except adding volume mount

Step-by-Step Solution

Step 1: SSH into the correct node

ssh cka000037

Skipping this will result in a zero score.

Step 2: Edit the Deployment

kubectl edit deployment synergy-leverager

This opens the deployment YAML in your default editor (vi or similar).

Step 3: Modify the spec as follows

Inside the spec.template.spec, do these 3 things:

A. Define a shared volume

Add under volumes: (at the same level as containers):

volumes:
  - name: log-volume
    emptyDir: {}

B. Add a volume mount to the existing container

Locate the existing container under containers: and add this:

volumeMounts:
  - name: log-volume
    mountPath: /var/log

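Do not change any other configuration for this container. If it already has a volumeMounts list, append this entry to it instead of adding a second volumeMounts key.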

C. Add the sidecar container

Still inside containers:, add the new container definition after the first one:

- name: sidecar
  image: busybox:stable
  command:
    - /bin/sh
    - -c
    - "tail -n+1 -f /var/log/synergy-leverager.log"
  volumeMounts:
    - name: log-volume
      mountPath: /var/log

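Put together, the relevant part of spec.template.spec looks like this (main-container and your-existing-image stand in for the existing container's actual name and image, which must stay unchanged):

spec:
  containers:
    - name: main-container
      image: your-existing-image
      volumeMounts:
        - name: log-volume
          mountPath: /var/log
    - name: sidecar
      image: busybox:stable
      command:
        - /bin/sh
        - -c
        - "tail -n+1 -f /var/log/synergy-leverager.log"
      volumeMounts:
        - name: log-volume
          mountPath: /var/log
  volumes:
    - name: log-volume
      emptyDir: {}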

Save and exit. If using vi or vim, type :wq and press Enter.

Step 4: Verify

Check the updated pods (this assumes they carry the label app=synergy-leverager):

kubectl get pods -l app=synergy-leverager

Pick a pod name and describe it:

kubectl describe pod <pod-name>

Confirm:

2 containers running (main-container + sidecar)

Volume mounted at /var/log

kubectl logs <pod-name> -c sidecar prints the contents of synergy-leverager.log

Command Summary

ssh cka000037

kubectl edit deployment synergy-leverager

# Modify as explained above

kubectl get pods -l app=synergy-leverager

kubectl describe pod <pod-name>

Question 12

SIMULATION

You must connect to the correct host.

Failure to do so may result in a zero score.

[candidate@base] $ ssh cka000058

Context

You manage a WordPress application. Some Pods are not starting because resource requests are too high.

Task

A WordPress application in the relative-fawn namespace consists of:

· A WordPress Deployment with 3 replicas.

Adjust all Pod resource requests as follows:

· Divide node resources evenly across all 3 Pods.

· Give each Pod a fair share of CPU and memory.

Answer:

See the solution below.

Explanation:

Task Summary

You are managing a WordPress Deployment in namespace relative-fawn.

Deployment has 3 replicas.

Pods are not starting due to high resource requests.

Your job:

Adjust CPU and memory requests so that all 3 pods evenly split the node’s capacity.

Step-by-Step Solution

Step 1: SSH into the correct host


ssh cka000058

Skipping this will result in a zero score.

Step 2: Check node resource capacity

You need to know the node’s CPU and memory resources.


kubectl describe node | grep -E -A6 "Capacity|Allocatable"

Example output:


Capacity:

cpu: 3

memory: 3Gi

Let’s assume the node has:

3 CPUs

3Gi memory

So for 3 pods, divide evenly:

CPU request per pod: 1

Memory request per pod: 1Gi

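In the actual exam, check the real values and divide accordingly. Use the Allocatable figures rather than Capacity, and leave room for what other pods on the node already request (listed under Allocated resources in kubectl describe node); otherwise the third pod may still not fit. For example, if the node had 4 CPUs and 8Gi allocatable and nothing else running, you would request about 1333m CPU and 2730Mi memory per pod (rounded down).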
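The arithmetic can be scripted. A minimal sketch, assuming you read the allocatable CPU in millicores and memory in Mi from kubectl describe node (e.g. 4 CPUs = 4000m, 8Gi = 8192Mi) and optionally subtract what other pods already request; split_resources is a hypothetical helper name, and rounding down keeps the three pods within the node:

```shell
#!/usr/bin/env bash
# Hypothetical helper: split a node's allocatable CPU/memory evenly across
# N replicas. Arguments: CPU in millicores, memory in Mi, replica count,
# and optionally CPU (m) and memory (Mi) already requested by other pods.
split_resources() {
  local cpu_m=$1 mem_mi=$2 replicas=$3
  local used_cpu_m=${4:-0} used_mem_mi=${5:-0}
  # Shell arithmetic is integer-only and rounds down, so N pods never
  # request more than what is left on the node.
  echo "cpu=$(( (cpu_m - used_cpu_m) / replicas ))m memory=$(( (mem_mi - used_mem_mi) / replicas ))Mi"
}
```

split_resources 4000 8192 3 prints cpu=1333m memory=2730Mi; passing 100 and 192 as the last two arguments (hypothetical requests already taken by other pods) prints cpu=1300m memory=2666Mi.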

Step 3: Edit the Deployment

Edit the WordPress deployment in the relative-fawn namespace:

kubectl edit deployment wordpress -n relative-fawn

Look for the resources section under spec.template.spec.containers like this:

resources:
  requests:
    cpu: "1"
    memory: "1Gi"

If the section doesn’t exist, add it manually.

Save and exit the editor (:wq if using vi).

Step 4: Confirm changes

Wait a few seconds, then check:

kubectl get pods -n relative-fawn

Ensure all 3 pods are in Running state.

You can also describe a pod to confirm resource requests are set:

kubectl describe pod <pod-name> -n relative-fawn | grep -A5 "Containers"

Command Summary

ssh cka000058

kubectl describe node | grep -A5 "Capacity"

kubectl edit deployment wordpress -n relative-fawn

# Set CPU: 1, Memory: 1Gi (or according to node capacity)

kubectl get pods -n relative-fawn

Question 13

SIMULATION

You must connect to the correct host.

Failure to do so may result in a zero score.

[candidate@base] $ ssh cka000051

Context

Your task is to prepare a Linux system for Kubernetes. Docker is already installed, but you need to configure it for kubeadm.

Task

Complete these tasks to prepare the system for Kubernetes:

Set up cri-dockerd:

. Install the Debian package ~/cri-dockerd_0.3.9.3-0.ubuntu-jammy_amd64.deb (Debian packages are installed using dpkg)

. Enable and start the cri-docker service

Configure these system parameters:

. Set net.bridge.bridge-nf-call-iptables to 1

Answer:

See the

solution below.

Explanation:

Task Summary

You are given a host to prepare for Kubernetes:

Use dpkg to install cri-dockerd

Enable and start the cri-docker service

Set net.bridge.bridge-nf-call-iptables to 1 via sysctl

Step-by-Step Instructions

Step 1: SSH into the correct node


ssh cka000051

⚠️

Required: failing to connect to the correct host results in a zero score.

Step 2: Install cri-dockerd

You are told the .deb file is already located at:


~/cri-dockerd_0.3.9.3-0.ubuntu-jammy_amd64.deb

Install it with dpkg:


sudo dpkg -i ~/cri-dockerd_0.3.9.3-0.ubuntu-jammy_amd64.deb

If dpkg reports unmet dependencies, let apt resolve them:


sudo apt-get install -f -y

In the exam environment, the package's dependencies are usually already installed.

Step 3: Enable and start the cri-docker service

Enable and start both the service and its socket unit:


sudo systemctl enable cri-docker.service

sudo systemctl enable --now cri-docker.socket

sudo systemctl start cri-docker.service

Check status (optional but smart):


sudo systemctl status cri-docker.service

You should see it active (running).

Step 4: Configure the sysctl parameter

Set net.bridge.bridge-nf-call-iptables=1 immediately and persistently.

Step A: Apply immediately:

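sudo sysctl net.bridge.bridge-nf-call-iptables=1

If sysctl reports that /proc/sys/net/bridge/bridge-nf-call-iptables does not exist, load the br_netfilter kernel module first (sudo modprobe br_netfilter) and re-run the command; the net.bridge.* keys only appear once that module is loaded. To load it at boot as well, list br_netfilter in a file under /etc/modules-load.d/.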

Step B: Persist it in /etc/sysctl.d:

Create or modify a file:

echo "net.bridge.bridge-nf-call-iptables = 1" | sudo tee /etc/sysctl.d/k8s.conf

Reload sysctl:

sudo sysctl --system

Verify:

sysctl net.bridge.bridge-nf-call-iptables

Should return:

net.bridge.bridge-nf-call-iptables = 1
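The tee command in Step B overwrites /etc/sysctl.d/k8s.conf entirely. If that file might already hold other settings, a small helper can update just one key. This is a sketch; persist_sysctl is a hypothetical name, and it must run as root when pointed at a file under /etc/sysctl.d.

```shell
#!/usr/bin/env bash
# Hypothetical helper: make sure "KEY = VALUE" appears exactly once in a sysctl drop-in
# file while keeping any other settings already in that file.
# Usage (as root): persist_sysctl /etc/sysctl.d/k8s.conf net.bridge.bridge-nf-call-iptables 1
persist_sysctl() {
  local file="$1" key="$2" value="$3" tmp
  tmp=$(mktemp)
  touch "$file"
  # Copy every line except existing assignments to the same key ("key=..." or "key = ...").
  awk -v k="$key" '{
    name = $0
    sub(/^[ \t]+/, "", name)     # strip leading blanks
    sub(/[ \t]*=.*$/, "", name)  # keep only the part before "="
    if (name != k || index($0, "=") == 0) print
  }' "$file" > "$tmp"
  printf '%s = %s\n' "$key" "$value" >> "$tmp"
  # Write back in place, which keeps the original file's owner and permissions.
  cat "$tmp" > "$file"
  rm -f "$tmp"
}
```

Running it twice with the same key leaves a single line, so it is safe to repeat; follow it with sudo sysctl --system as in Step B.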

✅

Now the system is ready for kubeadm with Docker (via cri-dockerd)!

Final Answer Summary

ssh cka000051

sudo dpkg -i ~/cri-dockerd_0.3.9.3-0.ubuntu-jammy_amd64.deb

sudo systemctl enable cri-docker.service

sudo systemctl enable --now cri-docker.socket

sudo systemctl start cri-docker.service

sudo sysctl net.bridge.bridge-nf-call-iptables=1

echo "net.bridge.bridge-nf-call-iptables = 1" | sudo tee /etc/sysctl.d/k8s.conf

sudo sysctl --system

Question 14

SIMULATION

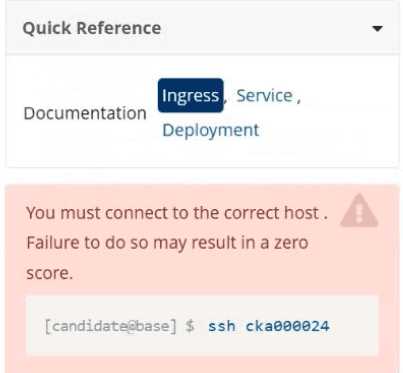

Task

Create a new Ingress resource as follows:

. Name: echo

. Namespace: sound-repeater

. Exposing Service echoserver-service on http://example.org/echo using Service port 8080

The availability of Service echoserver-service can be checked using the following command, which should return 200:

[candidate@cka000024] $ curl -o /dev/null -s -w "%{http_code}\n" http://example.org/echo

Answer:

See the

solution below.

Explanation:

Task Summary

Create an Ingress named echo in the sound-repeater namespace that:

Routes requests to /echo on host example.org

Forwards traffic to service echoserver-service

Uses service port 8080

Verification should return HTTP 200 using curl

✅

Step-by-Step Answer

Step 1: SSH into the correct node

As shown in the image:


ssh cka000024

⚠️

Skipping this will result in a ZERO score!

Step 2: Verify the namespace and service

Ensure the sound-repeater namespace and echoserver-service exist:

kubectl get svc -n sound-repeater

Look for:

echoserver-service ClusterIP ... 8080/TCP

Step 3: Create the Ingress manifest

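Create a YAML file: echo-ingress.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: echo
  namespace: sound-repeater
spec:
  rules:
  - host: example.org
    http:
      paths:
      - path: /echo
        pathType: Prefix
        backend:
          service:
            name: echoserver-service
            port:
              number: 8080

No rewrite-target annotation is needed here: the task only asks to expose the Service at /echo, and with ingress-nginx a rewrite to /$1 only makes sense when the path defines a regex capture group. If the cluster has no default IngressClass, also set spec.ingressClassName to a class listed by kubectl get ingressclass.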

Step 4: Apply the Ingress resource

kubectl apply -f echo-ingress.yaml
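As an alternative to writing the manifest by hand, kubectl create ingress can build an equivalent object. This sketch assumes kubectl's host/path=service:port rule syntax, where a trailing * on the path selects pathType Prefix; the function name create_echo_ingress is hypothetical. Previewing with --dry-run=client -o yaml first is a cheap way to confirm the generated pathType before creating anything.

```shell
#!/usr/bin/env bash
# Sketch: imperative equivalent of echo-ingress.yaml.
# Rule format is host/path=service:port; the trailing "*" asks for pathType Prefix
# (without it the path is created as pathType Exact).
# Extra arguments (e.g. --dry-run=client -o yaml) are passed through to kubectl.
create_echo_ingress() {
  kubectl create ingress echo -n sound-repeater \
    --rule='example.org/echo*=echoserver-service:8080' "$@"
}
```

Run create_echo_ingress --dry-run=client -o yaml to inspect the result, then create_echo_ingress to create it.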

Step 5: Test with curl as instructed

Use the exact verification command:

curl -o /dev/null -s -w "%{http_code}\n" http://example.org/echo

✅

You should see:

200
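An ingress controller can take a few seconds to pick up a new Ingress, so the first curl may not return 200 yet. A minimal retry sketch around the same curl check; wait_for_http_200 and its default attempt count and delay are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: repeat the task's curl check until it answers 200 or attempts run out.
# Usage: wait_for_http_200 <url> [attempts] [sleep_seconds]
# Returns 0 on the first 200, 1 if every attempt returned something else.
wait_for_http_200() {
  local url="$1" attempts="${2:-10}" delay="${3:-2}" code i
  for (( i = 1; i <= attempts; i++ )); do
    code=$(curl -o /dev/null -s -w "%{http_code}" "$url")
    echo "attempt $i: HTTP $code"
    [ "$code" = "200" ] && return 0
    sleep "$delay"
  done
  return 1
}
```

For example, wait_for_http_200 http://example.org/echo retries for about 20 seconds before giving up.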

✅

Final Answer Summary

ssh cka000024

kubectl get svc -n sound-repeater

# Create the Ingress YAML

cat <<'EOF' > echo-ingress.yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: echo
  namespace: sound-repeater
spec:
  rules:
  - host: example.org
    http:
      paths:
      - path: /echo
        pathType: Prefix
        backend:
          service:
            name: echoserver-service
            port:
              number: 8080
EOF

kubectl apply -f echo-ingress.yaml

curl -o /dev/null -s -w "%{http_code}\n" http://example.org/echo

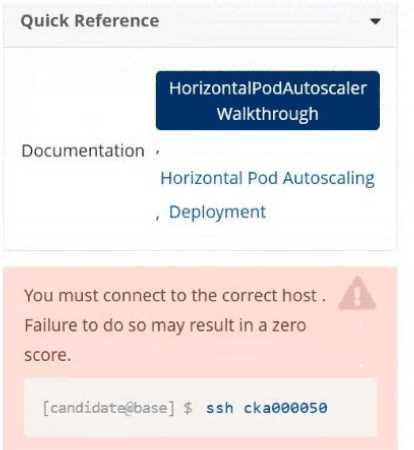

Question 15

SIMULATION

Task

Create a new HorizontalPodAutoscaler (HPA) named apache-server in the autoscale namespace. This HPA must target the existing Deployment called apache-server in the autoscale namespace.

Set the HPA to aim for 50% CPU usage per Pod. Configure it to have at least 1 Pod and no more than 4 Pods. Also, set the downscale stabilization window to 30 seconds.

Answer:

See the

solution below.

Explanation:

Task Summary

Create an HPA named apache-server in the autoscale namespace.

Target an existing deployment also named apache-server.

CPU target: 50%

Pod range: min 1, max 4

Downscale stabilization window: 30 seconds

Step-by-Step Answer

Step 1: Connect to the correct host

This is critical, as shown in the warning image.

ssh cka000050

Skipping this may result in zero for this question!

Step 2: Verify the deployment exists

kubectl get deployment apache-server -n autoscale

Make sure it’s there before creating the HPA. If it’s missing, the HPA is still created but has no target to scale.

⚙️

Step 3: Create the HPA

We will use the kubectl autoscale command for a quick setup, then patch it to add the stabilization window (since kubectl autoscale doesn't include it).

kubectl autoscale deployment apache-server \
  --namespace autoscale \
  --cpu-percent=50 \
  --min=1 \
  --max=4

Step 4: Add the downscale stabilization window

You’ll need to patch the HPA to include the stabilization window of 30s.

Create a patch file called hpa-patch.yaml:

spec:
  behavior:
    scaleDown:
      stabilizationWindowSeconds: 30

Apply the patch:


kubectl patch hpa apache-server \
  -n autoscale \
  --patch "$(cat hpa-patch.yaml)"
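As an alternative to kubectl autoscale followed by kubectl patch, the whole HPA can be declared in one autoscaling/v2 manifest, with the scale-down window set directly under spec.behavior. A sketch; the function name hpa_manifest is hypothetical, and the values mirror the task.

```shell
#!/usr/bin/env bash
# Sketch: print the complete HPA (target, CPU goal, replica range, scale-down window)
# as a single autoscaling/v2 manifest. Apply with: hpa_manifest | kubectl apply -f -
hpa_manifest() {
  cat <<'EOF'
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: apache-server
  namespace: autoscale
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: apache-server
  minReplicas: 1
  maxReplicas: 4
  metrics:
  - type: Resource
    resource:
      name: cpu
      target:
        type: Utilization
        averageUtilization: 50
  behavior:
    scaleDown:
      stabilizationWindowSeconds: 30
EOF
}
```

Keeping the manifest in one place means the stabilization window cannot be forgotten in a separate patch step.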

✅

Step 5: Confirm your work


kubectl describe hpa apache-server -n autoscale

Look for:

Min replicas: 1 and Max replicas: 4

A CPU metric target of 50% (shown as a percentage of request)

A 30-second stabilization window under Behavior > Scale Down

Final Answer Summary

ssh cka000050

kubectl get deployment apache-server -n autoscale

kubectl autoscale deployment apache-server \
  --namespace autoscale \
  --cpu-percent=50 \
  --min=1 \
  --max=4

# Patch to add stabilization window

cat <<EOF > hpa-patch.yaml
spec:
  behavior:
    scaleDown:
      stabilizationWindowSeconds: 30
EOF

kubectl patch hpa apache-server -n autoscale --patch "$(cat hpa-patch.yaml)"