Huawei H13-311-V3-5 Exam Questions

Questions for the H13-311-V3-5 were updated on: Feb 18, 2026


Question 1

DRAG DROP

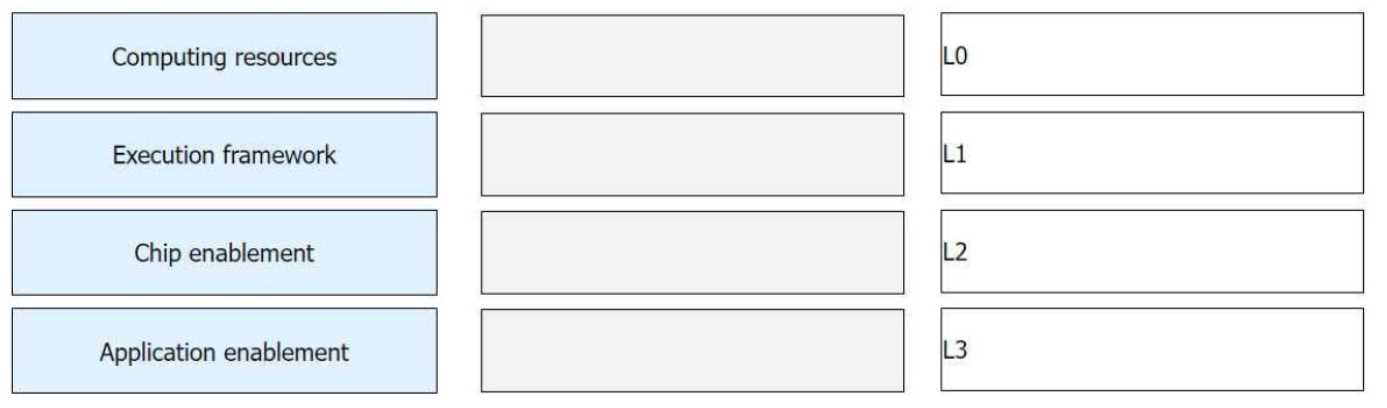

Correctly connect the layers in the architecture of an Ascend AI Processor.

Answer:

See the explanation below.

Explanation:

To correctly connect the layers in the architecture of an Ascend AI Processor, use the following hierarchy, from the bottom layer (L0) to the top layer (L3):

Computing resources: This is the lowest level of the architecture and maps directly to the physical hardware. Match it with L0, the hardware layer where the basic computing units (such as the AI Core and AI CPU) reside.

Chip enablement: This layer consists of the low-level software (drivers, runtime, and operator libraries) that interacts directly with the chip hardware to enable its functions. Every higher-level process depends on it. Link it with L1.

Execution framework: This is the middle layer that lets models developed in AI frameworks such as Huawei's MindSpore, TensorFlow, or PyTorch be loaded and executed on the processor. Connect it to L2.

Application enablement: This is the topmost layer, where AI capabilities are used in application-specific contexts, building on the frameworks and computing resources provided by the lower layers. Match it with L3, the highest level of abstraction in the architecture.

This hierarchy reflects how each layer builds on the layers below it to enable more complex functionality and user interaction in processors such as Huawei's Ascend.

Question 2

DRAG DROP

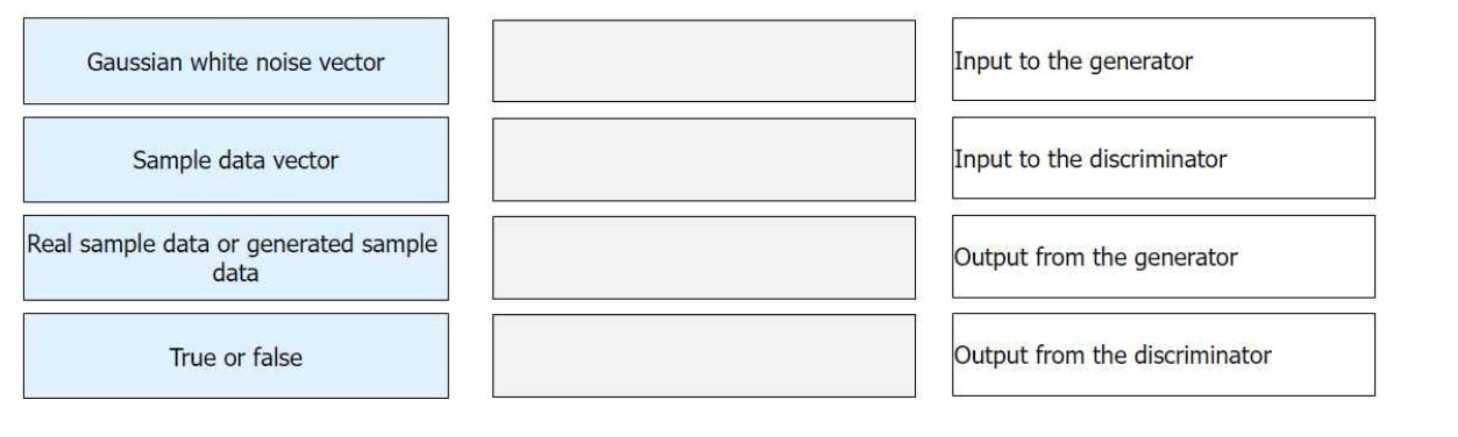

Match the input and output of a generative adversarial network (GAN).

Answer:

See the explanation below.

Explanation:

The inputs and outputs of a generative adversarial network (GAN) are matched as follows:

Gaussian white noise vector: This is the input to the generator, which transforms this random noise vector into synthetic data.

Sample data vector: This is the output of the generator. The generator creates synthetic data that mimics the real training data, and this data is then fed into the discriminator.

Real sample data or generated sample data: This is the input to the discriminator. The discriminator receives either real data from the training set or fake data produced by the generator and must judge its authenticity.

True or false: This is the output of the discriminator, a judgment about whether the data it received (from the real dataset or from the generator) is real (true) or fake (false).

These matches reflect how GANs operate: the generator creates data and the discriminator evaluates it.
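To make the data flow concrete, here is a minimal plain-Python sketch, assuming a one-layer generator and a logistic discriminator with random, untrained weights. The function names, the dimensions, and the hand-written "real" sample are illustrative only, and the adversarial training of the two networks is omitted; the sketch shows only what goes in and what comes out of each network.

```python
import math
import random

def generator(z, weights):
    """Map a noise vector z to a synthetic sample vector (one linear layer + tanh)."""
    return [math.tanh(sum(w * zi for w, zi in zip(row, z))) for row in weights]

def discriminator(x, weights):
    """Map a sample vector x to P(real) in (0, 1) with a logistic output."""
    logit = sum(w * xi for w, xi in zip(weights, x))
    return 1.0 / (1.0 + math.exp(-logit))

random.seed(0)
NOISE_DIM, SAMPLE_DIM = 4, 3
# Randomly initialized (untrained) parameters; a real GAN trains both networks adversarially.
g_weights = [[random.gauss(0, 1) for _ in range(NOISE_DIM)] for _ in range(SAMPLE_DIM)]
d_weights = [random.gauss(0, 1) for _ in range(SAMPLE_DIM)]

z = [random.gauss(0, 1) for _ in range(NOISE_DIM)]  # Gaussian white noise vector (generator input)
fake = generator(z, g_weights)                       # sample data vector (generator output)
real = [0.5, -0.2, 0.1]                              # hypothetical stand-in for a real training sample

# Discriminator input: real or generated sample data; output: a true/false judgment.
for name, sample in [("real", real), ("generated", fake)]:
    p = discriminator(sample, d_weights)
    print(name, "-> judged", "true" if p > 0.5 else "false", f"(P(real) = {p:.3f})")
```

Because the weights are untrained, the judgments printed here are arbitrary; training pushes the discriminator toward correct judgments and the generator toward samples that fool it.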

Question 3

Which of the following are use cases of generative adversarial networks?

- A. Photo repair

- B. Generating face images

- C. Generating a 3D model from a 2D image

- D. Generating images from text

Answer:

A, B, C, D

Explanation:

Generative Adversarial Networks (GANs) are widely used in creative and image generation tasks, including:

A. Photo repair: GANs can be used to restore missing or damaged parts of images.

B. Generating face images: GANs are known for their ability to generate realistic face images.

C. Generating a 3D model from a 2D image: GANs can be used in applications that convert 2D images into 3D models.

D. Generating images from text: GANs can generate images from text descriptions, a task known as text-to-image synthesis.

All of the provided options are valid use cases of GANs.

HCIA AI Reference:

Deep Learning Overview: Discusses the architecture and use cases of GANs, including applications in image generation and creative content.

AI Development Framework: Covers the role of GANs in various generative tasks across industries.

Question 4

Which of the following are general quantum algorithms?

- A. HHL algorithm

- B. Shor algorithm

- C. Grover algorithm

- D. A* search algorithm

Answer:

A, B, C

Explanation:

The general quantum algorithms include:

A. HHL algorithm (Harrow-Hassidim-Lloyd): An algorithm for solving systems of linear equations on quantum computers.

B. Shor algorithm: A quantum algorithm for efficiently factoring large integers, which is important in cryptography.

C. Grover algorithm: A quantum search algorithm for unstructured database search, providing a quadratic speedup over classical search algorithms.

The A* search algorithm is not a quantum algorithm; it is a classical algorithm for finding the shortest path in a graph. Therefore, D is incorrect.
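The quadratic speedup of Grover's algorithm can be seen in a small classical state-vector simulation. This is a minimal sketch assuming a single marked item; `grover_search` is a hypothetical helper, not part of any quantum SDK. It starts from a uniform superposition and repeats the oracle (phase flip) and diffusion (inversion about the mean) steps about (π/4)·√N times.

```python
import math

def grover_search(n_qubits, marked):
    """Classically simulate Grover's algorithm on a 2**n_qubits state vector.

    Returns the probability of measuring the marked index and the iteration count.
    """
    n = 2 ** n_qubits
    amps = [1 / math.sqrt(n)] * n                         # uniform superposition
    iterations = math.floor(math.pi / 4 * math.sqrt(n))   # about (pi/4) * sqrt(N) oracle calls
    for _ in range(iterations):
        amps[marked] = -amps[marked]                      # oracle: flip the phase of the marked item
        mean = sum(amps) / n
        amps = [2 * mean - a for a in amps]               # diffusion: inversion about the mean
    return amps[marked] ** 2, iterations

prob, iters = grover_search(4, marked=5)
print(f"N=16: {iters} Grover iterations, P(marked) = {prob:.3f}")
```

For N = 16 the loop runs 3 times and the marked item is measured with a probability of about 0.96, whereas a classical linear scan needs 8 checks on average and up to 16 in the worst case.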

HCIA AI Reference:

Cutting-edge AI Applications: Discusses the potential of quantum algorithms in AI and other advanced computing applications.

Question 5

Which of the following does not belong to the process for constructing a knowledge graph?

- A. Determining the target domain of the knowledge graph

- B. Data acquisition

- C. Creating new concepts

- D. Knowledge fusion

Answer:

C

Explanation:

The process of constructing a knowledge graph typically involves several key steps:

A. Determining the target domain of the knowledge graph: This defines the scope and boundaries of the information to be represented.

B. Data acquisition: Involves gathering structured and unstructured data from various sources.

D. Knowledge fusion: This step integrates and reconciles data from multiple sources to create a consistent and coherent knowledge graph.

Creating new concepts is not typically part of the knowledge graph construction process. Knowledge graphs focus on extracting, integrating, and structuring existing knowledge, not on creating new concepts.
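Knowledge fusion can be illustrated with a deliberately simplified sketch: (subject, relation, object) triples from two sources are merged after mapping entity aliases to canonical names. The `fuse` helper and the alias table are hypothetical; real systems perform entity alignment and conflict resolution with far richer matching than a lookup table.

```python
def fuse(sources, aliases):
    """Merge (subject, relation, object) triples from several sources.

    `aliases` maps alternative entity names to one canonical name, a simplified
    stand-in for the entity-alignment step of knowledge fusion.
    """
    def canon(name):
        return aliases.get(name, name)

    fused = set()
    for triples in sources:
        for subj, rel, obj in triples:
            fused.add((canon(subj), rel, canon(obj)))  # identical facts collapse into one triple
    return fused

source_a = [("Huawei", "headquartered_in", "Shenzhen")]
source_b = [("Huawei Technologies", "headquartered_in", "Shenzhen"),
            ("Huawei Technologies", "develops", "MindSpore")]
aliases = {"Huawei Technologies": "Huawei"}

graph = fuse([source_a, source_b], aliases)
print(sorted(graph))
# [('Huawei', 'develops', 'MindSpore'), ('Huawei', 'headquartered_in', 'Shenzhen')]
```

The duplicate headquarters fact from the two sources becomes a single triple once both subject names resolve to the same entity.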

HCIA AI Reference:

AI Development Framework: Describes the steps in constructing knowledge graphs, from domain determination and data acquisition to knowledge fusion.

Question 6

Google proposed the concept of knowledge graph and took the lead in applying knowledge graphs to search engines in 2012, successfully improving users' search quality and experience.

- A. TRUE

- B. FALSE

Answer:

A

Explanation:

Google introduced the concept of the knowledge graph in 2012, and it played a significant role in improving the search engine's ability to understand the relationships between entities (e.g., people, places, and things). This allowed Google to provide richer, more relevant search results by moving from keyword-based search to a semantic understanding of the user's query. The knowledge graph organizes information in a structured way, making it easier for users to find relevant answers quickly and enhancing the overall search experience.

HCIA AI Reference:

AI Overview: Discusses the impact of knowledge graphs on search engines and their importance in improving AI-driven user experiences.

Cutting-edge AI Applications: Provides insights into how knowledge graphs are applied in AI systems to improve information retrieval.

Question 7

Which of the following are AI capabilities provided by the HMS Core?

- A. MindSpore Lite

- B. HiAI Foundation

- C. HiAI Engine

- D. ML Kit

Answer:

B, C, D

Explanation:

Huawei HMS Core (Huawei Mobile Services Core) provides a variety of AI capabilities, including:

HiAI Foundation: Offers basic AI infrastructure, enabling AI computing capabilities.

HiAI Engine: Provides pre-built AI engines for tasks like image processing and NLP.

ML Kit: Provides machine learning features for developers to integrate into apps.

MindSpore Lite is not part of HMS Core; it is a lightweight version of the MindSpore framework designed for mobile and edge devices.

Reference: Huawei HCIA-AI Certification, Huawei Mobile Services (HMS) Core AI Capabilities.

Question 8

Huawei Cloud ModelArts provides ModelBox for device-edge-cloud joint development. Which of the following are its optimization policies?

- A. Hardware affinity

- B. Operator optimization

- C. Automatic segmentation of operators

- D. Model replication

Answer:

A, B, C

Explanation:

Huawei Cloud ModelArts provides ModelBox, a tool for device-edge-cloud joint development that enables efficient deployment across multiple environments. Its key optimization policies include:

Hardware affinity: Ensures that the models are optimized to run efficiently on the target hardware.

Operator optimization: Improves the performance of AI operators for better model execution.

Automatic segmentation of operators: Automatically segments operators for optimized distribution across devices, edges, and clouds.

Model replication is not an optimization policy offered by ModelBox.

Reference: Huawei HCIA-AI Certification, Introduction to Huawei AI Platforms – ModelArts and ModelBox.

Question 9

Which of the following are covered by Huawei Cloud EIHealth?

- A. Drug R&D

- B. Clinical research

- C. Diagnosis and treatment

- D. Genome analysis

Answer:

A, B, C, D

Explanation:

Huawei Cloud EIHealth is a comprehensive platform that offers AI-powered solutions across healthcare-related fields such as:

Drug R&D: Accelerates drug discovery and development using AI.

Clinical research: Enhances research efficiency through AI data analysis.

Diagnosis and treatment: Provides AI-based diagnostic support and treatment recommendations.

Genome analysis: Uses AI to analyze genetic data for medical research and personalized medicine.

Reference: Huawei HCIA-AI Certification, Introduction to Huawei AI Platforms – Huawei Cloud EIHealth.

Question 10

Huawei Cloud EI provides knowledge graph, OCR, machine translation, and the Celia (virtual assistant) development platform.

- A. TRUE

- B. FALSE

Answer:

A

Explanation:

Huawei Cloud EI (Enterprise Intelligence) provides a variety of AI services and platforms, including knowledge graph, OCR (Optical Character Recognition), machine translation, and the Celia virtual assistant development platform. These services enable businesses to integrate AI capabilities such as language processing, image recognition, and virtual assistant development into their systems.

Reference: Huawei HCIA-AI Certification, Introduction to Huawei AI Platforms – Huawei Cloud EI.

Question 11

AI inference chips need to be optimized and are thus more complex than those used for training.

- A. TRUE

- B. FALSE

Answer:

B

Explanation:

AI inference chips are generally simpler than training chips because inference runs a trained model on new data, which requires far fewer computations than training. Training chips must also perform backpropagation, gradient calculations, and frequent parameter updates. Inference, by contrast, mostly involves forward-pass computations, so inference chips are optimized for speed and efficiency but are not more complex than training chips.

Thus, the statement is false: inference chips are optimized for simpler tasks than training chips.
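The difference in workload can be seen on a single linear layer. The following plain-Python sketch is a hypothetical toy model with a squared-error loss, not how AI chips are actually programmed: inference is only `forward`, while a training step adds gradient computation and a parameter update on top of the same forward pass. In a deep network the backward pass must also propagate gradients through every earlier layer, and a common rule of thumb puts a training step at roughly three times the cost of a forward pass.

```python
def forward(W, x):
    """Inference: a single forward pass y = W x (m * n multiplications)."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def loss(W, x, target):
    """Squared-error loss L = 0.5 * ||W x - target||^2."""
    return 0.5 * sum((yi - ti) ** 2 for yi, ti in zip(forward(W, x), target))

def train_step(W, x, target, lr=0.1):
    """Training: forward pass, then backward pass (gradients), then a parameter update."""
    y = forward(W, x)                              # forward: m * n multiplications
    dy = [yi - ti for yi, ti in zip(y, target)]    # dL/dy
    grad_W = [[d * xi for xi in x] for d in dy]    # backward: dL/dW = dy x^T, another m * n
    return [[w - lr * g for w, g in zip(row, grow)]  # update: another m * n
            for row, grow in zip(W, grad_W)]

W = [[0.0, 0.0], [0.0, 0.0]]
x, t = [1.0, 2.0], [1.0, -1.0]
before = loss(W, x, t)
for _ in range(20):
    W = train_step(W, x, t)
after = loss(W, x, t)
print(f"loss before training: {before:.3f}, after 20 steps: {after:.6f}")

# A deployed model only calls forward() with the trained W; no gradients are stored or computed.
prediction = forward(W, x)
```

Training repeats all three stages many times over a dataset, while inference runs only the first stage once per input, which is why inference hardware can be simpler.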

HCIA AI Reference:

Cutting-edge AI Applications: Describes the difference between AI inference and training chips, focusing on their respective optimizations.

Deep Learning Overview: Explains the distinction between the training and inference processes and how hardware is optimized for each.

Question 12

HarmonyOS can provide AI capabilities for external systems only through the integrated HMS Core.

- A. TRUE

- B. FALSE

Answer:

B

Explanation:

HarmonyOS provides AI capabilities not only through HMS Core (Huawei Mobile Services Core) but also through other system-level integrations and AI frameworks. HMS Core is one way to offer AI functionality, but HarmonyOS also has native support for AI processing that external systems or applications can access without going through HMS Core.

Thus, the statement is false as AI capabilities are not limited solely to HMS Core in HarmonyOS.

HCIA AI Reference:

Introduction to Huawei AI Platforms: Covers HarmonyOS and the various ways it provides AI capabilities to external systems.

Question 13

AI chips, also called AI accelerators, optimize matrix multiplication.

- A. TRUE

- B. FALSE

Answer:

A

Explanation:

AI chips, also known as AI accelerators, are specialized hardware designed to speed up AI workloads, particularly matrix multiplication, which is used heavily in machine learning and deep learning algorithms. These chips optimize matrix multiplication because it is computationally intensive and central to neural network computations (e.g., in forward and backward passes).
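Why matrix multiplication dominates can be seen by counting operations. Multiplying an m×k matrix by a k×n matrix takes m·n·k multiply-accumulate (MAC) operations, and fully connected and convolutional layers reduce to such products. The naive sketch below (a hypothetical helper for illustration) performs those MACs one at a time and counts them; accelerators such as the Cube unit in the Ascend AI Core execute large blocks of them in parallel in hardware.

```python
def matmul(A, B):
    """Naive matrix multiplication C = A @ B, counting multiply-accumulate (MAC) operations."""
    m, k = len(A), len(A[0])
    k2, n = len(B), len(B[0])
    if k != k2:
        raise ValueError("inner dimensions must match")
    C = [[0.0] * n for _ in range(m)]
    macs = 0
    for i in range(m):
        for j in range(n):
            for p in range(k):
                C[i][j] += A[i][p] * B[p][j]  # one multiply-accumulate
                macs += 1
    return C, macs

A = [[1, 2, 3], [4, 5, 6]]        # 2 x 3
B = [[7, 8], [9, 10], [11, 12]]   # 3 x 2
C, macs = matmul(A, B)
print(C, macs)  # [[58.0, 64.0], [139.0, 154.0]] 12
```

The MAC count grows with the product of all three dimensions, so a single layer with a batch of 64 inputs, 1,024 input features, and 1,024 outputs already needs about 67 million MACs per forward pass.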

HCIA AI Reference:

Cutting-edge AI Applications: Discusses AI chips and accelerators, focusing on their role in improving computational efficiency.

Deep Learning Overview: Explains how neural network operations such as matrix multiplication are optimized in AI hardware.

Question 14

Which of the following functions are provided by the nn module of MindSpore?

- A. Hyperparameter search modes such as GridSearch and RandomSearch

- B. Model evaluation indicators such as F1 Score and AUC

- C. Optimizers such as Momentum and Adam

- D. Loss functions such as MSELoss and SoftmaxCrossEntropyWithLogits

Answer:

C, D

Explanation:

The nn module in MindSpore provides essential tools for building neural networks, including:

C. Optimizers, such as Momentum and Adam, which adjust the weights of the model during training.

D. Loss functions, such as MSELoss (Mean Squared Error Loss) and SoftmaxCrossEntropyWithLogits, which measure the discrepancy between the model's predictions and the target values.

The other options are incorrect because:

A. Hyperparameter search modes (such as GridSearch and RandomSearch) belong to model training and tuning tools, not to the nn module.

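B. Model evaluation indicators such as F1 Score and AUC are handled by evaluation functions outside the nn module in current MindSpore releases (2.x), where metrics are provided by mindspore.train. Note that MindSpore 1.x exposed metrics such as nn.F1 and nn.auc under mindspore.nn, so the expected answer may depend on the version the exam targets.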
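The computations behind the nn components named in options C and D can be sketched in plain Python. This is not MindSpore code: the helpers below are hypothetical re-implementations, assuming the standard textbook formulas, of what nn.MSELoss (with its default mean reduction), nn.SoftmaxCrossEntropyWithLogits (here with a class-index label, as when sparse=True), and nn.Momentum (without Nesterov) compute.

```python
import math

def mse_loss(pred, target):
    """Mean squared error over all elements (mean reduction)."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)

def softmax_cross_entropy_with_logits(logits, label):
    """Cross-entropy of softmax(logits) against a class index, computed stably."""
    m = max(logits)                                   # subtract the max to avoid overflow in exp
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[label]                # equals -log(softmax(logits)[label])

def momentum_step(param, grad, velocity, lr=0.1, momentum=0.9):
    """Classic momentum update: v = momentum * v + grad; param = param - lr * v."""
    velocity = momentum * velocity + grad
    return param - lr * velocity, velocity

print(mse_loss([2.0, 0.0], [1.0, 0.0]))                               # 0.5
print(round(softmax_cross_entropy_with_logits([0.0, 0.0], 0), 4))     # 0.6931 (ln 2)
p, v = momentum_step(1.0, grad=2.0, velocity=0.0)
print(p, v)                                                           # 0.8 2.0
```

In MindSpore itself these would be created as nn.MSELoss(), nn.SoftmaxCrossEntropyWithLogits(sparse=True), and nn.Momentum(params, learning_rate, momentum), with the framework computing gradients automatically.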

HCIA AI Reference:

AI Development Framework: Detailed coverage of MindSpore's nn module, its optimizers, and loss functions.

Introduction to Huawei AI Platforms: Explains various MindSpore features, including network construction and training.

Question 15

Which of the following statements are false about softmax and logistic?

- A. In terms of probability, softmax modeling uses the polynomial distribution, whereas logistic modeling uses the binomial distribution.

- B. Multiple logistic regressions can be combined to achieve multi-class classification effects.

- C. Logistic is used for binary classification problems, whereas softmax is used for multi-class classification problems.

- D. In the multi-class classification of softmax regression, the output classes are not mutually exclusive. That is, the word "Apple" belongs to both the "fruit" and "3C" classes.

Answer:

A, D