google PROFESSIONAL DATA ENGINEER Exam Questions

Questions for the PROFESSIONAL DATA ENGINEER were updated on : Jul 02 ,2025

Page 1 out of 13. Viewing questions 1-15 out of 184

Question 1

Your neural network model is taking days to train. You want to increase the training speed. What can you do?

- A. Subsample your test dataset.

- B. Subsample your training dataset.

- C. Increase the number of input features to your model.

- D. Increase the number of layers in your neural network.

Answer:

D

Explanation:

Reference: https://towardsdatascience.com/how-to-increase-the-accuracy-of-a-neural-network-9f5d1c6f407d

Question 2

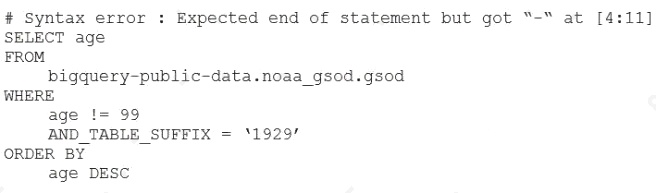

Your company is using WILDCARD tables to query data across multiple tables with similar names. The SQL statement is

currently failing with the following error:

Which table name will make the SQL statement work correctly?

- A. ‘bigquery-public-data.noaa_gsod.gsod‘

- B. bigquery-public-data.noaa_gsod.gsod*

- C. ‘bigquery-public-data.noaa_gsod.gsod’*

- D. ‘bigquery-public-data.noaa_gsod.gsod*`

Answer:

D

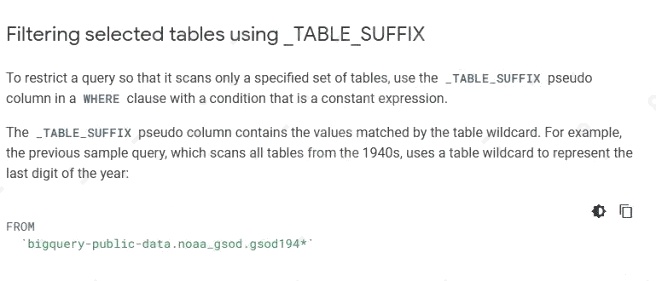

Explanation:

Reference: https://cloud.google.com/bigquery/docs/wildcard-tables

Question 3

You work for a shipping company that has distribution centers where packages move on delivery lines to route them

properly. The company wants to add cameras to the delivery lines to detect and track any visual damage to the packages in

transit. You need to create a way to automate the detection of damaged packages and flag them for human review in real

time while the packages are in transit. Which solution should you choose?

- A. Use BigQuery machine learning to be able to train the model at scale, so you can analyze the packages in batches.

- B. Train an AutoML model on your corpus of images, and build an API around that model to integrate with the package tracking applications.

- C. Use the Cloud Vision API to detect for damage, and raise an alert through Cloud Functions. Integrate the package tracking applications with this function.

- D. Use TensorFlow to create a model that is trained on your corpus of images. Create a Python notebook in Cloud Datalab that uses this model so you can analyze for damaged packages.

Answer:

A

Question 4

You launched a new gaming app almost three years ago. You have been uploading log files from the previous day to a

separate Google BigQuery table with the table name format LOGS_yyyymmdd. You have been using table wildcard

functions to generate daily and monthly reports for all time ranges. Recently, you discovered that some queries that cover

long date ranges are exceeding the limit of 1,000 tables and failing. How can you resolve this issue?

- A. Convert all daily log tables into date-partitioned tables

- B. Convert the sharded tables into a single partitioned table

- C. Enable query caching so you can cache data from previous months

- D. Create separate views to cover each month, and query from these views

Answer:

A

Question 5

Your company uses a proprietary system to send inventory data every 6 hours to a data ingestion service in the cloud.

Transmitted data includes a payload of several fields and the timestamp of the transmission. If there are any concerns about

a transmission, the system re-transmits the data. How should you deduplicate the data most efficiency?

- A. Assign global unique identifiers (GUID) to each data entry.

- B. Compute the hash value of each data entry, and compare it with all historical data.

- C. Store each data entry as the primary key in a separate database and apply an index.

- D. Maintain a database table to store the hash value and other metadata for each data entry.

Answer:

D

Question 6

You are designing an Apache Beam pipeline to enrich data from Cloud Pub/Sub with static reference data from BigQuery.

The reference data is small enough to fit in memory on a single worker. The pipeline should write enriched results to

BigQuery for analysis. Which job type and transforms should this pipeline use?

- A. Batch job, PubSubIO, side-inputs

- B. Streaming job, PubSubIO, JdbcIO, side-outputs

- C. Streaming job, PubSubIO, BigQueryIO, side-inputs

- D. Streaming job, PubSubIO, BigQueryIO, side-outputs

Answer:

A

Question 7

You are managing a Cloud Dataproc cluster. You need to make a job run faster while minimizing costs, without losing work

in progress on your clusters. What should you do?

- A. Increase the cluster size with more non-preemptible workers.

- B. Increase the cluster size with preemptible worker nodes, and configure them to forcefully decommission.

- C. Increase the cluster size with preemptible worker nodes, and use Cloud Stackdriver to trigger a script to preserve work.

- D. Increase the cluster size with preemptible worker nodes, and configure them to use graceful decommissioning.

Answer:

D

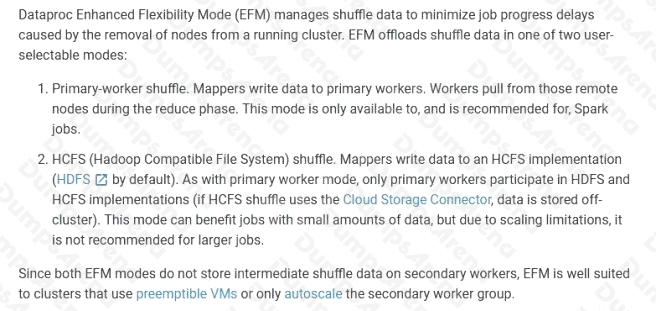

Explanation:

Reference: https://cloud.google.com/dataproc/docs/concepts/configuring-clusters/flex

Question 8

You currently have a single on-premises Kafka cluster in a data center in the us-east region that is responsible for ingesting

messages from IoT devices globally. Because large parts of globe have poor internet connectivity, messages sometimes

batch at the edge, come in all at once, and cause a spike in load on your Kafka cluster. This is becoming difficult to manage

and prohibitively expensive. What is the Google-recommended cloud native architecture for this scenario?

- A. Edge TPUs as sensor devices for storing and transmitting the messages.

- B. Cloud Dataflow connected to the Kafka cluster to scale the processing of incoming messages.

- C. An IoT gateway connected to Cloud Pub/Sub, with Cloud Dataflow to read and process the messages from Cloud Pub/Sub.

- D. A Kafka cluster virtualized on Compute Engine in us-east with Cloud Load Balancing to connect to the devices around the world.

Answer:

C

Question 9

You want to rebuild your batch pipeline for structured data on Google Cloud. You are using PySpark to conduct data

transformations at scale, but your pipelines are taking over twelve hours to run. To expedite development and pipeline run

time, you want to use a serverless tool and SOL syntax. You have already moved your raw data into Cloud Storage. How

should you build the pipeline on Google Cloud while meeting speed and processing requirements?

- A. Convert your PySpark commands into SparkSQL queries to transform the data, and then run your pipeline on Dataproc to write the data into BigQuery.

- B. Ingest your data into Cloud SQL, convert your PySpark commands into SparkSQL queries to transform the data, and then use federated quenes from BigQuery for machine learning.

- C. Ingest your data into BigQuery from Cloud Storage, convert your PySpark commands into BigQuery SQL queries to transform the data, and then write the transformations to a new table.

- D. Use Apache Beam Python SDK to build the transformation pipelines, and write the data into BigQuery.

Answer:

D

Question 10

You want to analyze hundreds of thousands of social media posts daily at the lowest cost and with the fewest steps.

You have the following requirements:

You will batch-load the posts once per day and run them through the Cloud Natural Language API.

You will extract topics and sentiment from the posts.

You must store the raw posts for archiving and reprocessing.

You will create dashboards to be shared with people both inside and outside your organization.

You need to store both the data extracted from the API to perform analysis as well as the raw social media posts for

historical archiving. What should you do?

- A. Store the social media posts and the data extracted from the API in BigQuery.

- B. Store the social media posts and the data extracted from the API in Cloud SQL.

- C. Store the raw social media posts in Cloud Storage, and write the data extracted from the API into BigQuery.

- D. Feed to social media posts into the API directly from the source, and write the extracted data from the API into BigQuery.

Answer:

D

Question 11

You are developing an application on Google Cloud that will automatically generate subject labels for users blog posts. You

are under competitive pressure to add this feature quickly, and you have no additional developer resources. No one on your

team has experience with machine learning. What should you do?

- A. Call the Cloud Natural Language API from your application. Process the generated Entity Analysis as labels.

- B. Call the Cloud Natural Language API from your application. Process the generated Sentiment Analysis as labels.

- C. Build and train a text classification model using TensorFlow. Deploy the model using Cloud Machine Learning Engine. Call the model from your application and process the results as labels.

- D. Build and train a text classification model using TensorFlow. Deploy the model using a Kubernetes Engine cluster. Call the model from your application and process the results as labels.

Answer:

A

Question 12

Your company is selecting a system to centralize data ingestion and delivery. You are considering messaging and data

integration systems to address the requirements. The key requirements are: The ability to seek to a particular offset in a

topic, possibly back to the start of all data ever captured Support for publish/subscribe semantics on hundreds of topics

Retain per-key ordering

Which system should you choose?

- A. Apache Kafka

- B. Cloud Storage

- C. Cloud Pub/Sub

- D. Firebase Cloud Messaging

Answer:

A

Question 13

You architect a system to analyze seismic data. Your extract, transform, and load (ETL) process runs as a series of

MapReduce jobs on an Apache Hadoop cluster. The ETL process takes days to process a data set because some steps are

computationally expensive. Then you discover that a sensor calibration step has been omitted. How should you change your

ETL process to carry out sensor calibration systematically in the future?

- A. Modify the transformMapReduce jobs to apply sensor calibration before they do anything else.

- B. Introduce a new MapReduce job to apply sensor calibration to raw data, and ensure all other MapReduce jobs are chained after this.

- C. Add sensor calibration data to the output of the ETL process, and document that all users need to apply sensor calibration themselves.

- D. Develop an algorithm through simulation to predict variance of data output from the last MapReduce job based on calibration factors, and apply the correction to all data.

Answer:

A

Question 14

Youre training a model to predict housing prices based on an available dataset with real estate properties. Your plan is to

train a fully connected neural net, and youve discovered that the dataset contains latitude and longitude of the property.

Real estate professionals have told you that the location of the property is highly influential on price, so youd like to engineer

a feature that incorporates this physical dependency.

What should you do?

- A. Provide latitude and longitude as input vectors to your neural net.

- B. Create a numeric column from a feature cross of latitude and longitude.

- C. Create a feature cross of latitude and longitude, bucketize at the minute level and use L1 regularization during optimization.

- D. Create a feature cross of latitude and longitude, bucketize it at the minute level and use L2 regularization during optimization.

Answer:

B

Explanation:

Reference: https://cloud.google.com/bigquery/docs/gis-data

Question 15

You need to set access to BigQuery for different departments within your company. Your solution should comply with the

following requirements:

Each department should have access only to their data.

Each department will have one or more leads who need to be able to create and update tables and provide them to their

team. Each department has data analysts who need to be able to query but not modify data.

How should you set access to the data in BigQuery?

- A. Create a dataset for each department. Assign the department leads the role of OWNER, and assign the data analysts the role of WRITER on their dataset.

- B. Create a dataset for each department. Assign the department leads the role of WRITER, and assign the data analysts the role of READER on their dataset.

- C. Create a table for each department. Assign the department leads the role of Owner, and assign the data analysts the role of Editor on the project the table is in.

- D. Create a table for each department. Assign the department leads the role of Editor, and assign the data analysts the role of Viewer on the project the table is in.

Answer:

D