databricks DATABRICKS MACHINE LEARNING ASSOCIATE Exam Questions

Questions for the DATABRICKS MACHINE LEARNING ASSOCIATE were updated on : Feb 18 ,2026

Page 1 out of 5. Viewing questions 1-15 out of 74

Question 1

Which of the following machine learning algorithms typically uses bagging?

- A. IGradient boosted trees

- B. K-means

- C. Random forest

- D. Decision tree

Answer:

C

Explanation:

Random Forest is a machine learning algorithm that typically uses bagging (Bootstrap Aggregating).

Bagging is a technique that involves training multiple base models (such as decision trees) on

different subsets of the data and then combining their predictions to improve overall model

performance. Each subset is created by randomly sampling with replacement from the original

dataset. The Random Forest algorithm builds multiple decision trees and merges them to get a more

accurate and stable prediction.

Reference:

Databricks documentation on Random Forest: Random Forest in Spark ML

Question 2

The implementation of linear regression in Spark ML first attempts to solve the linear regression

problem using matrix decomposition, but this method does not scale well to large datasets with a

large number of variables.

Which of the following approaches does Spark ML use to distribute the training of a linear regression

model for large data?

- A. Logistic regression

- B. Singular value decomposition

- C. Iterative optimization

- D. Least-squares method

Answer:

C

Explanation:

For large datasets, Spark ML uses iterative optimization methods to distribute the training of a linear

regression model. Specifically, Spark MLlib employs techniques like Stochastic Gradient Descent

(SGD) and Limited-memory Broyden–Fletcher–Goldfarb–Shanno (L-BFGS) optimization to iteratively

update the model parameters. These methods are well-suited for distributed computing

environments because they can handle large-scale data efficiently by processing mini-batches of data

and updating the model incrementally.

Reference:

Databricks documentation on linear regression: Linear Regression in Spark ML

Question 3

A data scientist has produced three new models for a single machine learning problem. In the past,

the solution used just one model. All four models have nearly the same prediction latency, but a

machine learning engineer suggests that the new solution will be less time efficient during inference.

In which situation will the machine learning engineer be correct?

- A. When the new solution requires if-else logic determining which model to use to compute each prediction

- B. When the new solution's models have an average latency that is larger than the size of the original model

- C. When the new solution requires the use of fewer feature variables than the original model

- D. When the new solution requires that each model computes a prediction for every record

- E. When the new solution's models have an average size that is larger than the size of the original model

Answer:

D

Explanation:

If the new solution requires that each of the three models computes a prediction for every record,

the time efficiency during inference will be reduced. This is because the inference process now

involves running multiple models instead of a single model, thereby increasing the overall

computation time for each record.

In scenarios where inference must be done by multiple models for each record, the latency

accumulates, making the process less time efficient compared to using a single model.

Reference:

Model Ensemble Techniques

Question 4

A data scientist has developed a machine learning pipeline with a static input data set using Spark

ML, but the pipeline is taking too long to process. They increase the number of workers in the cluster

to get the pipeline to run more efficiently. They notice that the number of rows in the training set

after reconfiguring the cluster is different from the number of rows in the training set prior to

reconfiguring the cluster.

Which of the following approaches will guarantee a reproducible training and test set for each

model?

- A. Manually configure the cluster

- B. Write out the split data sets to persistent storage

- C. Set a speed in the data splitting operation

- D. Manually partition the input data

Answer:

B

Explanation:

To ensure reproducible training and test sets, writing the split data sets to persistent storage is a

reliable approach. This allows you to consistently load the same training and test data for each model

run, regardless of cluster reconfiguration or other changes in the environment.

Correct approach:

Split the data.

Write the split data to persistent storage (e.g., HDFS, S3).

Load the data from storage for each model training session.

train_df, test_df = spark_df.randomSplit([0.8, 0.2], seed=42)

train_df.write.parquet("path/to/train_df.parquet") test_df.write.parquet("path/to/test_df.parquet")

# Later, load the data train_df = spark.read.parquet("path/to/train_df.parquet") test_df =

spark.read.parquet("path/to/test_df.parquet")

Reference:

Spark DataFrameWriter Documentation

Question 5

A data scientist is developing a single-node machine learning model. They have a large number of

model configurations to test as a part of their experiment. As a result, the model tuning process

takes too long to complete. Which of the following approaches can be used to speed up the model

tuning process?

- A. Implement MLflow Experiment Tracking

- B. Scale up with Spark ML

- C. Enable autoscaling clusters

- D. Parallelize with Hyperopt

Answer:

D

Explanation:

To speed up the model tuning process when dealing with a large number of model configurations,

parallelizing the hyperparameter search using Hyperopt is an effective approach. Hyperopt provides

tools like SparkTrials which can run hyperparameter optimization in parallel across a Spark cluster.

Example:

from hyperopt import fmin, tpe, hp, SparkTrials search_space = { 'x': hp.uniform('x', 0, 1), 'y':

hp.uniform('y', 0, 1) } def objective(params): return params['x'] ** 2 + params['y'] ** 2 spark_trials =

SparkTrials(parallelism=4) best = fmin(fn=objective, space=search_space, algo=tpe.suggest,

max_evals=100, trials=spark_trials)

Reference:

Hyperopt Documentation

Question 6

A machine learning engineer is trying to scale a machine learning pipeline by distributing its single-

node model tuning process. After broadcasting the entire training data onto each core, each core in

the cluster can train one model at a time. Because the tuning process is still running slowly, the

engineer wants to increase the level of parallelism from 4 cores to 8 cores to speed up the tuning

process. Unfortunately, the total memory in the cluster cannot be increased.

In which of the following scenarios will increasing the level of parallelism from 4 to 8 speed up the

tuning process?

- A. When the tuning process in randomized

- B. When the entire data can fit on each core

- C. When the model is unable to be parallelized

- D. When the data is particularly long in shape

- E. When the data is particularly wide in shape

Answer:

B

Explanation:

Increasing the level of parallelism from 4 to 8 cores can speed up the tuning process if each core can

handle the entire dataset. This ensures that each core can independently work on training a model

without running into memory constraints. If the entire dataset fits into the memory of each core,

adding more cores will allow more models to be trained in parallel, thus speeding up the process.

Reference:

Parallel Computing Concepts

Question 7

A machine learning engineer wants to parallelize the inference of group-specific models using the

Pandas Function API. They have developed the apply_model function that will look up and load the

correct model for each group, and they want to apply it to each group of DataFrame df.

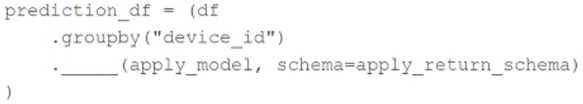

They have written the following incomplete code block:

Which piece of code can be used to fill in the above blank to complete the task?

- A. applyInPandas

- B. groupedApplyInPandas

- C. mapInPandas

- D. predict

Answer:

A

Explanation:

To parallelize the inference of group-specific models using the Pandas Function API in PySpark, you

can use the applyInPandas function. This function allows you to apply a Python function on each

group of a DataFrame and return a DataFrame, leveraging the power of pandas UDFs (user-defined

functions) for better performance.

prediction_df = ( df.groupby("device_id") .applyInPandas(apply_model,

schema=apply_return_schema) )

In this code:

groupby("device_id"): Groups the DataFrame by the "device_id" column.

applyInPandas(apply_model, schema=apply_return_schema): Applies the apply_model function to

each group and specifies the schema of the return DataFrame.

Reference:

PySpark Pandas UDFs Documentation

Question 8

A data scientist has been given an incomplete notebook from the data engineering team. The

notebook uses a Spark DataFrame spark_df on which the data scientist needs to perform further

feature engineering. Unfortunately, the data scientist has not yet learned the PySpark DataFrame

API.

Which of the following blocks of code can the data scientist run to be able to use the pandas API on

Spark?

- A. import pyspark.pandas as ps df = ps.DataFrame(spark_df)

- B. import pyspark.pandas as ps df = ps.to_pandas(spark_df)

- C. spark_df.to_pandas()

- D. import pandas as pd df = pd.DataFrame(spark_df)

Answer:

A

Explanation:

To use the pandas API on Spark, the data scientist can run the following code block:

import pyspark.pandas as ps df = ps.DataFrame(spark_df)

This code imports the pandas API on Spark and converts the Spark DataFrame spark_df into a

pandas-on-Spark DataFrame, allowing the data scientist to use familiar pandas functions for further

feature engineering.

Reference:

Databricks documentation on pandas API on Spark: pandas API on Spark

Question 9

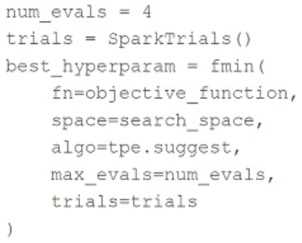

A data scientist is using the following code block to tune hyperparameters for a machine learning

model:

Which change can they make the above code block to improve the likelihood of a more accurate

model?

- A. Increase num_evals to 100

- B. Change fmin() to fmax()

- C. Change sparkTrials() to Trials()

- D. Change tpe.suggest to random.suggest

Answer:

A

Explanation:

To improve the likelihood of a more accurate model, the data scientist can increase num_evals to

100. Increasing the number of evaluations allows the hyperparameter tuning process to explore a

larger search space and evaluate more combinations of hyperparameters, which increases the

chance of finding a more optimal set of hyperparameters for the model.

Reference:

Databricks documentation on hyperparameter tuning: Hyperparameter Tuning

Question 10

Which of the following describes the relationship between native Spark DataFrames and pandas API

on Spark DataFrames?

- A. pandas API on Spark DataFrames are single-node versions of Spark DataFrames with additional metadata

- B. pandas API on Spark DataFrames are more performant than Spark DataFrames

- C. pandas API on Spark DataFrames are made up of Spark DataFrames and additional metadata

- D. pandas API on Spark DataFrames are less mutable versions of Spark DataFrames

Answer:

C

Explanation:

The pandas API on Spark DataFrames are made up of Spark DataFrames with additional metadata.

The pandas API on Spark aims to provide the pandas-like experience with the scalability and

distributed nature of Spark. It allows users to work with pandas functions on large datasets by

leveraging Spark’s underlying capabilities.

Reference:

Databricks documentation on pandas API on Spark: pandas API on Spark

Question 11

Which statement describes a Spark ML transformer?

- A. A transformer is an algorithm which can transform one DataFrame into another DataFrame

- B. A transformer is a hyperparameter grid that can be used to train a model

- C. A transformer chains multiple algorithms together to transform an ML workflow

- D. A transformer is a learning algorithm that can use a DataFrame to train a model

Answer:

A

Explanation:

In Spark ML, a transformer is an algorithm that can transform one DataFrame into another

DataFrame. It takes a DataFrame as input and produces a new DataFrame as output. This

transformation can involve adding new columns, modifying existing ones, or applying feature

transformations. Examples of transformers in Spark MLlib include feature transformers like

StringIndexer, VectorAssembler, and StandardScaler.

Reference:

Databricks documentation on transformers: Transformers in Spark ML

Question 12

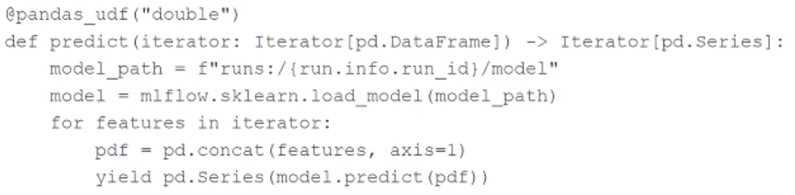

A machine learning engineer is using the following code block to scale the inference of a single-node

model on a Spark DataFrame with one million records:

Assuming the default Spark configuration is in place, which of the following is a benefit of using an

Iterator?

- A. The data will be limited to a single executor preventing the model from being loaded multiple times

- B. The model will be limited to a single executor preventing the data from being distributed

- C. The model only needs to be loaded once per executor rather than once per batch during the inference process

- D. The data will be distributed across multiple executors during the inference process

Answer:

C

Explanation:

Using an iterator in the pandas_udf ensures that the model only needs to be loaded once per

executor rather than once per batch. This approach reduces the overhead associated with repeatedly

loading the model during the inference process, leading to more efficient and faster predictions. The

data will be distributed across multiple executors, but each executor will load the model only once,

optimizing the inference process.

Reference:

Databricks documentation on pandas UDFs: Pandas UDFs

Question 13

Which of the following tools can be used to distribute large-scale feature engineering without the

use of a UDF or pandas Function API for machine learning pipelines?

- A. Keras

- B. Scikit-learn

- C. PyTorch

- D. Spark ML

Answer:

D

Explanation:

Spark MLlib is a machine learning library within Apache Spark that provides scalable and distributed

machine learning algorithms. It is designed to work with Spark DataFrames and leverages Spark’s

distributed computing capabilities to perform large-scale feature engineering and model training

without the need for user-defined functions (UDFs) or the pandas Function API. Spark MLlib provides

built-in transformations and algorithms that can be applied directly to large datasets.

Reference:

Databricks documentation on Spark MLlib: Spark MLlib

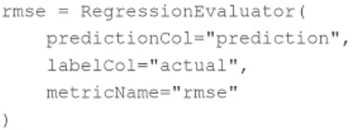

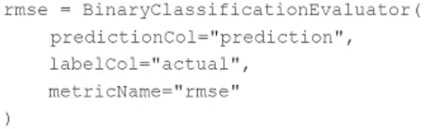

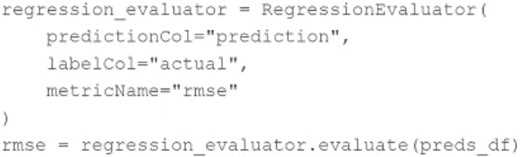

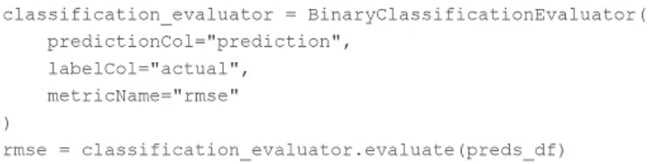

Question 14

A data scientist has developed a linear regression model using Spark ML and computed the

predictions in a Spark DataFrame preds_df with the following schema:

prediction DOUBLE

actual DOUBLE

Which of the following code blocks can be used to compute the root mean-squared-error of the

model according to the data in preds_df and assign it to the rmse variable?

A)

B)

C)

D)

- A. Option A

- B. Option B

- C. Option C

- D. Option D

Answer:

C

Explanation:

To compute the root mean-squared-error (RMSE) of a linear regression model using Spark ML, the

RegressionEvaluator class is used. The RegressionEvaluator is specifically designed for regression

tasks and can calculate various metrics, including RMSE, based on the columns containing

predictions and actual values.

The correct code block to compute RMSE from the preds_df DataFrame is:

regression_evaluator = RegressionEvaluator( predictionCol="prediction", labelCol="actual",

metricName="rmse" ) rmse = regression_evaluator.evaluate(preds_df)

This code creates an instance of RegressionEvaluator, specifying the prediction and label columns, as

well as the metric to be computed ("rmse"). It then evaluates the predictions in preds_df and assigns

the resulting RMSE value to the rmse variable.

Options A and B incorrectly use BinaryClassificationEvaluator, which is not suitable for regression

tasks. Option D also incorrectly uses BinaryClassificationEvaluator.

Reference:

PySpark ML Documentation

Question 15

A data scientist has written a feature engineering notebook that utilizes the pandas library. As the

size of the data processed by the notebook increases, the notebook's runtime is drastically

increasing, but it is processing slowly as the size of the data included in the process increases.

Which of the following tools can the data scientist use to spend the least amount of time refactoring

their notebook to scale with big data?

- A. PySpark DataFrame API

- B. pandas API on Spark

- C. Spark SQL

- D. Feature Store

Answer:

B

Explanation:

The pandas API on Spark provides a way to scale pandas operations to big data while minimizing the

need for refactoring existing pandas code. It allows users to run pandas operations on Spark

DataFrames, leveraging Spark’s distributed computing capabilities to handle large datasets more

efficiently. This approach requires minimal changes to the existing code, making it a convenient

option for scaling pandas-based feature engineering notebooks.

Reference:

Databricks documentation on pandas API on Spark: pandas API on Spark