Appian ACD301 Exam Questions

Questions for the ACD301 were updated on: Feb 20, 2026


Question 1

You are on a call with a new client, and their program lead is concerned about how their legacy systems will integrate with Appian. The lead wants to know which authentication methods Appian supports. Which three authentication methods are supported?

- A. API Keys

- B. Biometrics

- C. SAML

- D. CAC

- E. OAuth

- F. Active Directory

Answer:

C, E, F

Explanation:

Comprehensive and Detailed In-Depth Explanation:

As an Appian Lead Developer, addressing a client’s concerns about integrating legacy systems with Appian requires accurately identifying the authentication methods Appian supports, both for system-to-system communication and for user access. Legacy system integration usually involves both user authentication (e.g., SSO) and API authentication, so both are in scope. Appian’s documentation describes the supported methods in its Connected Systems and security configuration. Let’s evaluate each option:

A. API Keys:

API Key authentication sends a static key with each request (e.g., in a header). Appian supports it for outbound integrations through Connected Systems (e.g., an HTTP Connected System configured with API key authentication), which lets Appian authenticate its calls to legacy systems. However, it is a system-to-system mechanism, not a method for users to log in to the Appian platform. While supported, it is less central to legacy SSO and enterprise user authentication than the other options, which makes it a lower-priority choice here.
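As a rough illustration of what “a static key sent with each request” means on the wire, here is a minimal Python sketch (not Appian code) that builds, without sending, an outbound request carrying an API key. The header name `X-API-Key`, the URL, and the key value are hypothetical; each legacy API defines its own.

```python
import urllib.request


def build_api_key_request(url: str, api_key: str,
                          header_name: str = "X-API-Key") -> urllib.request.Request:
    """Build (but do not send) a GET request authenticated by a static API key."""
    request = urllib.request.Request(url, method="GET")
    # The key identifies the calling system, not an individual end user.
    request.add_header(header_name, api_key)
    return request


# Hypothetical endpoint and key, for illustration only.
req = build_api_key_request("https://legacy.example.com/api/orders", "demo-key-123")
# urllib normalizes header names with str.capitalize(), so "X-API-Key" is stored as "X-api-key".
print(req.get_header("X-api-key"))  # prints demo-key-123
```

Because the same key is sent on every call, it cannot express which end user is acting, which is why it suits system-to-system integration rather than user login.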

B. Biometrics:

Biometrics (e.g., fingerprint or facial recognition) are not natively supported by Appian for platform authentication or integration. Appian relies on standard enterprise methods (e.g., username/password, SSO); biometric authentication would have to be handled by an external identity provider or a custom client, not by Appian itself. Appian’s documentation lists no direct biometric support, which rules this option out.

C. SAML:

Security Assertion Markup Language (SAML) is fully supported by Appian for user authentication via Single Sign-On (SSO). Appian integrates with SAML 2.0 identity providers (e.g., Okta, PingFederate), so users can log in with the credentials already managed by an existing SAML-capable identity provider. It is a key enterprise method, widely used to integrate with existing identity management systems, and it is explicitly listed in Appian’s security configuration options, which makes it a top choice.

D. CAC:

Common Access Card (CAC) authentication, common in government settings that use smart cards, is not natively supported by Appian as a standalone method. Appian can work with CAC indirectly, through SAML or PKI (Public Key Infrastructure) handled by an identity provider, but CAC is not a direct Appian authentication option. The documentation covers smart card support only indirectly, through SSO configurations, and does not list CAC itself, so this option is less definitive than the others.

E. OAuth:

OAuth (specifically OAuth 2.0) is supported by Appian both for outbound integrations (e.g., the Authorization Code Grant and Client Credentials grant types) and for inbound API authentication (e.g., securing Appian Web APIs). For legacy system integration, Appian can use OAuth to authenticate with external APIs (e.g., Google, Salesforce) or let legacy systems call Appian services securely. Appian’s Connected System framework includes OAuth configuration, making it a versatile, standards-based method that is highly relevant to the client’s needs.
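For the system-to-system case, the Client Credentials grant comes down to one form-encoded POST to the identity provider’s token endpoint (RFC 6749, section 4.4). The minimal Python sketch below builds that request without sending it. The endpoint, client ID, secret, and scope are hypothetical placeholders, and in Appian the Connected System performs this exchange for you.

```python
import base64
import urllib.parse
import urllib.request
from typing import Optional


def build_client_credentials_request(token_url: str, client_id: str, client_secret: str,
                                     scope: Optional[str] = None) -> urllib.request.Request:
    """Build (but do not send) an OAuth 2.0 Client Credentials token request."""
    form = {"grant_type": "client_credentials"}
    if scope:
        form["scope"] = scope
    body = urllib.parse.urlencode(form).encode("ascii")
    # RFC 6749, section 2.3.1: the client may authenticate with HTTP Basic, using its
    # form-urlencoded client ID and secret as the username and password.
    user = urllib.parse.quote_plus(client_id)
    password = urllib.parse.quote_plus(client_secret)
    credentials = base64.b64encode(f"{user}:{password}".encode("ascii")).decode("ascii")
    request = urllib.request.Request(token_url, data=body, method="POST")
    request.add_header("Authorization", f"Basic {credentials}")
    request.add_header("Content-Type", "application/x-www-form-urlencoded")
    return request


# Hypothetical identity provider and client, for illustration only.
req = build_client_credentials_request("https://idp.example.com/oauth2/token",
                                       "legacy-app", "s3cret", scope="read write")
print(req.data)  # prints b'grant_type=client_credentials&scope=read+write'
```

The response to such a request would carry an access token that the client then sends as a bearer token on subsequent API calls.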

F. Active Directory:

Active Directory (AD) integration via LDAP (Lightweight Directory Access Protocol) is supported for user authentication in Appian. Users and groups can be synchronized from AD, and users can log in directly with their AD credentials. For legacy systems that use AD as their identity store, this is a seamless integration path. Appian’s documentation lists LDAP/AD as a core authentication option, and it is widely adopted in enterprise environments, which makes it a strong fit.

Conclusion: The three supported authentication methods are C (SAML), E (OAuth), and F (Active Directory). They match Appian’s enterprise-grade capabilities for legacy system integration: SAML for SSO, OAuth for API security, and AD for user management. API Keys (A) are supported but play a smaller role in user authentication, CAC (D) is supported only indirectly, and Biometrics (B) is not supported natively. This selection reassures the client that Appian works with the common authentication standards their legacy systems are likely to use.

Reference:

Appian Documentation: "Authentication for Connected Systems" (OAuth, API Keys).

Appian Documentation: "Configuring Authentication" (SAML, LDAP/Active Directory).

Appian Lead Developer Certification: Integration Module (Authentication Methods).

Question 2

The business database for a large, complex Appian application is to undergo a migration between database technologies, along with interface and process changes. The project manager asks you to recommend a test strategy. Given the changes, which two items should be included in the test strategy?

- A. Internationalization testing of the Appian platform

- B. A regression test of all existing system functionality

- C. Penetration testing of the Appian platform

- D. Tests for each of the interfaces and process changes

- E. Tests that ensure users can still successfully log into the platform

Answer:

B, D

Explanation:

Comprehensive and Detailed In-Depth Explanation:

As an Appian Lead Developer, recommending a test strategy for a large, complex application that is undergoing a database migration (e.g., from Oracle to PostgreSQL) as well as interface and process changes means focusing on system stability, existing functionality, and the specific updates. The strategy must address the risks tied to each part of the scope (the database technology shift, the interface modifications, and the process updates) while following Appian’s testing best practices. Let’s evaluate each option:

A. Internationalization testing of the Appian platform:

Internationalization testing verifies that the application supports multiple languages, locales, and formats (e.g., date formats). While valuable for global applications, nothing in the scenario indicates a change in localization requirements tied to the database migration, interfaces, or processes. Appian handles internationalization natively (e.g., via locale settings), and this is not affected by the database technology or by UI and process changes unless those changes explicitly touch localization. It is out of scope in this context and not a priority.

B. A regression test of all existing system functionality:

This is a critical inclusion. A migration between database technologies can affect data integrity, queries (e.g., a!queryEntity), and performance because of differences in SQL dialects, indexing, and drivers. Regression testing confirms that all existing functionality (records, reports, processes, and integrations) still works as expected after the migration. Appian’s guidance for Lead Developers recommends regression testing after significant infrastructure changes like this one, because unanticipated edge cases (e.g., datatype mismatches between the old and new databases) could break the application. Given the “large, complex” nature of the application, full-system validation is essential to catch unintended impacts.
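Regression testing a migration usually starts by checking that the data itself survived. The minimal Python sketch below compares row counts and an order-independent hash of a table between the old and new databases. Two in-memory SQLite databases stand in for the real source and target, and the `cases` table is hypothetical. It complements, rather than replaces, functional regression tests of records, reports, and processes.

```python
import hashlib
import sqlite3


def table_fingerprint(conn, table: str, columns: list[str]) -> tuple[int, str]:
    """Return (row_count, sha256) for a table, independent of physical row order.

    `table` and `columns` are interpolated into SQL, so they must be trusted identifiers.
    """
    rows = conn.execute(f"SELECT {', '.join(columns)} FROM {table}").fetchall()
    # Sort in Python rather than with ORDER BY, so engine-specific collation doesn't matter.
    digest = hashlib.sha256()
    for text in sorted(repr(row) for row in rows):
        digest.update(text.encode("utf-8"))
    return len(rows), digest.hexdigest()


def compare_table(source, target, table: str, columns: list[str]) -> dict:
    """Compare one table between the pre- and post-migration databases."""
    src_count, src_hash = table_fingerprint(source, table, columns)
    tgt_count, tgt_hash = table_fingerprint(target, table, columns)
    return {"table": table, "source_rows": src_count, "target_rows": tgt_count,
            "match": src_count == tgt_count and src_hash == tgt_hash}


# Two in-memory SQLite databases stand in for the old and new database technologies.
source, target = sqlite3.connect(":memory:"), sqlite3.connect(":memory:")
for conn in (source, target):
    conn.execute("CREATE TABLE cases (id INTEGER, title TEXT, opened TEXT)")
source.executemany("INSERT INTO cases VALUES (?, ?, ?)",
                   [(1, "Laptop", "2025-01-02"), (2, "Phone", "2025-01-03")])
# Simulated migration defect: one date was reformatted on the way across.
target.executemany("INSERT INTO cases VALUES (?, ?, ?)",
                   [(2, "Phone", "2025-01-03"), (1, "Laptop", "02/01/2025")])
print(compare_table(source, target, "cases", ["id", "title", "opened"]))
# {'table': 'cases', 'source_rows': 2, 'target_rows': 2, 'match': False}
```

Against two different engines, the drivers may return different Python types for the same value (such as `Decimal` versus `float`), so values would need to be normalized before hashing; otherwise every row reports a mismatch.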

C. Penetration testing of the Appian platform:

Penetration testing assesses security vulnerabilities (e.g., injection attacks). Security matters, but the changes described (the database migration and the interface and process updates) do not inherently alter Appian’s security model (e.g., authentication, encryption), which is managed at the platform level. Appian’s cloud or on-premises security is not directly tied to the database technology unless new vulnerabilities are introduced, and nothing here suggests they are. Penetration testing is a periodic concern rather than one specific to this migration, so it is less relevant than functional validation.

D. Tests for each of the interfaces and process changes:

This is also essential. The project explicitly includes “interface and process changes” alongside the migration. Interface updates (e.g., SAIL forms) may rely on new data structures or queries, while process changes (e.g., modified process models) may involve new nodes or logic. Testing each change ensures that these components work correctly with the new database and meet business requirements. Appian’s testing guidelines emphasize targeted validation of modified components to confirm that they integrate with the migrated data layer, which makes this a primary focus of the strategy.

E. Tests that ensure users can still successfully log into the platform:

Login testing verifies authentication (e.g., SSO, LDAP), which is handled by Appian’s security layer rather than by the business database. A business database migration affects application data, not user authentication, unless that database stores user credentials, which is uncommon because Appian manages identities separately. Login checks are a quick sanity test, but they are narrow and already covered by broader regression testing (B), so they are redundant as a standalone item.

Conclusion: The two key items are B (a regression test of all existing system functionality) and D (tests for each of the interfaces and process changes). Regression testing (B) ensures that the database migration does not disrupt the application as a whole, while targeted testing (D) validates the specific interface and process updates. Together they cover the full scope, existing stability and new functionality, in line with Appian’s recommended approach for complex migrations and modifications.

Reference:

Appian Documentation: "Testing Best Practices" (Regression and Component Testing).

Appian Lead Developer Certification: Application Maintenance Module (Database Migration Strategies).

Appian Best Practices: "Managing Large-Scale Changes in Appian" (Test Planning).

Question 3

An Appian application contains an integration, called at the end of a form submission, that sends a JSON payload containing case fields (such as text, dates, and numeric fields) to a customer’s API. The API returns the code created for the user’s request as the response. To follow their case efficiently, the user needs to be informed of that code at the end of the process. What should be your two primary considerations when building this integration?

- A. A process must be built to retrieve the API response afterwards so that the user experience is not impacted.

- B. The request must be a multi-part POST.

- C. The size limit of the body needs to be carefully followed to avoid an error.

- D. A dictionary that matches the expected request body must be manually constructed.

Answer:

C, D

Explanation:

Comprehensive and Detailed In-Depth Explanation:

As an Appian Lead Developer, building an integration that sends JSON to a customer’s API and returns a code to the user means balancing usability, performance, and reliability. The integration is triggered at form submission, and the user must see the response (the case code) promptly. The JSON includes standard fields (text, dates, numbers), and the question asks about primary considerations for the integration itself. Let’s evaluate each option based on Appian’s official documentation and best practices:

A. A process must be built to retrieve the API response afterwards so that the user experience is not impacted:

This suggests making the integration asynchronous by calling it from a process model (e.g., via a Start Process smart service) and retrieving the response later, avoiding delays in the UI. Although this improves the user experience for slow APIs (e.g., by showing a “Processing” message), it conflicts with the requirement that the user be “informed of that code at the end of the process.” Asynchronous processing would delay the code display and require additional steps (e.g., a follow-up task), which is not efficient for this use case. Appian’s default pattern, a synchronous call through an Integration object, is suitable unless latency is a known issue, so this is a secondary rather than a primary consideration.

B. The request must be a multi-part POST:

A multi-part POST (i.e., multipart/form-data) is used to send mixed content, such as files and text, in a single request. Here, the payload is JSON containing case fields (text, dates, numbers), and no files are mentioned. A JSON payload is normally sent as a standard POST with the application/json content type, which Appian’s HTTP Connected System and Integration objects support and which matches REST API norms. Forcing a multi-part POST adds unnecessary complexity and would not match an API that expects a JSON body. Multi-part is not required for JSON-only data, which rules it out as a primary consideration.

C. The size limit of the body needs to be carefully followed to avoid an error:

This is a primary consideration. Appian’s Integration object has a payload size limit (approximately 10 MB, though exact limits depend on the environment and the API), and exceeding a limit causes errors (e.g., 413 Payload Too Large). The JSON includes multiple case fields, and although the scenario does not state a data volume, large cases could approach this limit. The customer’s API may also impose its own size restrictions, as is common for REST APIs. Appian Lead Developer training emphasizes validating payload size during design (e.g., testing with the maximum expected data) to prevent runtime failures. This protects reliability and is critical for production success.
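Checking the size of the serialized body before the call makes the limit explicit at design time. A minimal Python sketch, assuming a hypothetical `MAX_BODY_BYTES` ceiling that you would set to the smaller of the platform’s and the customer API’s documented limits:

```python
import json

# Hypothetical placeholder echoing the approximate figure cited above; confirm the real
# Appian and customer API limits and use the smaller one.
MAX_BODY_BYTES = 10 * 1024 * 1024


def check_body_size(payload: dict, limit: int = MAX_BODY_BYTES) -> int:
    """Serialize the payload as UTF-8 JSON and fail fast if it exceeds `limit` bytes."""
    body = json.dumps(payload, ensure_ascii=False, separators=(",", ":")).encode("utf-8")
    # Measure bytes, not characters: non-ASCII text (e.g. "é") takes more than one byte.
    if len(body) > limit:
        raise ValueError(f"Request body is {len(body)} bytes; the limit is {limit} bytes")
    return len(body)


print(check_body_size({"title": "Broken laptop", "amount": 250}))  # prints 38
```

Testing with the largest realistic case (longest texts, most related items) gives a more useful safety margin than checking typical data.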

D. A dictionary that matches the expected request body must be manually constructed:

This is also a primary consideration. The integration sends a JSON payload to the customer’s API, which expects a specific structure (e.g., { "field1": "text", "field2": "date" }). In Appian, the Integration object’s JSON body is typically built from a dictionary (key-value pairs) constructed by hand to match the API’s schema. Mismatches (e.g., wrong field names or types) cause errors (e.g., 400 Bad Request) or silent failures. Appian’s documentation stresses defining the request body accurately (e.g., by mapping form data to a CDT or dictionary) so that the API accepts the payload and returns the case code correctly. This is foundational to the integration’s functionality.
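The mapping step can be sketched in Python; in Appian itself it would be an expression that builds a dictionary and serializes it (e.g., with a!toJson()). The field names in `FIELD_MAP` and the `REQUIRED` set are hypothetical stand-ins for the customer API’s schema; the point is that names, date formats, and required fields are matched explicitly rather than left to chance.

```python
import json
from datetime import date

# Hypothetical mapping from internal case fields to the customer API's field names.
FIELD_MAP = {"title": "caseTitle", "opened": "openedOn", "amount": "claimAmount"}
REQUIRED = {"caseTitle", "openedOn"}  # hypothetical required fields


def to_api_body(case: dict) -> str:
    """Build the JSON request body in the exact shape the API expects."""
    body = {}
    for internal_name, api_name in FIELD_MAP.items():
        value = case.get(internal_name)
        if value is None:
            continue  # leave out empty fields instead of sending null
        if isinstance(value, date):
            value = value.isoformat()  # ISO 8601, e.g. "2026-02-20"
        body[api_name] = value
    missing = REQUIRED - set(body)
    if missing:
        raise ValueError(f"Missing required fields: {sorted(missing)}")
    return json.dumps(body)


print(to_api_body({"title": "Broken laptop", "opened": date(2026, 2, 20), "amount": 250}))
# {"caseTitle": "Broken laptop", "openedOn": "2026-02-20", "claimAmount": 250}
```

Failing fast on a missing required field surfaces the problem in Appian, where it is easy to diagnose, instead of as a 400 response from the customer’s API.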

Conclusion: The two primary considerations are C (the size limit of the body) and D (constructing a matching dictionary). Together they ensure that the integration works reliably (C) and meets the API’s expectations (D), so the user receives the case code when the submission completes. Respecting size limits prevents technical failures, while the dictionary ensures data integrity; both are critical for a synchronous JSON POST in Appian. Option A could matter for performance but is not primary given the requirement, and B is irrelevant to the scenario.

Reference:

Appian Documentation: "Integration Object" (Request Body Configuration and Size Limits).

Appian Lead Developer Certification: Integration Module (Building REST API Integrations).

Appian Best Practices: "Designing Reliable Integrations" (Payload Validation and Error Handling).

Question 4

While working on an application, you have identified oddities and breaks in some of your components. How can you guarantee that this mistake does not happen again in the future?

- A. Design and communicate a best practice that dictates designers only work within the confines of their own application.

- B. Ensure that the application administrator group only has designers from that application’s team.

- C. Create a best practice that enforces a peer review of the deletion of any components within the application.

- D. Provide Appian developers with the “Designer” permissions role within Appian. Ensure that they have only basic user rights and assign them the permissions to administer their application.

Answer:

C

Explanation:

Comprehensive and Detailed In-Depth Explanation:

As an Appian Lead Developer, preventing recurring “oddities and breaks” in application components requires addressing their root causes (most likely human error, lack of oversight, or uncontrolled changes) while using Appian’s governance and collaboration features. The question implies a past mistake (e.g., accidental deletions or modifications) and asks for a proactive, sustainable solution. Let’s evaluate each option based on Appian’s official documentation and best practices:

A. Design and communicate a best practice that dictates designers only work within the confines of their own application:

This restricts designers to their assigned applications through a policy. Although Appian supports application-level security (e.g., limiting which designers can edit a given application), this approach relies on voluntary compliance rather than enforcement. It also does not address “oddities and breaks” directly: a designer could still mistakenly alter components within their own application. Appian’s documentation favors technical controls and process rigor over broad guidelines, so this is insufficient as a guarantee.

B. Ensure that the application administrator group only has designers from that application’s team:

This configures security so that only the team’s designers have Administrator rights to the application (via the application’s security settings). While this limits outside interference, it does not prevent internal mistakes (e.g., a team designer deleting a critical component). Appian’s security model already restricts access by default, and the issue is component integrity, not unauthorized access. This step is good hygiene, not a direct solution to the problem, and it cannot “guarantee” prevention.

C. Create a best practice that enforces a peer review of the deletion of any components within the application:

This is the best choice. A peer review of deletions (e.g., of process models, interfaces, or records) adds a checkpoint that catches errors before they affect the application. In Appian, deletions are permanent and can cascade by breaking the objects that depend on the deleted one, which matches the “oddities and breaks” described. Appian does not natively enforce peer reviews, but they can be implemented through team workflows, for example with collaboration tools such as comments or review tasks, or as part of version control practices during deployment. Appian Lead Developer training emphasizes change management and peer validation to maintain application stability, which makes this a robust, preventive measure that addresses the root cause.
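A reviewer’s key question before approving a deletion is “what depends on this object?” Appian Designer can show an object’s dependents; the minimal Python sketch below only models the idea, using a hypothetical map of each object to the objects it references, and walks that map in reverse to find everything that would break, directly or transitively.

```python
from collections import deque


def impacted_by_deletion(references: dict[str, set[str]], target: str) -> set[str]:
    """Return every object that directly or transitively references `target`."""
    # Invert "object -> objects it uses" into "object -> objects that use it".
    used_by: dict[str, set[str]] = {}
    for obj, deps in references.items():
        for dep in deps:
            used_by.setdefault(dep, set()).add(obj)
    impacted: set[str] = set()
    queue = deque([target])
    while queue:  # breadth-first walk over dependents
        current = queue.popleft()
        for parent in used_by.get(current, set()):
            if parent not in impacted:
                impacted.add(parent)
                queue.append(parent)
    impacted.discard(target)  # a reference cycle could lead back to the target itself
    return impacted


# Hypothetical application objects and what each one references.
refs = {
    "CASE_ProcessCreate": {"CASE_FormCreate"},
    "CASE_FormCreate": {"CASE_GetStatusLabel"},
    "CASE_Dashboard": {"CASE_GetStatusLabel"},
    "CASE_GetStatusLabel": set(),
    "CASE_UnusedRule": set(),
}
print(sorted(impacted_by_deletion(refs, "CASE_GetStatusLabel")))
# ['CASE_Dashboard', 'CASE_FormCreate', 'CASE_ProcessCreate']
```

An empty result suggests the deletion is low-risk; a non-empty one tells the reviewer exactly which objects to update or retest first.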

D. Provide Appian developers with the “Designer” permissions role within Appian. Ensure that they have only basic user rights and assign them the permissions to administer their application:

This option is awkwardly worded, but it describes giving developers designer access while keeping them as basic users, with Administrator rights only on their own application. In Appian, designer access comes from membership in the Designers group and Basic User is a user type, so the combination amounts to least-privilege access rather than a contradiction. Regardless, adjusting permissions does not prevent mistakes; it only controls who can make them. The issue is error prevention, not access, so this option misses the mark.

Conclusion: Creating a best practice that enforces a peer review of the deletion of any components (C) is the strongest solution. It directly mitigates the risk of “oddities and breaks” by adding oversight to destructive actions, relies on team collaboration, and follows Appian’s recommended governance practices. Implementation could involve documenting the process, training the team, and using Appian’s change-tracking features (e.g., object version history) to follow changes, so that mistakes are caught before deployment. This provides the closest thing to a guarantee against recurrence.

Reference:

Appian Documentation: "Application Security and Governance" (Change Management Best Practices).

Appian Lead Developer Certification: Application Design Module (Preventing Errors through Process).

Appian Best Practices: "Team Collaboration in Appian Development" (Peer Review Recommendations).

Question 5

An existing integration is implemented in Appian. It sends data for the main case and its related objects as a complex JSON payload to a REST API, which inserts the new information into an existing application. The integration worked well for a while. However, the customer has highlighted one specific scenario in which the integration failed in Production and the API responded with a 500 Internal Server Error. The project is in Post-Production Maintenance, and the customer needs your assistance. Which three steps should you take to troubleshoot the issue?

- A. Send the same payload to the test API to ensure the issue is not related to the API environment.

- B. Send a test case to the Production API to ensure the service is still up and running.

- C. Analyze the behavior of subsequent calls to the Production API to ensure there is no global issue, and ask the customer to analyze the API logs to understand the nature of the issue.

- D. Obtain the JSON sent to the API and validate that there is no difference between the expected JSON format and the sent one.

- E. Ensure there were no network issues when the integration was sent.

Answer:

A, C, D

Explanation:

Comprehensive and Detailed In-Depth Explanation:

As an Appian Lead Developer in the Post-Production Maintenance phase, troubleshooting a failed integration (HTTP 500 Internal Server Error) requires a systematic approach to isolate the root cause, whether it lies on the Appian side, on the API side, or in the environment. A 500 error typically indicates a problem on the server (API) side, but the developer must still confirm whether Appian contributed to it and collaborate with the customer. The goal is to select the three steps that diagnose this specific scenario most efficiently while following Appian’s best practices. Let’s evaluate each option:

A. Send the same payload to the test API to ensure the issue is not related to the API environment:

This is a critical step. Replaying the exact payload from the failed Production call against a test API environment shows whether the issue is environment-specific (e.g., a Production-only configuration) or inherent to the payload or the API logic. Appian’s integration troubleshooting guidelines recommend testing in a non-Production environment first to isolate variables. If the test API succeeds, the Production environment or API state is implicated; if it fails, the payload or the API logic is suspect. The payload can be recovered from Appian’s integration logging (where request/response capture is enabled), and replaying it is a standard diagnostic practice.

B. Send a test case to the Production API to ensure the service is still up and running:

Verifying that the Production API is available is useful, but sending an arbitrary test case risks writing test data into Production (this API inserts information) and may not reproduce the specific scenario. A generic test might succeed (e.g., with simpler data) and mask an issue tied to the complex JSON. Appian’s Post-Production guidelines discourage unnecessary Production interactions unless replicating the exact failure is controlled and justified. This step is less precise than analyzing existing behavior (C) and is not among the top three priorities.

C. Analyze the behavior of subsequent calls to the Production API to ensure there is no global issue, and ask the customer to analyze the API logs to understand the nature of the issue:

This is essential. Reviewing subsequent Production calls (through Appian’s integration logs or monitoring tools) shows whether the 500 error is isolated or systemic (e.g., an API outage). Because the Appian team cannot access the API server’s logs, working with the customer to review those logs is critical for a 500 error, which often stems from a server-side exception (e.g., unhandled data). Appian Lead Developer training emphasizes partnering with API owners and using Appian’s process history and monitoring tools to correlate failures, which makes this a key troubleshooting step.
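Deciding whether a failure is isolated or systemic can be made concrete by looking at what happened after it. A minimal Python sketch, assuming a hypothetical list of call records (timestamp and HTTP status) exported from the integration logs and an arbitrary 20% error-rate threshold:

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class CallRecord:
    timestamp: datetime
    status: int  # HTTP status returned by the API


def classify_failure(records: list[CallRecord], failed_at: datetime,
                     threshold: float = 0.2) -> str:
    """Label a failure 'isolated' or 'systemic' based on the calls made after it."""
    later = [r for r in records if r.timestamp > failed_at]
    if not later:
        return "unknown"  # no subsequent traffic to compare against
    server_errors = sum(1 for r in later if r.status >= 500)
    return "systemic" if server_errors / len(later) >= threshold else "isolated"


# Hypothetical log: one failed call followed by eight successful ones.
failed_at = datetime(2026, 2, 20, 10, 0)
log = [CallRecord(failed_at, 500)] + [
    CallRecord(datetime(2026, 2, 20, 10, m), 201) for m in range(1, 9)
]
print(classify_failure(log, failed_at))  # prints isolated
```

An isolated result points back to the specific payload (options A and D); a systemic one points to the API or its environment, which is where the customer’s server logs become decisive.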

D. Obtain the JSON sent to the API and validate that there is no difference between the expected JSON format and the sent one:

This is a foundational step. The complex JSON payload is central to the integration, and a 500 error can result from malformed data (e.g., missing fields or invalid types) that the API cannot process. In Appian, the sent JSON can be retrieved from the integration’s execution logs (if enabled) or from the process instance details. Comparing it against the API’s documented schema (e.g., using Postman or the API specification) confirms that Appian’s output matches expectations. Appian’s documentation stresses validating payloads as a first-line check for integration failures, especially when only specific scenarios fail.
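When the customer supplies a formal JSON Schema, a validator such as the third-party `jsonschema` package is the natural tool. Without one, the comparison can be sketched with the standard library alone: the minimal Python function below walks the payload captured from the failed call alongside a hypothetical description of the expected shape and reports missing, unexpected, and mistyped fields with their paths.

```python
import json


def diff_against_schema(payload, schema, path="$"):
    """Compare decoded JSON with an expected shape and return a list of problems.

    `schema` uses a hypothetical minimal format: a dict maps keys to sub-schemas,
    a one-element list describes every array item, and a Python type describes a leaf.
    """
    if isinstance(schema, dict):
        if not isinstance(payload, dict):
            return [f"{path}: expected object, got {type(payload).__name__}"]
        problems = []
        for key, sub_schema in schema.items():
            if key not in payload:
                problems.append(f"{path}.{key}: missing")
            else:
                problems.extend(diff_against_schema(payload[key], sub_schema, f"{path}.{key}"))
        for key in sorted(payload.keys() - schema.keys()):
            problems.append(f"{path}.{key}: unexpected")
        return problems
    if isinstance(schema, list):
        if not isinstance(payload, list):
            return [f"{path}: expected array, got {type(payload).__name__}"]
        problems = []
        for i, item in enumerate(payload):
            problems.extend(diff_against_schema(item, schema[0], f"{path}[{i}]"))
        return problems
    # bool is a subclass of int in Python, so reject True/False where a number is expected.
    if not isinstance(payload, schema) or (isinstance(payload, bool) and schema is not bool):
        return [f"{path}: expected {schema.__name__}, got {type(payload).__name__}"]
    return []


# Hypothetical expected shape and a captured payload with three defects.
expected = {"caseId": str, "openedOn": str, "items": [{"sku": str, "qty": int}]}
sent = json.loads('{"caseId": "C-1", "items": [{"sku": "A1", "qty": "2"}], "note": "x"}')
for problem in diff_against_schema(sent, expected):
    print(problem)
# $.openedOn: missing
# $.items[0].qty: expected int, got str
# $.note: unexpected
```

A type mismatch such as a quoted number is exactly the kind of difference that some APIs reject with a 400 and others fail on with an unhandled 500.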

E. Ensure there were no network issues when the integration was sent:

Network issues (e.g., timeouts, DNS failures) can cause integration errors, but a 500 Internal Server Error shows that the request reached the API and triggered a server-side failure. A pure network problem typically surfaces as a connection failure or timeout (or, behind a proxy or gateway, as a 502 or 504) rather than as a 500. Appian’s integration logs record the HTTP status code, and network checks (e.g., by IT teams) are secondary unless connectivity is suspected. This step is less relevant to the 500 error and lower priority than A, C, and D.
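The distinction between “the API answered with an error” and “no HTTP answer arrived” is visible in how a client library reports the failure. A minimal Python sketch using the standard library’s urllib exceptions; the category labels are illustrative, not Appian terminology.

```python
import socket
import urllib.error


def classify_integration_error(exc: Exception) -> str:
    """Map a failed HTTP call to a coarse troubleshooting category."""
    # HTTPError subclasses URLError, so it must be checked first.
    if isinstance(exc, urllib.error.HTTPError):
        if exc.code in (502, 504):
            return "gateway_error"  # an intermediary got no good answer from upstream
        if exc.code == 503:
            return "service_unavailable"
        if 500 <= exc.code < 600:
            return "server_error"  # the request reached the API and failed there
        if 400 <= exc.code < 500:
            return "client_error"  # the API rejected the request as sent
        return "unexpected_status"
    if isinstance(exc, (TimeoutError, socket.timeout)):
        return "timeout"
    if isinstance(exc, urllib.error.URLError):
        if isinstance(exc.reason, (TimeoutError, socket.timeout)):
            return "timeout"
        return "connection_failure"  # DNS, refused connection, TLS: no HTTP response at all
    return "unknown"


err = urllib.error.HTTPError("https://api.example.com/cases", 500, "Internal Server Error", None, None)
print(classify_integration_error(err))  # prints server_error
```

Only the `server_error` branch matches the scenario in this question, which is why network checks rank below A, C, and D.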

Conclusion: The three best steps are A (test the API with the same payload), C (analyze subsequent calls and the customer’s logs), and D (validate the JSON payload). Together they isolate the issue systematically: D checks Appian’s output, A rules out environment-specific problems, and C draws on the customer’s insight into the API failure. This follows Appian’s Post-Production Maintenance approach: replicate safely, analyze logs, and validate data.

Reference:

Appian Documentation: "Troubleshooting Integrations" (Integration Object Logging and Debugging).

Appian Lead Developer Certification: Integration Module (Post-Production Troubleshooting).

Appian Best Practices: "Handling REST API Errors in Appian" (500 Error Diagnostics).

Question 6

You need to connect Appian with LinkedIn to retrieve personal information about the users of your application. This information is considered private, and users should allow Appian to retrieve their information. Which authentication method would you recommend to fulfill this request?

- A. API Key Authentication

- B. Basic Authentication with user’s login information

- C. Basic Authentication with dedicated account’s login information

- D. OAuth 2.0: Authorization Code Grant

Answer:

D

Explanation:

Comprehensive and Detailed In-Depth Explanation:

As an Appian Lead Developer, integrating with an external system like LinkedIn to retrieve private user information requires a secure, user-consented authentication method that fits both Appian’s capabilities and industry standards. The requirement that users explicitly allow Appian to access their private data rules out methods that do not involve user authorization. Let’s evaluate each option based on Appian’s official documentation and LinkedIn’s API requirements:

A. API Key Authentication:

API Key authentication uses a single static key to authenticate requests. Appian supports it through Connected Systems (e.g., an HTTP Connected System that sends the key in a header), but it is unsuitable here. API keys authenticate the application, not the user, and provide no mechanism for individual user consent. LinkedIn’s API for private data (e.g., profile information) requires per-user authorization, which API keys cannot provide. API keys are best suited to server-to-server communication without user context, so this option does not meet the requirement.

B. Basic Authentication with user’s login information:

This method sends a username and password (Base64-encoded, not encrypted) supplied by each user. Appian supports Basic Authentication in Connected Systems, but using it here would require users to enter their LinkedIn credentials directly into Appian. That is insecure, impractical, and against LinkedIn’s security policies, because it exposes users’ passwords to the application. Appian Lead Developer best practices discourage storing or handling user credentials directly because of security risks (e.g., credential leakage) and the maintenance burden. Moreover, LinkedIn’s API does not support Basic Authentication for user-specific data access; it requires OAuth 2.0. This option is not viable.

C. Basic Authentication with dedicated account’s login information:

This uses a single, dedicated LinkedIn account’s credentials to authenticate all requests. Although it can be configured in an Appian Connected System using Basic Authentication, it fails the requirement that “users should allow Appian to retrieve their information.” A dedicated account would access data on behalf of all users without their individual consent, which violates privacy principles and LinkedIn’s API terms. LinkedIn requires user-specific authorization for private data, and Appian documentation advises against shared credentials for user-specific integrations, so this option is inappropriate.

D. OAuth 2.0: Authorization Code Grant:

This is the recommended choice. The OAuth 2.0 Authorization Code Grant, supported natively by Appian’s Connected System framework, is designed for scenarios in which users must authorize an application (Appian) to access their private data on a third-party service (LinkedIn). In this flow, Appian redirects the user to LinkedIn’s authorization page, where they grant permission. On approval, LinkedIn returns an authorization code, which Appian exchanges for an access token at the token request endpoint. The token lets Appian retrieve private user data (e.g., profile details) securely and on a per-user basis. Appian’s documentation recommends this method for integrations that require user consent, such as LinkedIn, and provides a!authorizationLink() so that users can authorize (or re-authorize) Appian when no valid token exists. LinkedIn’s API (e.g., the v2 API) requires OAuth 2.0 for personal data access, which matches this approach.
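Appian’s Connected System runs this flow itself, but spelling out its two requests helps when configuring it or debugging a consent problem. The minimal Python sketch below follows RFC 6749, section 4.1, builds both requests without sending them, and uses the LinkedIn endpoints cited in this explanation. The client ID, redirect URI, and scope values are hypothetical placeholders; LinkedIn’s documentation defines the scopes actually available.

```python
import secrets
import urllib.parse
from typing import Optional

# LinkedIn endpoints cited in this explanation.
AUTHORIZATION_ENDPOINT = "https://www.linkedin.com/oauth/v2/authorization"
TOKEN_ENDPOINT = "https://www.linkedin.com/oauth/v2/accessToken"


def build_authorization_url(client_id: str, redirect_uri: str, scope: str,
                            state: Optional[str] = None) -> tuple[str, str]:
    """Step 1: the URL the user's browser is sent to so they can grant (or refuse) consent."""
    state = state or secrets.token_urlsafe(16)  # anti-CSRF value; check it on the callback
    query = urllib.parse.urlencode({
        "response_type": "code",
        "client_id": client_id,
        "redirect_uri": redirect_uri,
        "state": state,
        "scope": scope,
    })
    return f"{AUTHORIZATION_ENDPOINT}?{query}", state


def build_token_request_body(code: str, client_id: str, client_secret: str,
                             redirect_uri: str) -> str:
    """Step 2: form body POSTed to TOKEN_ENDPOINT to exchange the code for an access token."""
    return urllib.parse.urlencode({
        "grant_type": "authorization_code",
        "code": code,
        "redirect_uri": redirect_uri,
        "client_id": client_id,
        "client_secret": client_secret,
    })


# Hypothetical values: use your own client ID, registered redirect URI, and scopes.
url, state = build_authorization_url("my-client-id", "https://appian.example.com/oauth/callback",
                                     "openid profile")
print(url)
```

Sending the client secret in the form body, as in step 2, is one of the client-authentication options RFC 6749 allows (section 2.3.1); HTTP Basic is the other common one, and each provider specifies which it accepts.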

Conclusion: OAuth 2.0: Authorization Code Grant (D) is the best method. It ensures user consent, complies with LinkedIn’s API requirements, and uses Appian’s secure integration capabilities. In practice, you would configure a Connected System in Appian with LinkedIn’s Client ID, Client Secret, Authorization Endpoint (e.g., https://www.linkedin.com/oauth/v2/authorization), and Token Request Endpoint (e.g., https://www.linkedin.com/oauth/v2/accessToken), then use an Integration object to call LinkedIn APIs with the access token. This solution is scalable, secure, and consistent with Appian Lead Developer certification standards for third-party integrations.

Reference:

Appian Documentation: "Setting Up a Connected System with the OAuth 2.0 Authorization Code Grant" (Connected Systems).

Appian Lead Developer Certification: Integration Module (OAuth 2.0 Configuration and Best Practices).

LinkedIn Developer Documentation: "OAuth 2.0 Authorization Code Flow" (API Authentication Requirements).

Question 7

A customer wants to integrate a CSV file into their Appian application once a day; the file is sent every night at 1:00 AM. It contains hundreds of thousands of items that users need as soon as their workday starts at 8:00 AM. Considering the high volume of data to manipulate and the nature of the operation, what is the best technical option to meet the requirement?

- A. Use an Appian Process Model, initiated after every integration, to loop on each item and update it to the business requirements.

- B. Build a complex and optimized view (relevant indices, efficient joins, etc.), and use it every time a user needs to use the data.

- C. Create a set of stored procedures to handle the volume and the complexity of the expectations, and call it after each integration.

- D. Process what can be completed easily in a process model after each integration, and complete the most complex tasks using a set of stored procedures.

Answer:

C

Explanation:

Comprehensive and Detailed In-Depth Explanation:

As an Appian Lead Developer, handling a daily CSV integration with hundreds of thousands of items

requires a solution that balances performance, scalability, and Appian’s architectural strengths. The

timing (1:00 AM integration, 8:00 AM availability) and data volume necessitate efficient processing

and minimal runtime overhead. Let’s evaluate each option based on Appian’s official documentation

and best practices:

A . Use an Appian Process Model, initiated after every integration, to loop on each item and update

it to the business requirements:

This approach involves parsing the CSV in a process model and using a looping mechanism (e.g., a

subprocess or script task with a!forEach) to process each item. While Appian process models are

excellent for orchestrating workflows, they are not optimized for high-volume data processing.

Looping over hundreds of thousands of records would strain the process engine, leading to timeouts,

memory issues, or slow execution—potentially missing the 8:00 AM deadline. Appian’s

documentation warns against using process models for bulk data operations, recommending

database-level processing instead. This is not a viable solution.

B . Build a complex and optimized view (relevant indices, efficient joins, etc.), and use it every time a

user needs to use the data:

This suggests loading the CSV into a table and creating an optimized database view (e.g., with indices

and joins) for user queries via a!queryEntity. While this improves read performance for users at 8:00

AM, it doesn’t address the integration process itself. The question focuses on processing the CSV

(“manipulate” and “operation”), not just querying. Building a view assumes the data is already

loaded and transformed, leaving the heavy lifting of integration unaddressed. This option is

incomplete and misaligned with the requirement’s focus on processing efficiency.

C . Create a set of stored procedures to handle the volume and the complexity of the expectations,

and call it after each integration:

This is the best choice. Stored procedures execute inside the database, which is designed for high-volume data manipulation. They can transform data and apply business logic across entire tables with set-based statements. In this scenario, an Appian process model can start shortly after 1:00 AM (using a timer event) once the CSV has been received (e.g., via FTP or another file-transfer mechanism). The model then calls a stored procedure through the “Execute Stored Procedure” smart service. The CSV can be bulk-loaded (e.g., with SQL Server’s BULK INSERT or the target database’s equivalent), and the stored procedure then processes the data and updates the tables, all within the database’s optimized environment. This makes completion well before 8:00 AM realistic. It also follows Appian’s recommendation to offload complex, large-scale data operations to the database layer while keeping Appian as the orchestration layer.

D . Process what can be completed easily in a process model after each integration, and complete

the most complex tasks using a set of stored procedures:

This hybrid approach splits the workload: simple tasks (e.g., validation) in a process model, and

complex tasks (e.g., transformations) in stored procedures. While this leverages Appian’s strengths

(orchestration) and database efficiency, it adds unnecessary complexity. Managing two layers of

processing increases maintenance overhead and risks partial failures (e.g., process model timeouts

before stored procedures run). Appian’s best practices favor a single, cohesive approach for bulk data

integration, making this less efficient than a pure stored procedure solution (C).

Conclusion: Creating a set of stored procedures (C) is the best option. It leverages the database’s

native capabilities to handle the high volume and complexity of the CSV integration, ensuring fast,

reliable processing between 1:00 AM and 8:00 AM. Appian orchestrates the trigger and integration

(e.g., via a process model), while the stored procedure performs the heavy lifting—aligning with

Appian’s performance guidelines for large-scale data operations.
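To show why pushing the work into the database beats a per-item loop, here is a minimal Python sketch that uses an in-memory SQLite database as a stand-in. SQLite has no stored procedures, so the "procedure" is modeled as a Python function that issues set-based SQL. In the real solution, that SQL would live in the stored procedure called by the Execute Stored Procedure smart service. The file contents, table names, and business rule are hypothetical.

```python
import csv
import io
import sqlite3

# Hypothetical nightly file; the real one holds hundreds of thousands of rows.
NIGHTLY_CSV = """item_code,quantity,unit_price
A100,5,2.50
A101,0,9.99
A102,12,1.25
"""

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE staging_items (item_code TEXT, quantity INTEGER, unit_price REAL);
CREATE TABLE items (item_code TEXT PRIMARY KEY, quantity INTEGER, total_value REAL);
""")


def load_staging(conn: sqlite3.Connection, csv_text: str) -> None:
    """Bulk-load the raw CSV rows into a staging table."""
    conn.execute("DELETE FROM staging_items")  # clear the previous night's file
    reader = csv.DictReader(io.StringIO(csv_text))
    rows = ((r["item_code"], int(r["quantity"]), float(r["unit_price"])) for r in reader)
    # One prepared statement for every row, instead of one round trip per item.
    conn.executemany("INSERT INTO staging_items VALUES (?, ?, ?)", rows)


def process_items(conn: sqlite3.Connection) -> None:
    """Stand-in for the stored procedure: set-based statements apply the
    business rules to all rows at once instead of looping item by item."""
    conn.execute("DELETE FROM items")
    conn.execute("""
        INSERT INTO items (item_code, quantity, total_value)
        SELECT item_code, quantity, quantity * unit_price
        FROM staging_items
        WHERE quantity > 0  -- example business rule: drop empty lines
    """)


# The data changes commit together, or roll back together if anything fails.
with conn:
    load_staging(conn, NIGHTLY_CSV)
    process_items(conn)

print(conn.execute("SELECT item_code, total_value FROM items ORDER BY item_code").fetchall())
# [('A100', 12.5), ('A102', 15.0)]
```

The same shape scales: the row count changes, but the number of statements does not. A process-model loop, by contrast, grows one iteration per item.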

Reference:

Appian Documentation: "Execute Stored Procedure Smart Service" (Process Modeling > Smart Services).

Appian Lead Developer Certification: Data Integration Module (Handling Large Data Volumes).

Appian Best Practices: "Performance Considerations for Data Integration" (Database vs. Process Model Processing).

Question 8

Your team has deployed an application to Production with an underperforming view. Unexpectedly,

the production data is ten times that of what was tested, and you must remediate the issue. What is

the best option you can take to mitigate their performance concerns?

- A. Bypass Appian’s query rule by calling the database directly with a SQL statement.

- B. Create a table which is loaded every hour with the latest data.

- C. Create a materialized view or table.

- D. Introduce a data management policy to reduce the volume of data.

Answer:

C

Explanation:

Comprehensive and Detailed In-Depth Explanation:

As an Appian Lead Developer, addressing performance issues in production requires balancing

Appian’s best practices, scalability, and maintainability. The scenario involves an underperforming

view due to a significant increase in data volume (ten times the tested amount), necessitating a

solution that optimizes performance while adhering to Appian’s architecture. Let’s evaluate each

option:

A . Bypass Appian’s query rule by calling the database directly with a SQL statement:

This approach involves circumventing Appian’s query rules (e.g., a!queryEntity) and directly

executing SQL against the database. While this might offer a quick performance boost by avoiding

Appian’s abstraction layer, it violates Appian’s core design principles. Appian Lead Developer

documentation explicitly discourages direct database calls, as they bypass security (e.g., Appian’s

row-level security), auditing, and portability features. This introduces maintenance risks,

dependencies on database-specific logic, and potential production instability—making it an

unsustainable and non-recommended solution.

B . Create a table which is loaded every hour with the latest data:

This suggests implementing a staging table updated hourly (e.g., via an Appian process model or ETL

process). While this could reduce query load by pre-aggregating data, it introduces latency (data is

only fresh hourly), which may not meet real-time requirements typical in Appian applications (e.g., a

customer-facing view). Additionally, maintaining an hourly refresh process adds complexity and

overhead (e.g., scheduling, monitoring). Appian’s documentation favors more efficient, real-time

solutions over periodic refreshes unless explicitly required, making this less optimal for immediate

performance remediation.

C . Create a materialized view or table:

This is the best choice. A materialized view pre-computes and stores query results, significantly improving retrieval performance for large datasets. Not every database supports materialized views natively; MySQL, for example, does not. The equivalent there is a summary table refreshed on a schedule or by triggers, which is why the option reads "materialized view or table". In Appian, you can map the materialized view or table to a Data Store Entity, allowing a!queryEntity to fetch data efficiently without changing application logic. Appian Lead Developer training emphasizes leveraging database optimizations like materialized views to handle large data volumes. They reduce query execution time while keeping the data reasonably current with the source, through periodic or triggered refreshes depending on the database. This aligns with Appian’s performance optimization guidelines and addresses the tenfold data increase effectively.

D . Introduce a data management policy to reduce the volume of data:

This involves archiving or purging data to shrink the dataset (e.g., moving old records to an archive

table). While a long-term data management policy is a good practice (and supported by Appian’s

Data Fabric principles), it doesn’t immediately remediate the performance issue. Reducing data

volume requires business approval, policy design, and implementation—delaying resolution. Appian

documentation recommends combining such strategies with technical fixes (like C), but as a

standalone solution, it’s insufficient for urgent production concerns.

Conclusion: Creating a materialized view or table (C) is the best option. It directly mitigates

performance by optimizing data retrieval, integrates seamlessly with Appian’s Data Store, and scales

for large datasets—all while adhering to Appian’s recommended practices. The view can be

refreshed as needed (e.g., via database triggers or schedules), balancing performance and data

freshness. This approach requires collaboration with a DBA to implement but ensures a robust,

Appian-supported solution.
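The "materialized table" pattern can be sketched with Python's built-in sqlite3 module, which, like MySQL, has no native materialized views. The expensive join and aggregation run once per refresh and are stored in a physical table that readers query directly. In Appian, the data store entity would be mapped to that table; this mapping is an assumption of the sketch. All table and column names are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE orders (id INTEGER PRIMARY KEY,
                     customer_id INTEGER REFERENCES customers(id),
                     amount REAL);
-- The "materialized view": a physical table that stores the precomputed result.
CREATE TABLE mv_customer_totals (
    customer_id INTEGER PRIMARY KEY,
    name TEXT,
    order_count INTEGER,
    total_amount REAL
);
""")

# The expensive join + aggregation that the slow view used to run on every read.
REFRESH_SQL = """
INSERT INTO mv_customer_totals (customer_id, name, order_count, total_amount)
SELECT c.id, c.name, COUNT(o.id), COALESCE(SUM(o.amount), 0)
FROM customers c LEFT JOIN orders o ON o.customer_id = c.id
GROUP BY c.id, c.name
"""


def refresh_materialized_view(conn: sqlite3.Connection) -> None:
    """Recompute the result once and store it; run on a schedule or after loads.
    Delete and insert commit together, so the table is never left half-refreshed."""
    with conn:
        conn.execute("DELETE FROM mv_customer_totals")
        conn.execute(REFRESH_SQL)


with conn:
    conn.executemany("INSERT INTO customers VALUES (?, ?)",
                     [(1, "Acme"), (2, "Globex"), (3, "Initech")])
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)",
                     [(1, 1, 100.0), (2, 1, 50.0), (3, 2, 75.0)])

refresh_materialized_view(conn)

# Readers hit the small precomputed table instead of re-running the aggregation.
print(conn.execute(
    "SELECT name, order_count, total_amount FROM mv_customer_totals ORDER BY customer_id"
).fetchall())
```

The trade-off is staleness: between refreshes, readers see the last computed totals. The refresh frequency should therefore match how fresh the data must be.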

Reference:

Appian Documentation: "Performance Best Practices" (Optimizing Data Queries with Materialized Views).

Appian Lead Developer Certification: Application Performance Module (Database Optimization Techniques).

Appian Best Practices: "Working with Large Data Volumes in Appian" (Data Store and Query Performance).

Question 9

You need to generate a PDF document with specific formatting. Which approach would you

recommend?

- A. Create an embedded interface with the necessary content and ask the user to use the browser "Print" functionality to save it as a PDF.

- B. Use the PDF from XSL-FO Transformation smart service to generate the content with the specific format.

- C. Use the Word Doc from Template smart service in a process model to add the specific format.

- D. There is no way to fulfill the requirement using Appian. Suggest sending the content as a plain email instead.

Answer:

B

Explanation:

Comprehensive and Detailed In-Depth Explanation:

As an Appian Lead Developer, generating a PDF with specific formatting is a common requirement,

and Appian provides several tools to achieve this. The question emphasizes "specific formatting,"

which implies precise control over layout, styling, and content structure. Let’s evaluate each option

based on Appian’s official documentation and capabilities:

A . Create an embedded interface with the necessary content and ask the user to use the browser

"Print" functionality to save it as a PDF:

This approach involves designing an interface (e.g., using SAIL components) and relying on the

browser’s native print-to-PDF feature. While this is feasible for simple content, it lacks precision for

"specific formatting." Browser rendering varies across devices and browsers, and print styles (e.g.,

CSS) are largely outside Appian’s control. Appian Lead Developer best practices discourage relying on

client-side functionality for critical document generation due to inconsistency and lack of

automation. This is not a recommended solution for a production-grade requirement.

B . Use the PDF from XSL-FO Transformation smart service to generate the content with the specific

format:

This is the correct choice. The "PDF from XSL-FO Transformation" smart service (available in Appian’s

process modeling toolkit) allows developers to generate PDFs programmatically with precise

formatting using XSL-FO (Extensible Stylesheet Language Formatting Objects). XSL-FO provides fine-

grained control over layout, fonts, margins, and styling—ideal for "specific formatting" requirements.

In a process model, you supply the XSL-FO content (typically generated from your business data) to this smart service, which renders it as a PDF document that can be stored and downloaded. Appian’s documentation highlights this as the preferred method for complex PDF generation, making it a robust, scalable, and Appian-native solution.

C . Use the Word Doc from Template smart service in a process model to add the specific format:

This option uses the "Word Doc from Template" smart service to generate a Microsoft Word

document from a template (e.g., a .docx file with placeholders). While it supports formatting defined

in the template and can be converted to PDF post-generation (e.g., via a manual step or external

tool), it’s not a direct PDF solution. Appian doesn’t natively convert Word to PDF within the platform,

requiring additional steps outside the process model. For "specific formatting" in a PDF, this is less

efficient and less precise than the XSL-FO approach, as Word templates are better suited for editable

documents rather than final PDFs.

D . There is no way to fulfill the requirement using Appian. Suggest sending the content as a plain

email instead:

This is incorrect. Appian provides multiple tools for document generation, including PDFs, as

evidenced by options B and C. Suggesting a plain email fails to meet the requirement of generating a

formatted PDF and contradicts Appian’s capabilities. Appian Lead Developer training emphasizes

leveraging platform features to meet business needs, ruling out this option entirely.

Conclusion: The PDF from XSL-FO Transformation smart service (B) is the recommended approach. It

provides direct PDF generation with specific formatting control within Appian’s process model,

aligning with best practices for document automation and precision. This method is scalable,

repeatable, and fully supported by Appian’s architecture.
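The Python sketch below builds a minimal XSL-FO document from data, using the standard xml.etree.ElementTree module, to show what the smart service works with. It follows the standard XSL-FO structure defined in the W3C XSL specification: a page master, a page sequence, a flow, and blocks. The function name, page size, and content are hypothetical. The sketch does not show how the document is passed to the smart service.

```python
import xml.etree.ElementTree as ET

FO_NS = "http://www.w3.org/1999/XSL/Format"
ET.register_namespace("fo", FO_NS)  # serialize elements with the usual fo: prefix


def fo(tag: str) -> str:
    """Qualify a tag name with the XSL-FO namespace (ElementTree's {uri}tag form)."""
    return f"{{{FO_NS}}}{tag}"


def build_invoice_fo(title: str, lines: list[str]) -> str:
    """Return a minimal XSL-FO document: one A4 page master and one flow of blocks."""
    root = ET.Element(fo("root"))

    # Page geometry lives in the layout-master-set.
    masters = ET.SubElement(root, fo("layout-master-set"))
    page = ET.SubElement(masters, fo("simple-page-master"), {
        "master-name": "A4",
        "page-width": "21cm",
        "page-height": "29.7cm",
        "margin": "2cm",
    })
    ET.SubElement(page, fo("region-body"))

    # Content flows into the body region of pages built from the "A4" master.
    sequence = ET.SubElement(root, fo("page-sequence"), {"master-reference": "A4"})
    flow = ET.SubElement(sequence, fo("flow"), {"flow-name": "xsl-region-body"})

    heading = ET.SubElement(flow, fo("block"), {
        "font-size": "16pt", "font-weight": "bold", "space-after": "6mm"})
    heading.text = title
    for text in lines:
        block = ET.SubElement(flow, fo("block"), {"font-size": "10pt"})
        block.text = text

    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    print(build_invoice_fo("Invoice 42", ["Item A: 10.00", "Item B: 5.00"]))
```

Every layout decision (page size, margins, fonts, spacing) is an explicit attribute in the document. This is the precision the explanation attributes to XSL-FO, and it is what browser printing cannot guarantee.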

Reference:

Appian Documentation: "PDF from XSL-FO Transformation Smart Service" (Process Modeling > Smart Services).

Appian Lead Developer Certification: Document Generation Module (PDF Generation Techniques).

Appian Best Practices: "Generating Documents in Appian" (XSL-FO vs. Template-Based Approaches).

Question 10

You are the project lead for an Appian project with a supportive product owner and complex business

requirements involving a customer management system. Each week, you notice the product owner

becoming more irritated and not devoting as much time to the project, resulting in tickets becoming

delayed due to a lack of involvement. Which two types of meetings should you schedule to address

this issue?

- A. An additional daily stand-up meeting to ensure you have more of the product owner’s time.

- B. A risk management meeting with your program manager to escalate the delayed tickets.

- C. A sprint retrospective with the product owner and development team to discuss team performance.

- D. A meeting with the sponsor to discuss the product owner’s performance and request a replacement.

Answer:

B, C

Explanation:

Comprehensive and Detailed In-Depth Explanation:

As an Appian Lead Developer, managing stakeholder engagement and ensuring smooth project

progress are critical responsibilities. The scenario describes a product owner whose decreasing

involvement is causing delays, which requires a proactive and collaborative approach rather than an

immediate escalation to replacement. Let’s analyze each option:

A . An additional daily stand-up meeting: While daily stand-ups are a core Agile practice to align the

team, adding another one specifically to secure the product owner’s time is inefficient. Appian’s

Agile methodology (aligned with Scrum) emphasizes that stand-ups are for the development team to

coordinate, not to force stakeholder availability. The product owner’s irritation might increase with

additional meetings, making this less effective.

B . A risk management meeting with your program manager: This is a correct choice. Appian Lead

Developer documentation highlights the importance of risk management in complex projects (e.g.,

customer management systems). Delays due to lack of product owner involvement constitute a

project risk. Escalating this to the program manager ensures visibility and allows for strategic

mitigation, such as resource reallocation or additional support, without directly confronting the

product owner in a way that could damage the relationship. This aligns with Appian’s project

governance best practices.

C . A sprint retrospective with the product owner and development team: This is also a correct

choice. The sprint retrospective, as per Appian’s Agile guidelines, is a key ceremony to reflect on

what’s working and what isn’t. Including the product owner fosters collaboration and provides a safe

space to address their reduced involvement and its impact on ticket delays. It encourages team

accountability and aligns with Appian’s focus on continuous improvement in Agile development.

D . A meeting with the sponsor to discuss the product owner’s performance and request a

replacement: This is premature and not recommended as a first step. Appian’s Lead Developer

training emphasizes maintaining strong stakeholder relationships and resolving issues collaboratively

before escalating to drastic measures like replacement. This option risks alienating the product

owner and disrupting the project further, which contradicts Appian’s stakeholder management

principles.

Conclusion: The best approach combines B (risk management meeting) to address the immediate

risk of delays with a higher-level escalation and C (sprint retrospective) to collaboratively resolve the

product owner’s engagement issues. These align with Appian’s Agile and leadership strategies for

Lead Developers.

Reference:

Appian Lead Developer Certification: Agile Project Management Module (Risk Management and Stakeholder Engagement).

Appian Documentation: "Best Practices for Agile Development in Appian" (Sprint Retrospectives and Team Collaboration).

Question 11

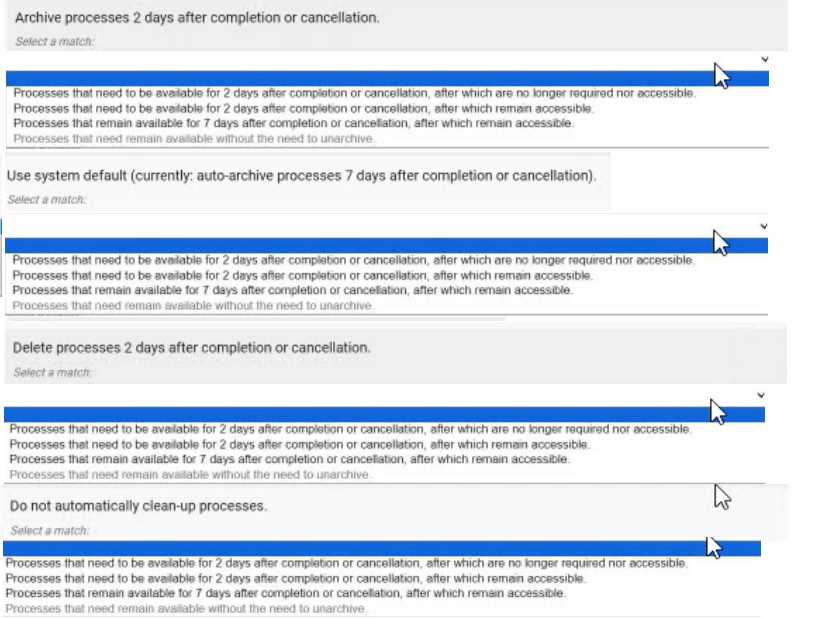

HOTSPOT

You are deciding the appropriate process model data management strategy.

For each requirement, match the appropriate strategy to implement. Each strategy will be used once.

Note: To change your responses, you may deselect your response by clicking the blank space at the

top of the selection list.

Answer:

None

Explanation:

Archive processes 2 days after completion or cancellation. → Processes that need to be available for 2 days after completion or cancellation, after which remain accessible.

Use system default (currently: auto-archive processes 7 days after completion or cancellation). → Processes that remain available for 7 days after completion or cancellation, after which remain accessible.

Delete processes 2 days after completion or cancellation. → Processes that need to be available for 2 days after completion or cancellation, after which are no longer required nor accessible.

Do not automatically clean-up processes. → Processes that need to remain available without the need to unarchive.

Comprehensive and Detailed In-Depth Explanation:

Appian provides process model data management strategies to manage the lifecycle of completed or canceled processes, balancing storage efficiency and accessibility. These strategies (archiving, using the system default, deleting, and not cleaning up) are configured via the Appian Administration Console or process model settings. The Appian Process Management Guide outlines their purposes. The distinction that decides the matching is that archived processes can be unarchived and viewed again, whereas deleted processes are removed permanently.

Archive processes 2 days after completion or cancellation → Processes that need to be available for 2 days after completion or cancellation, after which remain accessible:

Archiving moves processes into a compressed, offline state after the specified period, freeing up active resources. Archived processes are no longer immediately visible, but they are retained and can be unarchived on demand, so they remain accessible after the 2-day window.

Use system default (currently: auto-archive processes 7 days after completion or cancellation) → Processes that remain available for 7 days after completion or cancellation, after which remain accessible:

The system default auto-archives processes after 7 days, as specified. Processes stay in an active state for 7 days and are then archived, after which they can still be accessed by unarchiving, matching the description.

Delete processes 2 days after completion or cancellation → Processes that need to be available for 2 days after completion or cancellation, after which are no longer required nor accessible:

Deletion permanently removes processes after the specified period, and deleted processes cannot be recovered. This is the only strategy that makes processes inaccessible once the 2 days have passed, which is exactly what "no longer required nor accessible" asks for.

Do not automatically clean-up processes → Processes that need to remain available without the need to unarchive:

Not cleaning up processes keeps them in an active state indefinitely, avoiding archiving or deletion. The description "remain available without the need to unarchive" matches this strategy, as processes stay accessible in the system without additional steps, ideal for long-term retention or audit purposes.

Matching Rationale:

Each strategy is used once, as required. The two 2-day requirements differ only in what happens afterwards. If the processes must remain accessible, archive them, because archived processes can be unarchived. If they are no longer required nor accessible, delete them. The system default covers the 7-day case that remains accessible through unarchiving, and disabling automatic cleanup covers processes that must stay available without unarchiving.
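The behavior of each strategy can be summarized in a small Python model. The strategy keys are hypothetical labels, not Appian configuration values. The model also assumes cleanup happens exactly when the threshold is reached; in practice, the timing depends on when the platform runs its cleanup.

```python
# Hypothetical labels for the four strategies: (action, days after completion or cancellation).
STRATEGIES = {
    "archive_after_2_days": ("archive", 2),
    "system_default": ("archive", 7),  # "currently" 7 days; administrators can change it
    "delete_after_2_days": ("delete", 2),
    "no_cleanup": (None, None),
}


def process_state(strategy: str, days_since_completion: int) -> str:
    """Return 'active', 'archived' (can be unarchived) or 'deleted' (gone for good)."""
    action, days = STRATEGIES[strategy]
    if action is None or days_since_completion < days:
        return "active"
    return "archived" if action == "archive" else "deleted"


def still_accessible(state: str) -> bool:
    """Archived processes can be brought back by unarchiving; deleted ones cannot."""
    return state != "deleted"


def needs_unarchive(state: str) -> bool:
    """Only archived processes require the extra unarchive step."""
    return state == "archived"


for name in STRATEGIES:
    state = process_state(name, 3)
    print(f"{name}: day 3 -> {state}, accessible={still_accessible(state)}")
```

Reading the model against the requirements: only deletion makes a process inaccessible, and only "no cleanup" keeps it reachable without an unarchive step.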

Reference: Appian Documentation - Process Management Guide, Appian Administration Console - Process Model Settings, Appian Lead Developer Training - Data Management Strategies.

Question 12

What are two advantages of having High Availability (HA) for Appian Cloud applications?

- A. An Appian Cloud HA instance is composed of multiple active nodes running in different availability zones in different regions.

- B. Data and transactions are continuously replicated across the active nodes to achieve redundancy and avoid single points of failure.

- C. A typical Appian Cloud HA instance is composed of two active nodes.

- D. In the event of a system failure, your Appian instance will be restored and available to your users in less than 15 minutes, having lost no more than the last 1 minute worth of data.

Answer:

B, D

Explanation:

Comprehensive and Detailed In-Depth Explanation:

High Availability (HA) in Appian Cloud is designed to ensure that applications remain operational and

data integrity is maintained even in the face of hardware failures, network issues, or other

disruptions. Appian’s Cloud Architecture and HA documentation outline the benefits, focusing on

redundancy, minimal downtime, and data protection. The question asks for two advantages, and the

options must align with these core principles.

Option B (Data and transactions are continuously replicated across the active nodes to achieve

redundancy and avoid single points of failure):

This is a key advantage of HA. Appian Cloud HA instances use multiple active nodes that continuously replicate data and transactions across the cluster. If one node fails, the others can take over with minimal data loss, eliminating single points of failure. This is a fundamental feature of Appian’s HA setup, which uses a distributed architecture to enhance reliability, as detailed in the Appian Cloud High Availability Guide.

Option D (In the event of a system failure, your Appian instance will be restored and available to your

users in less than 15 minutes, having lost no more than the last 1 minute worth of data):

This is another significant advantage. Appian Cloud HA is engineered for rapid recovery and minimal data loss. The Service Level Agreement (SLA) and HA documentation specify both limits. After a failure, the instance is restored in under 15 minutes, which is the recovery time objective. No more than the last minute of data is lost, which is the recovery point objective. This supports business continuity and meets stringent uptime and data integrity requirements.

Option A (An Appian Cloud HA instance is composed of multiple active nodes running in different

availability zones in different regions):

This is a description of the HA architecture rather than an advantage, and it is also inaccurate. Appian Cloud HA nodes run in different availability zones within the same region, not in different regions. Spreading nodes across availability zones improves fault tolerance, but the benefit is the resulting redundancy and availability, which Options B and D capture. This option describes implementation rather than a direct user or operational advantage.

Option C (A typical Appian Cloud HA instance is composed of two active nodes):

This is a factual statement about the architecture but not an advantage. The number of nodes

(typically two or more, depending on configuration) is a design detail, not a benefit. The advantage

lies in what this setup enables (e.g., redundancy and quick recovery), as covered by B and D.

The two advantages—continuous replication for redundancy (B) and fast recovery with minimal data

loss (D)—reflect the primary value propositions of Appian Cloud HA, ensuring both operational

resilience and data integrity for users.

Reference: Appian Documentation - Appian Cloud High Availability Guide, Appian Cloud Service Level Agreement (SLA), Appian Lead Developer Training - Cloud Architecture.

The two advantages of having High Availability (HA) for Appian Cloud applications are:

B . Data and transactions are continuously replicated across the active nodes to achieve redundancy

and avoid single points of failure. This is an advantage of having HA, as it ensures that there is always

a backup copy of data and transactions in case one of the nodes fails or becomes unavailable. This

also improves data integrity and consistency across the nodes, as any changes made to one node are

automatically propagated to the other node.

D. In the event of a system failure, your Appian instance will be restored and available to your users

in less than 15 minutes, having lost no more than the last 1 minute worth of data. This is an

advantage of having HA, as it provides a high level of service availability and reliability for your Appian instance. If one of the nodes fails or becomes unavailable, the other node takes over and continues to serve requests, keeping downtime and data loss within the limits stated above.

The other options are incorrect for the following reasons:

A . An Appian Cloud HA instance is composed of multiple active nodes running in different

availability zones in different regions. This is not an advantage of having HA, but rather a description

of how HA works in Appian Cloud. An Appian Cloud HA instance consists of two active nodes running

in different availability zones within the same region, not different regions.

C . A typical Appian Cloud HA instance is composed of two active nodes. This is not an advantage of having HA, but rather a description of how HA works in Appian Cloud. A typical Appian Cloud HA instance consists of two active nodes running in different availability zones within the same region. The node count itself is a design detail; the benefits it enables are the ones described in B and D.

Reference:

Appian Documentation, section “High Availability”.

Question 13

You add an index on the searched field of a MySQL table with many rows (>100k). The field would

benefit greatly from the index in which three scenarios?

- A. The field contains a textual short business code.

- B. The field contains long unstructured text such as a hash.

- C. The field contains many datetimes, covering a large range.

- D. The field contains big integers, above and below 0.

- E. The field contains a structured JSON.

Answer:

A, C, D

Explanation:

Comprehensive and Detailed In-Depth Explanation:

Adding an index to a searched field in a MySQL table with over 100,000 rows improves query

performance by reducing the number of rows scanned during searches, joins, or filters. The benefit

of an index depends on the field’s data type, cardinality (uniqueness), and query patterns. MySQL

indexing best practices, as aligned with Appian’s Database Optimization Guidelines, highlight

scenarios where indices are most effective.

Option A (The field contains a textual short business code):

This benefits greatly from an index. A short business code (e.g., a 5-10 character identifier like

"CUST123") typically has high cardinality (many unique values) and is often used in WHERE clauses or

joins. An index on this field speeds up exact-match queries (e.g., WHERE business_code = 'CUST123'),

which are common in Appian applications for lookups or filtering.

Option C (The field contains many datetimes, covering a large range):

This is highly beneficial. Datetime fields with a wide range (e.g., transaction timestamps over years)

are frequently queried with range conditions (e.g., WHERE datetime BETWEEN '2024-01-01' AND

'2025-01-01') or sorting (e.g., ORDER BY datetime). An index on this field optimizes these operations,

especially in large tables, aligning with Appian’s recommendation to index time-based fields for

performance.

Option D (The field contains big integers, above and below 0):

This benefits significantly. Big integers (e.g., IDs or quantities) with a broad range and high cardinality

are ideal for indexing. Queries like WHERE id > 1000 or WHERE quantity < 0 leverage the index for

efficient range scans or equality checks, a common pattern in Appian data store queries.

Option B (The field contains long unstructured text such as a hash):

This benefits less. Long unstructured text (e.g., a 128-character SHA hash) has high cardinality but is less efficient to index because of its size. MySQL can index TEXT and BLOB columns only with a prefix length, and wide keys enlarge the B-tree and slow down writes. Free-text searches are better handled by FULLTEXT indexes than by standard B-tree indexes. Appian advises caution with indexing large text fields unless necessary.

Option E (The field contains a structured JSON):

This is minimally beneficial with a standard index. Although MySQL supports a JSON column type, it cannot index a JSON column directly. Targeted queries such as WHERE JSON_EXTRACT(json_col, '$.key') = 'value' need an index on a generated column that extracts the value. From MySQL 8.0.13 onward, a functional index on the extracted value cast to a scalar type also works. Either option requires additional setup beyond a simple index, reducing its immediate benefit.

For a table with over 100,000 rows, indices are most effective on fields with high selectivity and

frequent query usage (e.g., short codes, datetimes, integers), making A, C, and D the optimal

scenarios.
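The effect can be checked with Python's built-in sqlite3 module. SQLite stands in for MySQL here: both use B-tree indexes, but MySQL's own EXPLAIN output looks different. The sketch indexes a short business code, a datetime, and a signed integer, then asks the query planner how it would run typical lookups. Table, column, and index names are hypothetical.

```python
import sqlite3
from datetime import datetime, timedelta

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE orders (
        id INTEGER PRIMARY KEY,
        business_code TEXT,  -- option A: short business code
        created_at TEXT,     -- option C: ISO-8601 datetimes sort correctly as text
        balance INTEGER,     -- option D: integers above and below 0
        note TEXT            -- left unindexed for comparison
    )
""")
base = datetime(2020, 1, 1)
conn.executemany(
    "INSERT INTO orders (business_code, created_at, balance, note) VALUES (?, ?, ?, ?)",
    (
        (f"CUST{i:06d}", (base + timedelta(hours=7 * i)).isoformat(sep=" "), i - 500, "n/a")
        for i in range(1000)
    ),
)
conn.execute("CREATE INDEX idx_orders_code ON orders (business_code)")
conn.execute("CREATE INDEX idx_orders_created ON orders (created_at)")
conn.execute("CREATE INDEX idx_orders_balance ON orders (balance)")
conn.commit()


def plan(sql: str, params: tuple = ()) -> str:
    """Return SQLite's query-plan description (the 'detail' column) for a query."""
    return " | ".join(row[3] for row in conn.execute("EXPLAIN QUERY PLAN " + sql, params))


# Equality on a short code, a datetime range, and an integer range: all index searches.
print(plan("SELECT * FROM orders WHERE business_code = ?", ("CUST000042",)))
print(plan("SELECT * FROM orders WHERE created_at BETWEEN ? AND ?", ("2020-03-01", "2020-06-01")))
print(plan("SELECT * FROM orders WHERE balance < 0"))
# The unindexed column forces a full table scan.
print(plan("SELECT * FROM orders WHERE note = 'x'"))
```

The first three plans report a SEARCH using the matching index, while the last reports a SCAN of the whole table. On a table with more than 100k rows, that difference is what the answer relies on.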

Reference: Appian Documentation - Database Optimization Guidelines, MySQL Documentation - Indexing Strategies, Appian Lead Developer Training - Performance Tuning.

Question 14

You have created a Web API in Appian with the following URL to call it:

https://exampleappiancloud.com/suite/webapi/user_management/users?username=john.smith.

Which is the correct syntax for referring to the username parameter?

- A. httpRequest.queryParameters.users.username

- B. httpRequest.users.username

- C. httpRequest.formData.username

- D. httpRequest.queryParameters.username

Answer:

D

Explanation:

Comprehensive and Detailed In-Depth Explanation:

In Appian, parameters passed in a Web API URL's query string are accessed within the Web API expression through the httpRequest object. The URL https://exampleappiancloud.com/suite/webapi/user_management/users?username=john.smith includes a query parameter named username with the value john.smith. Appian's Web API documentation specifies how to handle such parameters in the Web API's expression.

Option D (httpRequest.queryParameters.username):

This is the correct syntax. The httpRequest.queryParameters object contains all query parameters from the URL. Because username is a single top-level query parameter, you access it directly as httpRequest.queryParameters.username. This returns the value john.smith as a text string, which can then be used in the Web API logic (e.g., to query a user record). Appian's expression language treats query parameters as key-value pairs under queryParameters, which makes this the standard approach.
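The Python sketch below makes this key-value structure concrete. It splits the example URL into its parts and stores the query string in a dictionary named queryParameters. The build_http_request helper and its dictionary layout are hypothetical. They only mirror the general shape of Appian's httpRequest and are not Appian code. Keeping only the first value of each key is also an assumption of the sketch.

```python
from urllib.parse import parse_qs, urlsplit

URL = "https://exampleappiancloud.com/suite/webapi/user_management/users?username=john.smith"


def build_http_request(url: str, method: str = "GET") -> dict:
    """Hypothetical stand-in for the shape of Appian's httpRequest (not Appian code)."""
    parts = urlsplit(url)
    # parse_qs maps each key to a list of values; this sketch keeps the first one.
    query = {key: values[0] for key, values in parse_qs(parts.query).items()}
    return {"method": method, "path": parts.path, "queryParameters": query}


request = build_http_request(URL)
username = request["queryParameters"]["username"]
print(username)  # john.smith
```

In this sketch, "users" appears only in the path and never as a key under queryParameters. That is why a path like queryParameters.users.username has nothing to resolve.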

Option A (httpRequest.queryParameters.users.username):

This is incorrect. The users part suggests a nested structure (e.g., users as a parameter containing a username subfield), which does not match the URL. In the URL, users is a path segment. The only query parameter is username, which is defined at the top level rather than as a nested object.

Option B (httpRequest.users.username):

This is invalid. The httpRequest object does not have a direct users property. Query parameters are accessed via queryParameters, and nothing in the URL or in Appian's Web API model indicates a users object.

Option C (httpRequest.formData.username):

This is incorrect. The httpRequest.formData object is used for parameters passed in the body of a POST or PUT request (e.g., form submissions), not for query parameters in a GET request URL. Because username is part of the query string (?username=john.smith), formData does not apply.
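Python's urllib.parse can show the difference between the query string and the request body. A GET request to the example URL carries username in the query string and has no body. A hypothetical POST with a form-encoded body carries the same field in the body and leaves the query string empty. The POST URL and body are illustrative assumptions.

```python
from urllib.parse import parse_qs, urlsplit

# GET from the question: username is in the query string, and there is no body.
get_url = "https://exampleappiancloud.com/suite/webapi/user_management/users?username=john.smith"
get_query = parse_qs(urlsplit(get_url).query)
get_body = parse_qs("")

# Hypothetical POST: the same field sent as a form-encoded body instead.
post_url = "https://exampleappiancloud.com/suite/webapi/user_management/users"
post_body = "username=john.smith"
post_query = parse_qs(urlsplit(post_url).query)
post_form = parse_qs(post_body)

print(get_query, get_body)    # {'username': ['john.smith']} {}
print(post_query, post_form)  # {} {'username': ['john.smith']}
```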

The correct syntax relies on Appian's standard handling of query parameters, so the Web API can process the username value reliably.

Reference: Appian Documentation - Web API Development, Appian Expression Language Reference - httpRequest Object.

Question 15

You are just starting with a new team that has been working together on an application for months. They ask you to review some of their views that have been degrading in performance. The views are highly complex, with hundreds of lines of SQL. What is the first step in troubleshooting the degradation?

- A. Go through the entire database structure to obtain an overview, ensure you understand the business needs, and then normalize the tables to optimize performance.

- B. Run an explain statement on the views, identify critical areas of improvement that can be remediated without business knowledge.

- C. Go through all of the tables one by one to identify which of the grouped by, ordered by, or joined keys are currently indexed.

- D. Browse through the tables, note any tables that contain a large volume of null values, and work with your team to plan for table restructure.

Answer:

B

Explanation:

Comprehensive and Detailed In-Depth Explanation:

Troubleshooting performance degradation in complex SQL views within an Appian application requires a systematic approach. Views with hundreds of lines of SQL suggest potential issues with query execution, indexing, or join efficiency. As a new team member, your first step should be to identify the root cause quickly without prematurely overhauling the system. Appian's Performance Troubleshooting Guide and database optimization best practices provide the framework for this process.

Option B (Run an explain statement on the views, identify critical areas of improvement that can be remediated without business knowledge):

This is the recommended first step. Running an EXPLAIN statement (or its equivalent, such as EXPLAIN PLAN in some databases) reports the query execution plan. The plan reveals details like full table scans, missing indices, or inefficient joins. This technical analysis can identify immediate optimization opportunities (e.g., adding indices or rewriting subqueries) without requiring business input, so you can address low-hanging fruit quickly. Appian encourages using database tools to diagnose performance issues before involving stakeholders, which makes this a practical starting point while you familiarize yourself with the application.
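The sketch below shows the kind of evidence an EXPLAIN statement provides. It uses SQLite's EXPLAIN QUERY PLAN from Python as a stand-in for MySQL's EXPLAIN or PostgreSQL's EXPLAIN. The customers and orders tables, the customer_orders view, and the index idx_orders_customer are hypothetical. A real view with hundreds of lines of SQL would produce a much longer plan. Automatic indexing is switched off so that SQLite reports the missing index instead of quietly building a temporary one.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Keep SQLite from building a temporary index, so a missing index shows up as a SCAN.
conn.execute("PRAGMA automatic_index = OFF")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, region TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL);
    -- A heavily simplified stand-in for a complex reporting view.
    CREATE VIEW customer_orders AS
        SELECT o.id AS order_id, o.customer_id, o.total, c.region
        FROM orders AS o
        JOIN customers AS c ON c.id = o.customer_id;
""")


def explain(sql: str) -> list[str]:
    """Return the detail text of each step in SQLite's query plan."""
    return [row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + sql)]


query = "SELECT order_id, total FROM customer_orders WHERE customer_id = 42"

before = explain(query)
# Remediation suggested by the plan: index the filter and join key on orders.
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
after = explain(query)

print("before:", before)
print("after: ", after)
```

A SCAN step on a table the query filters or joins on is the kind of critical area that can be remediated without business knowledge, in this case by adding an index.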

Option A (Go through the entire database structure to obtain an overview, ensure you understand the business needs, and then normalize the tables to optimize performance):

This is too broad and time-consuming as a first step. Understanding business needs and normalizing tables are valuable, but both require collaboration with the team and stakeholders, which delays action. This work is better suited to a later phase, after the initial technical analysis.

Option C (Go through all of the tables one by one to identify which of the grouped by, ordered by, or joined keys are currently indexed):

Manually checking indices is useful but inefficient without first knowing which queries are problematic. The EXPLAIN statement provides targeted insight into index usage, which makes it a more direct first step than a manual table-by-table review.

Option D (Browse through the tables, note any tables that contain a large volume of null values, and work with your team to plan for table restructure):

Identifying null values and planning restructures is a long-term optimization strategy, not a first step. It requires team input and may not address the immediate performance degradation, which is better tackled with query-level diagnostics.

Starting with an EXPLAIN statement lets you gather data-driven insights, follow Appian's performance troubleshooting methodology, and proceed with informed optimizations.

Reference: Appian Documentation - Performance Troubleshooting Guide, Appian Lead Developer Training - Database Optimization, MySQL/PostgreSQL Documentation - EXPLAIN Statement.